The mDOT Center

Transforming health and wellness via temporally-precise mHealth interventions

mDOT@MD2K.org

901.678.1526

Collaborating Investigator:

Luis Garcia, The University of Utah

Funding Status:

NIH/NIMH

03/15/24 – 02/28/27

Associated with:

Iris Nguyen, Liying Han, Burke Dambly, Alireza Kazemi, Marina Kogan, Cory Inman, Mani Srivastava, Luis Garcia

Proceedings of the 23rd ACM Conference on Embedded Networked Sensor Systems

May 6, 2025

multimodal foundation models, embodied artificial intelligence

Akash Deep Singh, Brian Wang, Luis Garcia, Xiang Chen, Mani Srivastava

January 1, 2024

IoT privacy, RF sensors, user perception, data inferences, device familiarity, physical appearance, user control

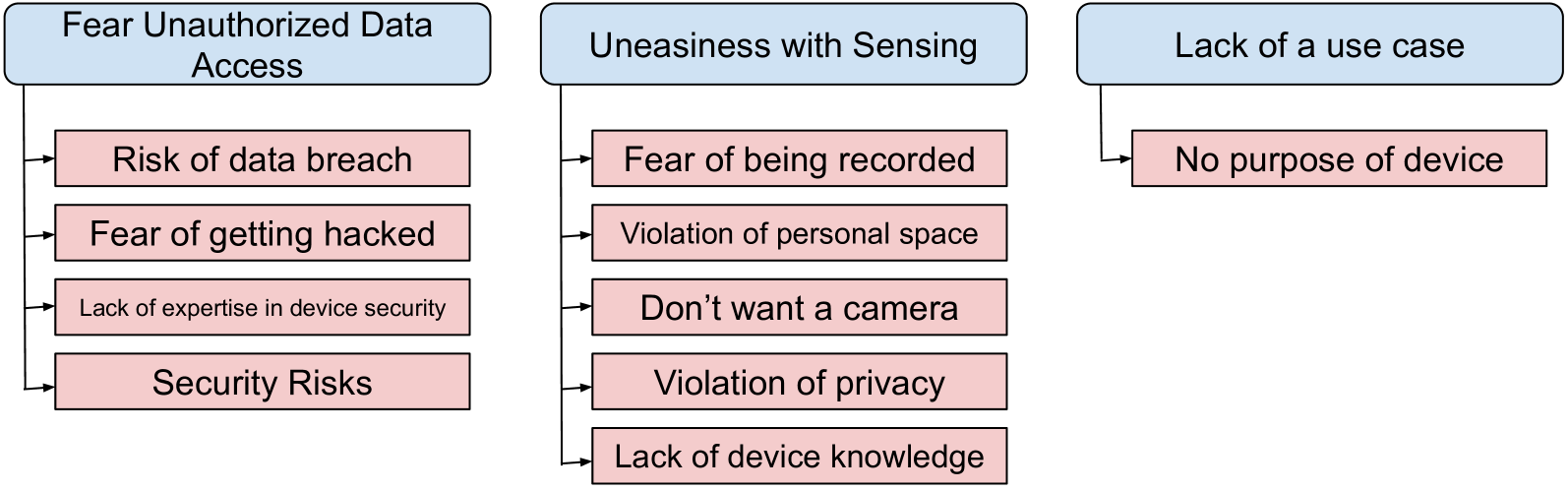

While IoT sensors in physical spaces have provided utility and comfort in our lives, their instrumentation in private and personal spaces has led to growing concerns regarding privacy. The prevailing notion of IoT privacy is that sensors whose data can easily be understood and interpreted by humans (such as cameras) are more privacy-invasive than sensors whose data is not human-understandable, such as RF (radio-frequency) sensors. However, given recent advancements in machine learning, we can not only make sensitive inferences on RF data but also translate between modalities. Thus, the existing notions of privacy for IoT sensors need to be revisited. In this paper, our goal is to understand what factors affect the privacy notions of a non-expert user (someone who is not well-versed in privacy concepts). To this end, we conduct an online study of 162 participants from the USA to find out what factors affect a user’s privacy perception of an RF-based device or sensor. Our findings show that a user’s perception of privacy depends not only on the data collected by the sensor but also on the inferences that can be made from that data, the user’s familiarity with the device, its form factor, and the control the user has over the device design and its data policies. When the data collected by the sensor is not human-interpretable, it is the inferences that can be made from the data, not the data itself, that users care about when making informed decisions regarding device privacy.

This paper challenges the traditional understanding of IoT privacy, asserting that for non-expert users, the perceived invasiveness of sensors—especially non-human-understandable RF sensors—is primarily driven by the sensitive inferences that can be made from collected data, rather than just the data itself. The study highlights that user familiarity with a device, its physical appearance, and the degree of control users have over its design and data policies also significantly shape privacy perceptions. The research suggests that greater transparency about inferences and enhanced user control can foster more trust and willingness to adopt sensing devices in personal spaces.

Brian Wang, Luis Garcia, Mani Srivastava

2024 IEEE Security and Privacy Workshops (SPW)

May 1, 2024

Large Language Models (LLM), Privacy, Contextual Integrity, Smart Environments

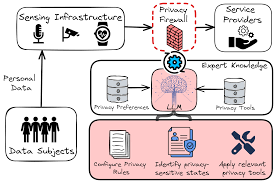

Modern smart buildings and environments rely on sensory infrastructure to capture and process information about their inhabitants. However, it remains challenging to ensure that this infrastructure complies with privacy norms, preferences, and regulations: individuals in smart environments are often preoccupied with their own tasks, lack awareness of the surrounding sensing mechanisms, and are not technical experts. This problem is only exacerbated by the increasing number of sensors being deployed in these environments, as well as by the services seeking to use their sensory data. As a result, individuals face an unmanageable number of privacy decisions, preventing them from effectively acting as their own “privacy firewall” for filtering and managing the multitude of personal information flows. These decisions often require qualitative reasoning over privacy regulations and an understanding of privacy-sensitive contexts.

This paper introduces PrivacyOracle, a prototype system that leverages Large Language Models (LLMs) to automatically configure privacy firewalls in smart built environments. The system addresses the challenge of managing numerous privacy decisions for individuals in sensor-rich spaces by enabling automated decision-making regarding personal data flows. PrivacyOracle achieves this by performing qualitative reasoning over privacy regulations and social norms, identifying privacy-sensitive states from sensor data, and selecting appropriate data transformation tools. Evaluations show high accuracy in identifying sensitive states (up to 98%) and moderate agreement (75%) with social acceptability norms for information flows.
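To make the idea of a privacy firewall concrete, the decision step can be reduced to its simplest form: each information flow, described by its sender, recipient, and information type (loosely following contextual integrity), is mapped to allow, block, or a data transformation. This is a minimal sketch under stated assumptions, not PrivacyOracle's implementation. The hand-written `RULES` table stands in for the LLM's reasoning, and the names `InformationFlow`, `RULES`, `decide`, and the transformation labels are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Tuple

@dataclass(frozen=True)
class InformationFlow:
    """One personal-data flow (hypothetical fields, loosely after contextual integrity)."""
    sender: str            # e.g. a sensor in the building
    recipient: str         # e.g. a service consuming the data
    info_type: str         # kind of data carried by the flow
    sensitive_state: bool  # whether a privacy-sensitive state was detected

# Hypothetical rule table standing in for the LLM's judgment:
# (info_type, recipient) -> action ("allow" or the name of a transformation).
RULES: Dict[Tuple[str, str], str] = {
    ("audio", "cloud_analytics"): "transcribe_and_redact",
    ("video", "cloud_analytics"): "blur_faces",
    ("occupancy", "hvac_controller"): "allow",
}

def decide(flow: InformationFlow) -> str:
    """Return "block", "allow", or the name of a transformation to apply."""
    action = RULES.get((flow.info_type, flow.recipient), "block")  # default-deny
    # During a privacy-sensitive state, untransformed flows are blocked;
    # only flows routed through a transformation continue.
    if flow.sensitive_state and action == "allow":
        return "block"
    return action
```

Default-deny keeps unknown flows closed. A static table cannot capture the qualitative reasoning over regulations and norms that the paper assigns to the LLM; it only shows the shape of the output the firewall needs.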

Ziqi Wang, Mani Srivastava, Akash Deep Singh, Luis Garcia, Zhe Chen, Jun Luo

United States Patent Application 20230288549

September 14, 2023

Ultrawideband Audio Sensing, Impulse Radio Ultra-Wideband (IR-UWB), wireless vibrometry, sound source separation, Time-of-Flight (ToF), RF sensing, through-wall detection, static clutter suppression, vibrating activity localization, audio recovery

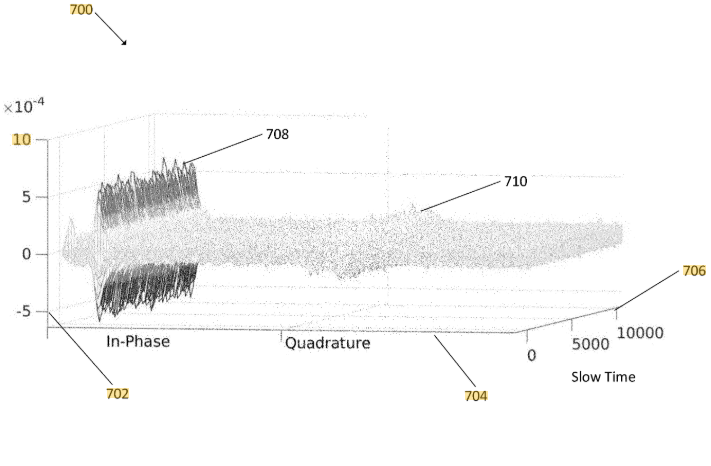

Systems and methods for simultaneously recovering and separating sounds from multiple sources using Impulse Radio Ultra-Wideband (IR-UWB) signals are described. In one embodiment, a device can be configured to generate an audio signal based on audio source ranging using ultrawideband signals. In an embodiment, the device includes transmitter circuitry, receiver circuitry, memory, and a processor. The processor is configured to generate a radio signal that includes an ultra-wideband Gaussian pulse modulated on a radio-frequency carrier. The processor is further configured to transmit the radio signal using the transmitter circuitry, receive one or more backscattered signals at the receiver circuitry, demodulate the one or more backscattered signals to generate one or more baseband signals, and generate a set of data frames based on the one or more baseband signals.

This publication introduces systems and methods for Ultrawideband Audio Sensing that leverage Impulse Radio Ultra-Wideband (IR-UWB) signals to simultaneously recover and separate sounds from multiple sources. Unlike traditional microphones that blend sounds and struggle with background noise, this wireless vibrometry approach senses sound directly from source vibrations using RF signals. Key advantages include the ability to penetrate building materials for non-line-of-sight (NLOS) operation, immunity to non-target noise, and fine-grained sound source separation based on Time-of-Flight (ToF) ranging, even for sources as close as 25 cm apart. The system employs a signal processing pipeline involving phase noise correction, static clutter suppression, vibrating activity localization, and denoising to achieve robust audio recovery.
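Two of the pipeline stages named above, static clutter suppression and vibrating activity localization, together with the Time-of-Flight range conversion, can be sketched in a few lines. This is a minimal sketch under assumptions: the data frames are taken to be a complex baseband matrix with one row per received pulse (slow time) and one column per range bin (fast time), clutter is removed by simple per-bin mean subtraction, and the vibrating source is taken to be the bin with the most residual energy. The function names are hypothetical, and phase noise correction, denoising, and the audio recovery step itself are not reproduced.

```python
import numpy as np

SPEED_OF_LIGHT_M_S = 299_792_458.0

def suppress_static_clutter(frames: np.ndarray) -> np.ndarray:
    """Subtract each range bin's mean over slow time, removing echoes that never change."""
    return frames - frames.mean(axis=0, keepdims=True)

def locate_vibrating_bin(frames: np.ndarray) -> int:
    """Return the range bin with the most residual energy after clutter suppression."""
    residual = suppress_static_clutter(frames)
    energy = np.mean(np.abs(residual) ** 2, axis=0)  # per-bin power over slow time
    return int(np.argmax(energy))

def bin_to_range_m(bin_index: int, fast_time_step_s: float) -> float:
    """Time of flight to one-way distance: r = c * t / 2 (the pulse travels out and back)."""
    return SPEED_OF_LIGHT_M_S * bin_index * fast_time_step_s / 2.0

# Usage on synthetic data: static echoes in every bin plus one vibrating reflector in bin 20.
rng = np.random.default_rng(0)
static = rng.normal(size=64) + 1j * rng.normal(size=64)
frames = np.tile(static, (1000, 1))          # 1000 pulses x 64 range bins
t = np.arange(1000) / 1000.0                 # assumed 1 kHz pulse rate
frames[:, 20] += 0.5 * np.exp(1j * 0.8 * np.sin(2 * np.pi * 50 * t))
bin_index = locate_vibrating_bin(frames)
print(bin_index, bin_to_range_m(bin_index, 1e-10))
```

Mean subtraction works because walls and furniture return the same echo in every frame, while a vibrating surface modulates its echo over slow time. Because separation happens per range bin, two sources land in different bins whenever their round-trip delays differ by at least one fast-time step.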

Swapnil Sayan Saha, Sandeep Singh Sandha, Luis Garcia, Mani Srivastava

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

July 7, 2022

inertial odometry, dead-reckoning, sequence-learning, resource-constrained devices, neural architecture, hardware-in-the-loop, machine-learning, deep-learning, tracking

Deep inertial sequence learning has shown promising odometric resolution over model-based approaches for trajectory estimation in GPS-denied environments. However, existing neural inertial dead-reckoning frameworks are not suitable for real-time deployment on ultra-resource-constrained (URC) devices due to substantial memory, power, and compute bounds. Current deep inertial odometry techniques also suffer from gravity pollution, high-frequency inertial disturbances, varying sensor orientation, heading rate singularity, and failure in altitude estimation. In this paper, we introduce TinyOdom, a framework for training and deploying neural inertial models on URC hardware. TinyOdom exploits hardware- and quantization-aware Bayesian neural architecture search (NAS) and a temporal convolutional network (TCN) backbone to train lightweight models targeted towards URC devices.

TinyOdom is a framework designed for efficient neural inertial navigation on ultra-resource-constrained (URC) devices, such as microcontrollers, enabling real-time deployment in GPS-denied environments. It addresses common issues in existing inertial odometry, including gravity pollution, high-frequency disturbances, and heading rate singularity, by using hardware- and quantization-aware Bayesian neural architecture search (NAS) and a temporal convolutional network (TCN) backbone. TinyOdom also integrates a magnetometer-, physics-, and velocity-centric sequence-learning formulation and a model-free barometric g-h filter for robust 3D altitude estimation. The framework reduces the size of neural inertial models by 31× to 134× while maintaining or exceeding state-of-the-art localization accuracy, achieving errors between 2.5 m and 12 m over 60 seconds across applications such as pedestrian, animal, aerial, and underwater vehicle dead-reckoning.
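The barometric g-h filter mentioned above is a classic fixed-gain (alpha-beta) tracker. It predicts the next value from the current estimate and rate, then corrects both by fixed fractions `g` and `h` of the measurement residual. The sketch pairs it with the standard-atmosphere pressure-to-altitude approximation. The gains, the conversion, and the function names are assumptions for illustration, not TinyOdom's published configuration.

```python
from typing import Iterable, List

def pressure_to_altitude_m(pressure_hpa: float, sea_level_hpa: float = 1013.25) -> float:
    """Standard-atmosphere approximation of altitude from barometric pressure."""
    return 44330.0 * (1.0 - (pressure_hpa / sea_level_hpa) ** (1.0 / 5.255))

def gh_filter(measurements: Iterable[float], x0: float, dx0: float,
              g: float, h: float, dt: float) -> List[float]:
    """Fixed-gain g-h filter tracking a value (e.g. altitude) and its rate of change."""
    x, dx = x0, dx0
    estimates: List[float] = []
    for z in measurements:
        x_pred = x + dx * dt          # predict with the current rate
        residual = z - x_pred         # measurement minus prediction
        dx = dx + h * residual / dt   # correct the rate estimate
        x = x_pred + g * residual     # correct the value estimate
        estimates.append(x)
    return estimates

# Usage: smooth altitudes derived from a short run of (made-up) barometer readings.
pressures = [1000.0, 999.9, 999.95, 999.8]
altitudes = [pressure_to_altitude_m(p) for p in pressures]
smoothed = gh_filter(altitudes, x0=altitudes[0], dx0=0.0, g=0.4, h=0.05, dt=0.1)
```

Larger `g` and `h` follow altitude changes faster but pass through more barometer noise. Unlike a Kalman filter, the g-h filter needs no noise covariances, only two fixed gains.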

Moustafa Alzantot, Luis Garcia, Mani Srivastava

April 25, 2022

Physiological sensor readings, generative models, GANs, VAEs, time-series data, ECG classification, human activity recognition, synthetic data generation, data imputation, mode collapse mitigation

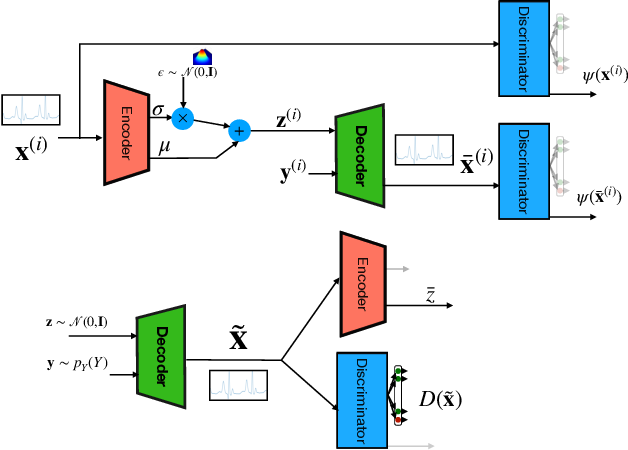

Generative models such as the variational autoencoder (VAE) and the generative adversarial network (GAN) have proven to be incredibly powerful for generating synthetic data that preserves the statistical properties and utility of real-world datasets, especially in the context of images and natural language text. Nevertheless, until now, there has been no successful demonstration of how to apply either method to generate useful physiological sensor data; state-of-the-art techniques in this context have achieved only limited success. We present PHYSIOGAN, a generative model that produces high-fidelity synthetic physiological sensor data readings. PHYSIOGAN consists of an encoder, a decoder, and a discriminator. We evaluate PHYSIOGAN against state-of-the-art techniques using two different real-world datasets: ECG classification and activity recognition from motion sensors. We compare PHYSIOGAN to the baseline models not only on the accuracy of class-conditional generation but also on the sample diversity and sample novelty of the synthetic datasets. We show that PHYSIOGAN generates samples with higher utility than other generative models: classification models trained only on synthetic data generated by PHYSIOGAN show only 10% and 20% decreases in classification accuracy relative to classification models trained on the real data. Furthermore, we demonstrate the use of PHYSIOGAN for sensor data imputation, where it produces plausible results.

PHYSIOGAN is a novel generative model that successfully produces high-fidelity, diverse, and novel synthetic physiological sensor data by combining variational autoencoders (VAEs) and generative adversarial networks (GANs). It significantly outperforms state-of-the-art techniques for conditional generation, proving its utility for downstream tasks like training classification models with only a 10% to 20% decrease in accuracy compared to real data, and effectively imputing missing sensor readings.
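PHYSIOGAN's combination of an encoder, a decoder, and a discriminator follows the general VAE-GAN pattern, whose core ingredients are easy to state in code: the reparameterization trick for sampling latents, the closed-form KL divergence to a standard normal prior, and a generator objective that adds an adversarial term to the VAE loss. This NumPy sketch shows those generic pieces only. The loss weights `beta` and `lam`, the mean-squared reconstruction error, and the function names are assumptions, not PHYSIOGAN's published objective.

```python
import numpy as np

def reparameterize(mu: np.ndarray, log_var: np.ndarray,
                   rng: np.random.Generator) -> np.ndarray:
    """Sample z = mu + sigma * eps, eps ~ N(0, I); in autodiff this lets gradients reach mu and sigma."""
    eps = rng.standard_normal(mu.shape)
    return mu + np.exp(0.5 * log_var) * eps

def kl_to_standard_normal(mu: np.ndarray, log_var: np.ndarray) -> float:
    """Closed-form KL(N(mu, diag(exp(log_var))) || N(0, I)), summed over latent dims."""
    return float(-0.5 * np.sum(1.0 + log_var - mu ** 2 - np.exp(log_var)))

def generator_loss(x: np.ndarray, x_recon: np.ndarray, mu: np.ndarray,
                   log_var: np.ndarray, d_fake: np.ndarray,
                   beta: float = 1.0, lam: float = 1.0) -> float:
    """Reconstruction + beta * KL + lam * non-saturating adversarial term -log D(G(z))."""
    recon = float(np.mean((x - x_recon) ** 2))
    # d_fake holds discriminator probabilities for generated samples; clip to avoid log(0).
    adv = float(-np.mean(np.log(np.clip(d_fake, 1e-12, 1.0))))
    return recon + beta * kl_to_standard_normal(mu, log_var) + lam * adv
```

The discriminator is trained separately to tell real from generated sequences; only the generator-side objective is shown here.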

Renju Liu, Luis Garcia, Mani Srivastava

The Sixth ACM/IEEE Symposium on Edge Computing (ACM SEC ‘21)

December 2021

WebAssembly, IoT security, access control, bare-metal devices, multi-tenancy, sandboxing, resource management, sensor & actuator access, software security engineering, mobile platform security

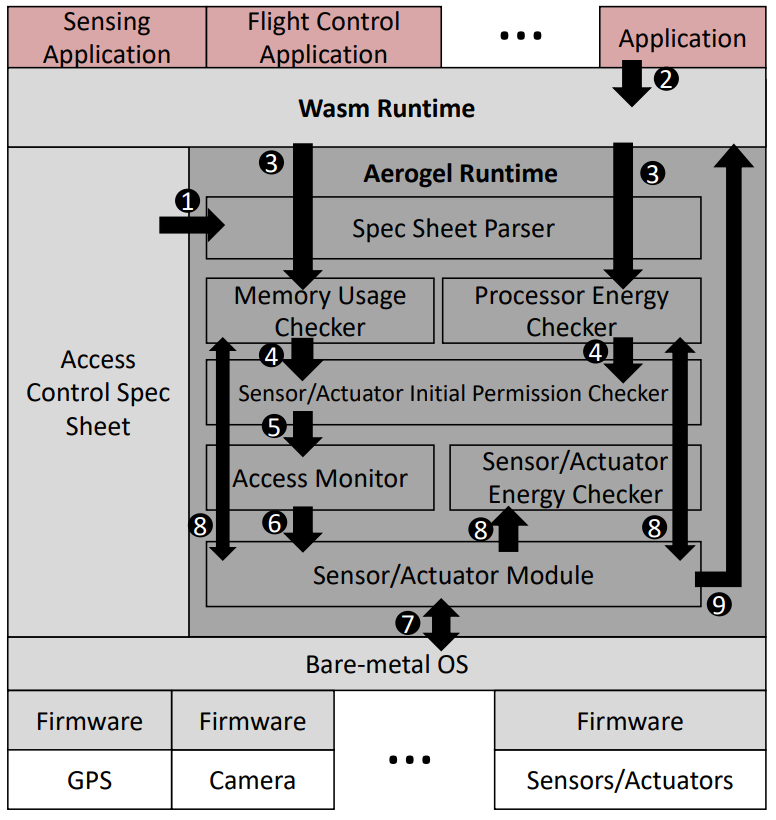

Application latency requirements, privacy, and security concerns have naturally pushed computing onto smartphones and IoT devices in a decentralized manner. In response to these demands, researchers have developed micro-runtimes for WebAssembly (Wasm) on IoT devices so that applications can be streamed to the device as device-independent binaries. However, the migration of Wasm and the associated security research have neglected the urgent need for access control on bare-metal, memory management unit (MMU)-less IoT devices that sense and actuate upon the physical environment. This paper presents Aerogel, an access control framework that addresses the security gaps between bare-metal IoT devices and the Wasm execution environment concerning access control for sensors, actuators, processor energy usage, and memory usage.

Aerogel is a novel, lightweight access control framework designed for WebAssembly (Wasm)-based bare-metal IoT devices that lack a Memory Management Unit (MMU). It addresses the urgent need for fine-grained access control over sensors, actuators, processor energy, and memory usage in these multi-tenant environments. By leveraging Wasm’s inherent sandboxing capabilities, Aerogel effectively isolates applications and enforces user-defined policies without relying on the bare-metal operating system. Evaluations on an nRF52840 development board show that Aerogel introduces minimal runtime overhead (0.19% to 1.04%) and 18.8% to 45.9% extra energy consumption, making it a practical solution for resource-constrained IoT systems.
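The per-application mediation described above can be sketched as a controller that every host call from a Wasm module passes through, holding a peripheral allowlist plus memory and energy budgets for each tenant. This is a minimal sketch under stated assumptions. Aerogel's actual policy format and interfaces are not described here, so `AppPolicy`, `AccessController`, `AccessDenied`, and the millijoule energy accounting are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

class AccessDenied(Exception):
    """Raised when an application's request violates its policy."""

@dataclass
class AppPolicy:
    """Hypothetical per-application policy: peripheral allowlists plus resource budgets."""
    sensors: FrozenSet[str]
    actuators: FrozenSet[str]
    memory_limit_bytes: int
    energy_budget_mj: float
    memory_used_bytes: int = 0
    energy_used_mj: float = 0.0

class AccessController:
    """Checks each host call a Wasm module makes against that module's policy."""

    def __init__(self) -> None:
        self._policies: Dict[str, AppPolicy] = {}

    def register(self, app_id: str, policy: AppPolicy) -> None:
        self._policies[app_id] = policy

    def check_peripheral(self, app_id: str, kind: str, name: str) -> None:
        """Allow a sensor or actuator only if it is on the app's allowlist."""
        policy = self._policies[app_id]
        if kind == "sensor":
            allowed = policy.sensors
        elif kind == "actuator":
            allowed = policy.actuators
        else:
            raise ValueError(f"unknown peripheral kind: {kind}")
        if name not in allowed:
            raise AccessDenied(f"{app_id} may not use {kind} {name!r}")

    def allocate(self, app_id: str, nbytes: int) -> None:
        """Grant memory only while the app stays within its limit."""
        policy = self._policies[app_id]
        if policy.memory_used_bytes + nbytes > policy.memory_limit_bytes:
            raise AccessDenied(f"{app_id} would exceed its memory limit")
        policy.memory_used_bytes += nbytes

    def charge_energy(self, app_id: str, millijoules: float) -> None:
        """Debit estimated processor energy; deny once the budget would be exceeded."""
        policy = self._policies[app_id]
        if policy.energy_used_mj + millijoules > policy.energy_budget_mj:
            raise AccessDenied(f"{app_id} would exceed its energy budget")
        policy.energy_used_mj += millijoules
```

In a Wasm runtime, a module can reach hardware only through imported host functions, so checks of this kind would sit in that host-function layer rather than depend on an MMU.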

CP11 is developing a smartphone-based recording application called the CAPTURE app that synchronizes invasive neural recordings with continuous audio-visual, accelerometry, GPS, subjective report, autonomic physiology, and wearable eye tracking recordings during real-world behaviors like autobiographical memory encoding.

This project aims to integrate wearable devices—smartphones capturing audio-visual, accelerometry, GPS, physiological, and eye-tracking data—with synchronized intracranial neural recordings to study autobiographical memory (AM) formation in real-world settings. AM, which is uniquely personal and complex, has been challenging to analyze due to the limitations of traditional neuroimaging. This study will investigate how the brain encodes, processes, and retrieves AM by tracking real-world behaviors, offering insights into cognitive and neural mechanisms that are compromised in disorders like Alzheimer’s. By developing the CAPTURE app to record multimodal data synchronized with invasive neural data during daily experiences, the research will establish a foundation for neuromodulation approaches to enhance memory in real-world contexts. The project will leverage the NeuroPace Responsive Neurostimulation System, implanted in over 2,000 epilepsy patients, as a unique opportunity to collect direct neural data associated with AM formation and potentially develop real-world memory restoration tools.
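A core technical requirement of the CAPTURE app is aligning streams recorded on different clocks, such as the phone and the implanted neural recording system. The source does not describe the synchronization method, so the following is a minimal sketch under two stated assumptions: the offset between the two clocks has already been estimated (for example from a shared marker event) and stays constant, and each phone event is matched to the nearest neural sample within a tolerance. The function name and parameters are hypothetical.

```python
from bisect import bisect_left
from typing import List, Optional, Sequence

def align_events(reference_ts: Sequence[float], event_ts: Sequence[float],
                 clock_offset_s: float, tolerance_s: float) -> List[Optional[int]]:
    """Map each phone-clock event to the index of the nearest reference sample.

    reference_ts: sorted sample times on the reference (neural recording) clock.
    clock_offset_s: reference clock minus phone clock, assumed constant.
    Events with no reference sample within tolerance_s map to None.
    """
    matches: List[Optional[int]] = []
    for t in event_ts:
        t_ref = t + clock_offset_s              # convert to the reference clock
        i = bisect_left(reference_ts, t_ref)    # first sample at or after t_ref
        candidates = [j for j in (i - 1, i) if 0 <= j < len(reference_ts)]
        best = min(candidates, key=lambda j: abs(reference_ts[j] - t_ref), default=None)
        if best is not None and abs(reference_ts[best] - t_ref) <= tolerance_s:
            matches.append(best)
        else:
            matches.append(None)
    return matches
```

Real recordings would also have to handle clock drift, which a single constant offset does not model.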

TR&D3 is pushing new methods for efficiently learning robust embedding representations from multimodal sensor data streams from wearables and intracranial recordings.

CP11 challenges TR&D3 to develop neural foundation models to detect spatiotemporal events.

CP11 will receive deep-learning-based neural foundation models that can be used to create analytics pipelines for a variety of downstream tasks involving the detection of spatiotemporal events as people move through real-world spaces, for the purpose of assisting in memory formation.
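One widely used way to learn shared embeddings across paired modalities (for example, a wearable stream and a time-aligned window of intracranial data) is a contrastive objective such as symmetric InfoNCE, which pulls matching pairs together and pushes mismatched pairs in the batch apart. The source does not say which methods TR&D3 uses, so this NumPy sketch illustrates the general technique only. The temperature value and the function names are assumptions.

```python
import numpy as np

def _cross_entropy_on_diagonal(logits: np.ndarray) -> float:
    """Mean cross-entropy where row i's correct class is column i."""
    shifted = logits - logits.max(axis=1, keepdims=True)  # numerical stability
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=1, keepdims=True))
    return float(-np.mean(np.diag(log_probs)))

def symmetric_info_nce(a: np.ndarray, b: np.ndarray, temperature: float = 0.1) -> float:
    """Contrastive loss for N paired embeddings: a[i] should match b[i] and nothing else."""
    a = a / np.linalg.norm(a, axis=1, keepdims=True)
    b = b / np.linalg.norm(b, axis=1, keepdims=True)
    logits = a @ b.T / temperature  # cosine similarities scaled by temperature
    return 0.5 * (_cross_entropy_on_diagonal(logits) + _cross_entropy_on_diagonal(logits.T))
```

Averaging both directions treats the two modalities symmetrically, so either one can later serve as the query when searching for matching events.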

This project aims to unlock the potential of combining wearable mobile recording devices, such as smartphones, that capture continuous audio-visual, accelerometry, GPS, subjective report, autonomic physiology, and wearable eye-tracking recordings with precisely synchronized intracranial neural recordings during real-world behaviors. Autobiographical memory (AM) formation is a critical human behavior that has been difficult to study with traditional neuroimaging methods. Thus, the proposed project aims to develop a smartphone-based recording application (CAPTURE app; R61 phase) synchronized with wearables and invasive neural recordings during real-world behaviors like autobiographical memory encoding (R33 phase).
