CP 1: Novel Use of mHealth Data to Identify States of Vulnerability and Receptivity to JITAIs

CP / Smoking Cessation / TR&D1 / TR&D2 / TR&D3

ACM on Interactive, Mobile, Wearable, and Ubiquitous Technologies

July 7, 2022

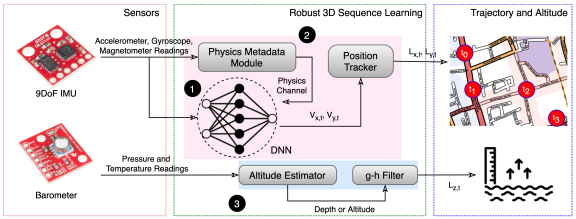

In this paper, we introduce TinyOdom, a framework for training and deploying neural inertial models on URC hardware.

ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

July 7, 2022

earable, network architecture search, neural networks, machine learning, datasets, filters, human activity, head-pose, TinyML, optimization, hardware-in-the-loop

tinyML Summit

March 2022

neural networks, edge computing, IoT platforms, AI-based inference, TinyML, activity detection models

Machine Learning for Health (ML4H)

November 15, 2022

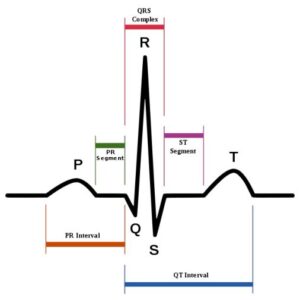

generative model, electrocardiogram, data augmentation

We present CardiacGen, a Deep Learning framework for generating synthetic but physiologically plausible cardiac signals like ECG. Based on the physiology of cardiovascular system function, we propose a modular hierarchical generative model and impose explicit regularizing constraints for training each module using multi-objective loss functions. The model comprises 2 modules, an HRV module focused on producing realistic Heart-Rate-Variability characteristics and a Morphology module focused on generating realistic signal morphologies for different modalities. We empirically show that in addition to having realistic physiological features, the synthetic data from CardiacGen can be used for data augmentation to improve the performance of Deep Learning based classifiers.

We present CardiacGen, a Deep Learning framework for generating synthetic but physiologically plausible cardiac signals like ECG.

ACM Symposium on User Interface Software and Technology (2023)

October 29, 2023

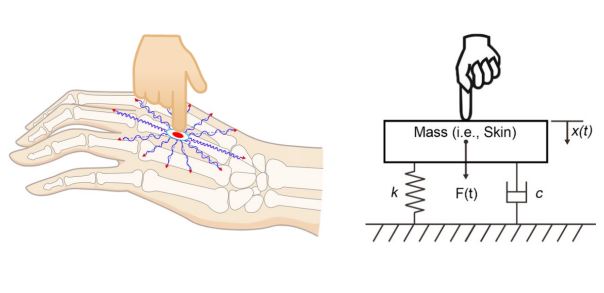

gesture recognition, finger interaction, vibration sensing, unsupervised adversarial training

Wearable devices like smartwatches and smart wristbands have gained substantial popularity in recent years. However, their small interfaces create inconvenience and limit computing functionality. To fill this gap, we propose ViWatch, which enables robust finger interactions under deployment variations, and relies on a single IMU sensor that is ubiquitous in COTS smartwatches. To this end, we design an unsupervised Siamese adversarial learning method. We built a real-time system on commodity smartwatches and tested it with over one hundred volunteers. Results show that the system accuracy is about 97% over a week. In addition, it is resistant to deployment variations such as different hand shapes, finger activity strengths, and smartwatch positions on the wrist. We also developed a number of mobile applications using our interactive system and conducted a user study where all participants preferred our unsupervised approach to supervised calibration. The demonstration of ViWatch is shown at https://youtu.be/N5-ggvy2qfI.

ACM Transactions on Embedded Computing Systems (2023)

May 31, 2023

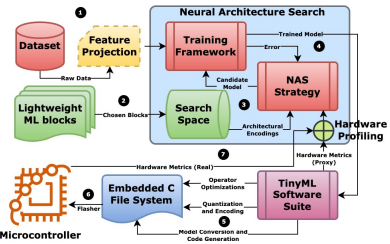

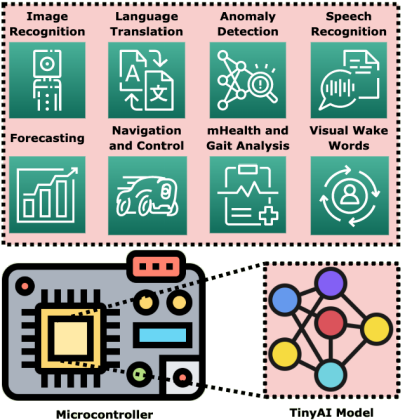

neurosymbolic, neural architecture search, TinyML, AutoML, bayesian, platform-aware

Machine learning at the extreme edge has enabled a plethora of intelligent, time-critical, and remote applications. However, deploying interpretable artificial intelligence systems that can perform high-level symbolic reasoning and satisfy the underlying system rules and physics within the tight platform resource constraints is challenging. In this paper, we introduce TinyNS, the first platform-aware neurosymbolic architecture search framework for joint optimization of symbolic and neural operators. TinyNS provides recipes and parsers to automatically write microcontroller code for five types of neurosymbolic models, combining the context awareness and integrity of symbolic techniques with the robustness and performance of machine learning models. TinyNS uses a fast, gradient-free, black-box Bayesian optimizer over discontinuous, conditional, numeric, and categorical search spaces to find the best synergy of symbolic code and neural networks within the hardware resource budget. To guarantee deployability, TinyNS talks to the target hardware during the optimization process. We showcase the utility of TinyNS by deploying microcontroller-class neurosymbolic models through several case studies. In all use cases, TinyNS outperforms purely neural or purely symbolic approaches while guaranteeing execution on real hardware.

We introduce TinyNS, the first platform-aware neurosymbolic architecture search framework for joint optimization of symbolic and neural operators.

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

stressful conversations, stress detection, wearables, physiological sensors, inertial sensors

December 2020

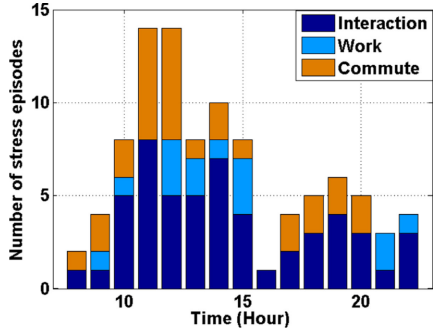

Stressful conversation is a frequently occurring stressor in our daily life. Stressors not only adversely affect our physical and mental health but also our relationships with family, friends, and coworkers. In this paper, we present a model to automatically detect stressful conversations using wearable physiological and inertial sensors. We conducted a lab and a field study with cohabiting couples to collect ecologically valid sensor data with temporally-precise labels of stressors. We introduce the concept of stress cycles, i.e., the physiological arousal and recovery, within a stress event. We identify several novel features from stress cycles and show that they exhibit distinguishing patterns during stressful conversations when compared to physiological response due to other stressors. We observe that hand gestures also show a distinct pattern when stress occurs due to stressful conversations. We train and test our model using field data collected from 38 participants. Our model can determine whether a detected stress event is due to a stressful conversation with an F1-score of 0.83, using features obtained from only one stress cycle, facilitating intervention delivery within 3.9 minutes since the start of a stressful conversation.

In this paper, we present a model to automatically detect stressful conversations using wearable physiological and inertial sensors.

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

mHealth, brushing detection, flossing detection, hand-to-mouth gestures

June 2021

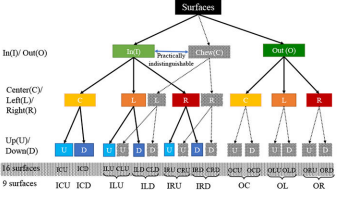

Ensuring that all the teeth surfaces are adequately covered during daily brushing can reduce the risk of several oral diseases. In this paper, we propose the mTeeth model to detect teeth surfaces being brushed with a manual toothbrush in the natural free-living environment using wrist-worn inertial sensors. To unambiguously label sensor data corresponding to different surfaces and capture all transitions that last only milliseconds, we present a lightweight method to detect the micro-event of brushing strokes that cleanly demarcates transitions among brushing surfaces. Using features extracted from brushing strokes, we propose a Bayesian Ensemble method that leverages the natural hierarchy among teeth surfaces and patterns of transition among them. For training and testing, we enrich a publicly-available wrist-worn inertial sensor dataset collected from the natural environment with time-synchronized precise labels of brushing surface timings and moments of transition. We annotate 10,230 instances of brushing on different surfaces from 114 episodes and evaluate the impact of wide between-person and within-person between-episode variability on machine learning model’s performance for brushing surface detection.

In this paper, we propose the mTeeth model to detect teeth surfaces being brushed with a manual toothbrush in the natural free-living environment using wrist-worn inertial sensors.

Proceedings of the 2024 CHI Conference on Human Factors in Computing Systems (CHI ’24)

May 11, 2024

stress-tracking, stressor-logging, visualizations, behavioral changes, stress intervention, emotion/affective computing, wearable sensors, field studies, empirical studies in HCI, longitudinal study, self-reflection, personal informatics, stress reduction, self-awareness, smartwatch apps, smartphone apps, physiological events, and daily stressors.

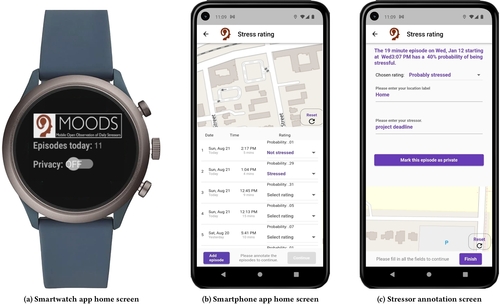

Commercial wearables from Fitbit, Garmin, and Whoop have recently introduced real-time notifications based on detecting changes in physiological responses indicating potential stress. In this paper, we investigate how these new capabilities can be leveraged to improve stress management. We developed a smartwatch app, a smartphone app, and a cloud service, and conducted a 100-day field study with 122 participants who received prompts triggered by physiological responses several times a day. They were asked whether they were stressed, and if so, to log the most likely stressor. Each week, participants received new visualizations of their data to self-reflect on patterns and trends. Participants reported better awareness of their stressors, and self-initiating fourteen kinds of behavioral changes to reduce stress in their daily lives. Repeated self-reports over 14 weeks showed reductions in both stress intensity (in 26,521 momentary ratings) and stress frequency (in 1,057 weekly surveys).

A 100-day field study (MOODS) explored how wearables can enhance stress management by combining real-time physiological stress detection with momentary stressor logging and weekly self-reflective visualizations. The study found significant reductions in self-reported stress intensity and frequency, fostering greater self-awareness of stressors and prompting participants to make 14 types of self-initiated behavioral changes for improved emotional regulation, productivity, and self-care. This work highlights the potential of personal informatics systems in driving lasting stress reduction through user engagement and data insights.

Nassal, M., Sugavanam, N., Aramendi, E., Jaureguibeitia, X., Elola, A., Panchal, A., Ulintz, A., Wang, H., Ertin, E.

Proceedings of the 57th Annual Hawaii International Conference on System Sciences, HICSS 2024

January 3, 2024

Artificial Intelligence (AI), cardiac arrest, resuscitation, end tidal capnography, reinforcement learning

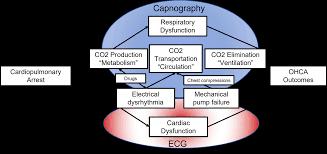

Artificial Intelligence (AI) and machine learning have advanced healthcare by defining relationships in complex conditions. Out-of-hospital cardiac arrest (OHCA) is a medically complex condition with several etiologies. Survival for OHCA has remained static at 10% for decades in the United States. Treatment of OHCA requires the coordination of numerous interventions, including the delivery of multiple medications. Current resuscitation algorithms follow a single strict pathway, regardless of fluctuating cardiac physiology. OHCA resuscitation requires a real-time biomarker that can guide interventions to improve outcomes. End tidal capnography (ETCO2) is commonly implemented by emergency medical services professionals in resuscitation and can serve as an ideal biomarker for resuscitation. However, there are no effective conceptual frameworks utilizing the continuous ETCO2 data. In this manuscript, we detail a conceptual framework using AI and machine learning techniques to leverage ETCO2 in guided resuscitation.

This publication proposes a conceptual framework for utilizing Artificial Intelligence (AI) and machine learning to create End Tidal Capnography (ETCO2) guided resuscitation for Out-of-Hospital Cardiac Arrest (OHCA). The aim is to move beyond rigid, fixed-interval resuscitation algorithms by leveraging continuous ETCO2 data as a real-time biomarker, alongside other physiological measurements, to develop personalized, dynamic interventions that are responsive to a patient’s evolving cardiac physiology. This approach seeks to improve the currently static survival rates for OHCA by enabling a deeper analysis of ETCO2 trends in relation to patient characteristics and interventions, potentially revealing “hidden” patterns and allowing for reward-based algorithms to guide optimal treatment strategies.

IEEE Transactions on Signal Processing

March 3, 2022

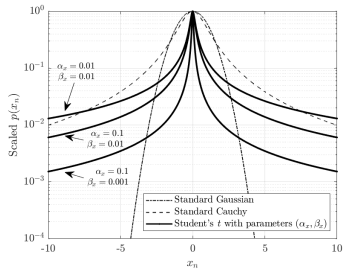

Bayesian estimation methods for sparse blind deconvolution problems conventionally employ Bernoulli-Gaussian (BG) prior for modeling sparse sequences and utilize Markov Chain Monte Carlo (MCMC) methods for the estimation of unknowns. However, the discrete nature of the BG model creates computational bottlenecks, preventing efficient exploration of the probability space even with the recently proposed enhanced sampler schemes. To address this issue, we propose an alternative MCMC method by modeling the sparse sequences using the Normal-Inverse-Gamma (NIG) prior. We derive effective Gibbs samplers for this prior and illustrate that the computational burden associated with the BG model can be eliminated by transferring the problem into a completely continuous-valued framework. In addition to sparsity, we also incorporate time and frequency domain constraints on the convolving sequences. We demonstrate the effectiveness of the proposed methods via extensive simulations and characterize computational gains relative to the existing methods that utilize BG modeling.

IEEE Sensors Journal

November 15, 2022

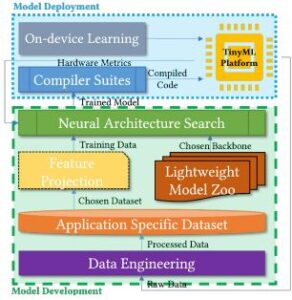

feature projection, Internet of Things, machine learning, microcontrollers, model compression, neural architecture search, neural networks, optimization, sensors, TinyML

This paper highlights the unique requirements of enabling onboard machine learning for microcontroller-class devices. We characterize a closed-loop, widely applicable workflow of ML model development for microcontroller-class devices and show that several classes of applications adopt a specific instance of it.

2023 IEEE International Symposium on Antennas and Propagation and USNC-URSI Radio Science Meeting (AP-S/URSI). pp. 53–54.

July 23-28, 2023

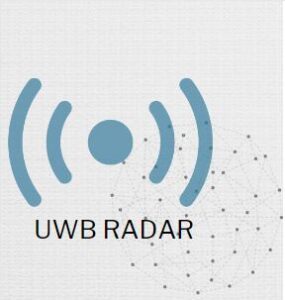

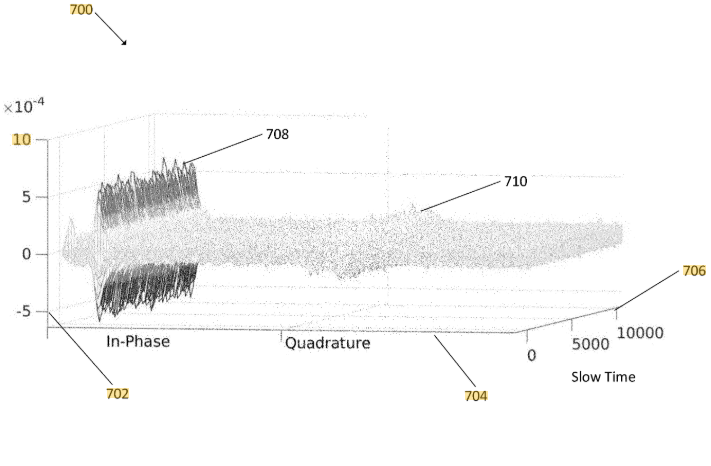

antenna measurements, three-dimensional displays, neural networks, transfer functions, radar antennas, nonhomogeneous media, ultra wideband antennas

Ultrawideband (UWB) radar sensors are an emerging biosensing modality that can be used to assess the dielectric properties of internal tissues. Antenna effects, including antenna-body interactions, limit the sensor’s ability to isolate the weak returns from the internal tissues. In this paper, we develop a data-driven calibration method for recovering the Green’s function of the multilayered media model of the tissue profiles using an Invertible Neural Network (INN). The proposed INN structure is trained to invert the antenna transfer function to form estimates of the Green’s function modeling returns from internal tissues. We use simulation experiments to assess the effectiveness of the trained INN in antenna transfer function inversion.

In this paper, we develop a data-driven calibration method for recovering the Green’s function of the multilayered media model of the tissue profiles using an Invertible Neural Network (INN).

Sensors (Basel)

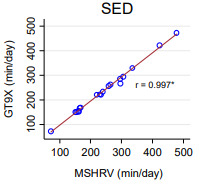

MotionSense HRV, accelerometer, mobile health, physical activity, sedentary behavior

February 18, 2021

MotionSense HRV is a wrist-worn accelerometry-based sensor that is paired with a smartphone and is thus capable of measuring the intensity, duration, and frequency of physical activity (PA). However, little information is available on the validity of the MotionSense HRV. Therefore, the purpose of this study was to assess the concurrent validity of the MotionSense HRV in estimating sedentary behavior (SED) and PA. A total of 20 healthy adults (age: 32.5 ± 15.1 years) wore the MotionSense HRV and ActiGraph GT9X accelerometer (GT9X) on their non-dominant wrist for seven consecutive days during free-living conditions. Raw acceleration data from the devices were summarized into average time (min/day) spent in SED and moderate-to-vigorous PA (MVPA). Additionally, using the COSMED K5 indirect calorimetry system (K5) as a criterion measure, the validity of the MotionSense HRV was examined in simulated free-living conditions. Pearson correlations, mean absolute percent errors (MAPE), Bland-Altman (BA) plots, and equivalence tests were used to examine the validity of the MotionSense HRV against criterion measures. The correlations between the MotionSense HRV and GT9X were high and the MAPE were low for both the SED (r = 0.99, MAPE = 2.4%) and MVPA (r = 0.97, MAPE = 9.1%) estimates under free-living conditions. BA plots illustrated that there was no systematic bias between the MotionSense HRV and criterion measures. The estimates of SED and MVPA from the MotionSense HRV were significantly equivalent to those from the GT9X; the equivalence zones were set at 16.5% for SED and 29% for MVPA. The estimates of SED and PA from the MotionSense HRV were less comparable with those from the K5. The MotionSense HRV yielded comparable estimates for SED and PA when compared with the GT9X accelerometer under free-living conditions. We confirmed the promising application of the MotionSense HRV for monitoring PA patterns for practical and research purposes.

The purpose of this study was to assess the concurrent validity of the MotionSense HRV in estimating sedentary behavior (SED) and PA.
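The agreement statistics named in the abstract above (Pearson correlation, MAPE, and the Bland-Altman bias with 95% limits of agreement) can be sketched in a few lines. This is a minimal illustration, not the study's analysis code: the function names and the minutes-per-day values are hypothetical and are not data from the paper.

```python
import math
from statistics import mean, stdev


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)


def mape(test, criterion):
    """Mean absolute percent error of `test` relative to `criterion`."""
    return 100.0 * mean([abs(t - c) / c for t, c in zip(test, criterion)])


def bland_altman(test, criterion):
    """Return (bias, lower limit, upper limit) using bias +/- 1.96 * SD."""
    diffs = [t - c for t, c in zip(test, criterion)]
    bias = mean(diffs)
    half_width = 1.96 * stdev(diffs)
    return bias, bias - half_width, bias + half_width


# Hypothetical sedentary minutes/day for five people (NOT study data).
device = [600.0, 640.0, 580.0, 700.0, 655.0]
criterion = [610.0, 630.0, 590.0, 690.0, 660.0]

r = pearson_r(device, criterion)
error_pct = mape(device, criterion)
bias, lower, upper = bland_altman(device, criterion)
```

A bias close to zero with narrow limits of agreement corresponds to the "no systematic bias" reading of a Bland-Altman plot.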

Proceedings of the 30th Annual International Conference on Mobile Computing and Networking

December 4, 2024

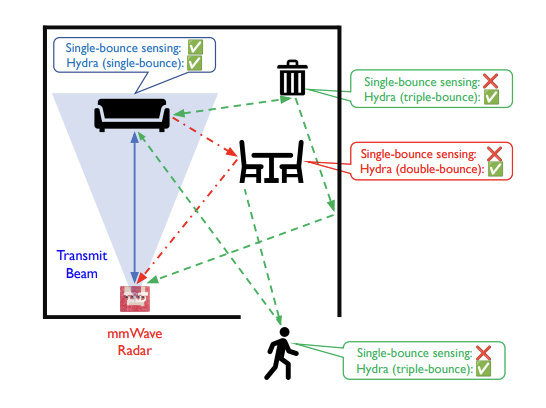

millimeter-wave, multi-bounce scattering, radar, sensing, beyond-field-of-view, localization.

In this paper, we ask, “Can millimeter-wave (mmWave) radars sense objects not directly illuminated by the radar – for instance, objects located outside the transmit beamwidth, behind occlusions, or placed fully behind the radar?” Traditionally, mmWave radars are limited to sense objects that are directly illuminated by the radar and scatter its signals directly back. In practice, however, radar signals scatter to other intermediate objects in the environment and undergo multiple bounces before being received back at the radar. In this paper, we present Hydra, a framework to explicitly model and exploit multi-bounce paths for sensing. Hydra enables standalone mmWave radars to sense beyond-field-of-view objects without prior knowledge of the environment. We extensively evaluate the localization performance of Hydra with an off-the-shelf mmWave radar in five different environments with everyday objects.

“Hydra” is a novel framework that significantly enhances millimeter-wave (mmWave) radar capabilities by exploiting multi-bounce scattering to sense objects located beyond the radar’s direct field-of-view (FoV). This includes objects that are not directly illuminated, are behind occlusions, or are even completely behind the radar unit. Unlike traditional single-bounce radar methods, Hydra operates without requiring prior knowledge of the environment or additional hardware. It employs a sequential detection and localization process, using earlier single-bounce detections as anchors to find objects via double and triple bounces. Tested on a commercial mmWave MIMO radar, Hydra demonstrates a 2× to 10× improvement in median beyond-FoV localization error for human targets compared to conventional single-bounce techniques.

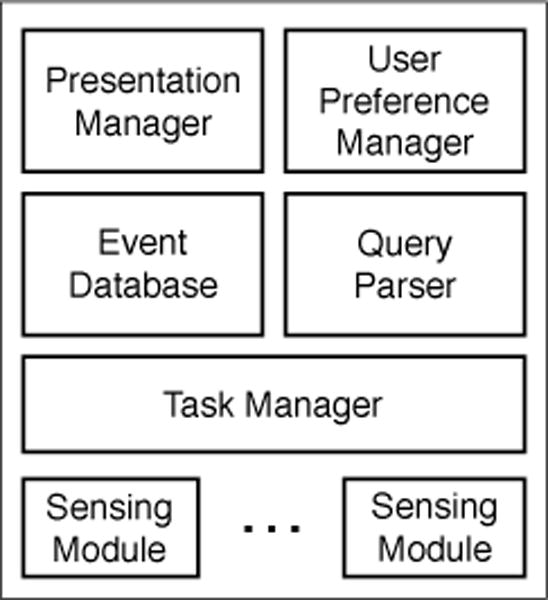

Bo-Jhang Ho, Nima Nikzad, Bharathan Balaji, Mani Srivastava

Proceedings of the 2017 ACM International Joint Conference on Pervasive and Ubiquitous Computing

Proceedings of the 2017 ACM International Symposium on Wearable Computers

December 2017

Context-aware, mobile applications, engagement, just-in-time assessment, push notifications, user studies, mHealth, framework

Mobile technologies that drive just-in-time ecological momentary assessments and interventions provide an unprecedented view into user behaviors and opportunities to manage chronic conditions. The success of these methods relies on engaging the user at the appropriate moment, so as to maximize questionnaire and task completion rates. However, mobile operating systems provide little support to precisely specify the contextual conditions in which to notify and engage the user, and study designers often lack the expertise to build context-aware software themselves. To address this problem, we have developed Emu, a framework that eases the development of context-aware study applications by providing a concise and powerful interface for specifying temporal and contextual constraints for task notifications. In this paper, we present the design of the Emu API and demonstrate its use in capturing a range of scenarios common to smartphone-based study applications.

Emu is a framework designed to simplify the development of context-aware mobile study applications. It provides a concise and powerful interface for specifying temporal and contextual constraints for task notifications, addressing the challenge that mobile operating systems offer limited support for precisely engaging users at appropriate moments. By automating the tracking of contextual states and user responses, Emu maximizes questionnaire and task completion rates in studies, helping to manage chronic conditions and improve study protocol adherence. It significantly reduces code complexity for developers compared to native implementations.
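To make the idea of temporal and contextual notification constraints concrete, here is a minimal sketch. It is not the Emu API: the `NotificationRule` class, its fields, and the activity labels are hypothetical names chosen for illustration only.

```python
from dataclasses import dataclass, field
from datetime import datetime, time


@dataclass
class NotificationRule:
    """Hypothetical rule: notify only inside a time window and an allowed context."""
    task: str
    start: time
    end: time
    allowed_activities: set = field(default_factory=set)

    def should_notify(self, now: datetime, activity: str) -> bool:
        # Temporal constraint: the current time must fall inside the window.
        in_window = self.start <= now.time() <= self.end
        # Contextual constraint: the detected activity must be allowed.
        in_context = activity in self.allowed_activities
        return in_window and in_context


# Hypothetical evening survey, delivered only when the user is still or walking.
rule = NotificationRule(
    task="evening_survey",
    start=time(18, 0),
    end=time(21, 0),
    allowed_activities={"still", "walking"},
)
```

Declaring the constraint as data keeps the study logic separate from the code that tracks context, which is the kind of separation the summary above attributes to the framework.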

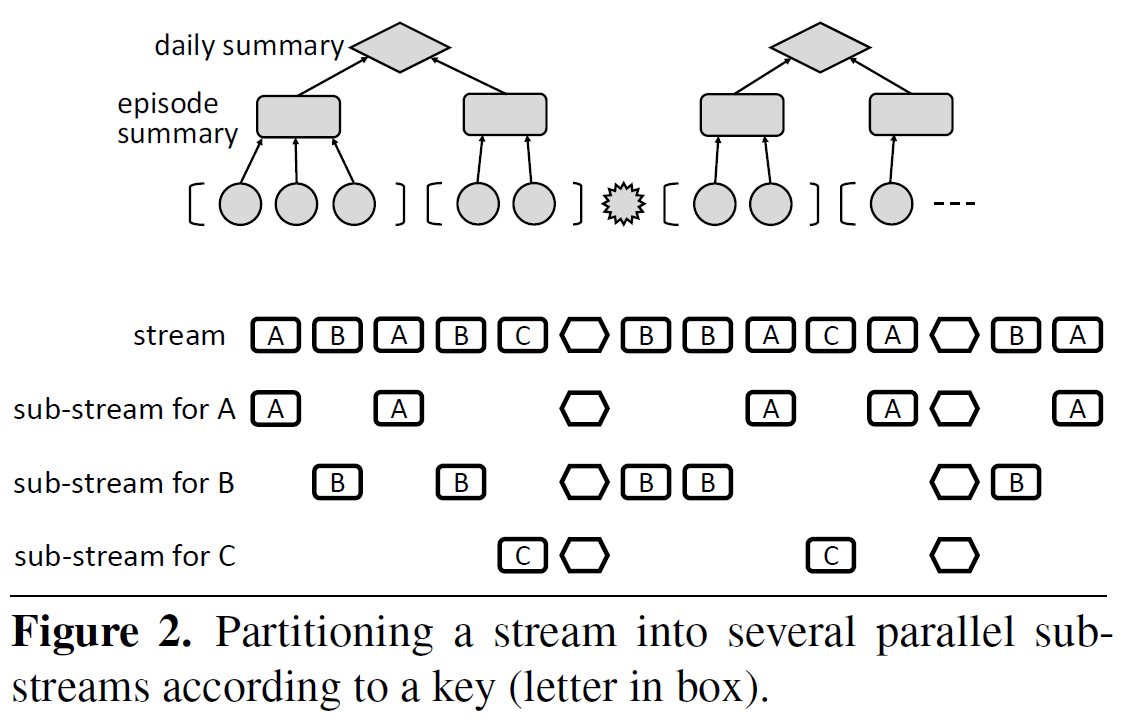

Konstantinos Mamouras, Mukund Raghothaman, Rajeev Alur, Zachary G. Ives, Sanjeev Khanna

Proceedings of the 38th ACM SIGPLAN Conference on Programming Language Design and Implementation

December 2017

Data stream processing, Quantitative Regular Expressions, IoT applications, real-time decision making, relational query languages, regular expressions, modular specification, efficient evaluation, streaming composition, key-based partitioning, pattern-based windows, approximate aggregation.

Real-time decision making in emerging IoT applications typically relies on computing quantitative summaries of large data streams in an efficient and incremental manner. To simplify the task of programming the desired logic, we propose StreamQRE, which provides natural and high-level constructs for processing streaming data. Our language has a novel integration of linguistic constructs from two distinct programming paradigms: streaming extensions of relational query languages and quantitative extensions of regular expressions. The former allows the programmer to employ relational constructs to partition the input data by keys and to integrate data streams from different sources, while the latter can be used to exploit the logical hierarchy in the input stream for modular specifications. We first present the core language with a small set of combinators, formal semantics, and a decidable type system. We then show how to express a number of common patterns with illustrative examples. Our compilation algorithm translates the high-level query into a streaming algorithm with precise complexity bounds on per-item processing time and total memory footprint. We also show how to integrate approximation algorithms into our framework. We report on an implementation in Java, and evaluate it with respect to existing high-performance engines for processing streaming data. Our experimental evaluation shows that (1) StreamQRE allows more natural and succinct specification of queries compared to existing frameworks, (2) the throughput of our implementation is higher than comparable systems (for example, two-to-four times greater than RxJava), and (3) the approximation algorithms supported by our implementation can lead to substantial memory savings.

StreamQRE is a novel programming language designed for real-time decision-making in IoT applications by integrating two powerful paradigms: streaming extensions of relational query languages and quantitative extensions of regular expressions. This allows for natural and modular specification of complex queries over large data streams. Its compiler translates high-level queries into efficient streaming algorithms with guaranteed low memory footprint and fast processing times, outperforming existing high-performance streaming engines like RxJava, Esper, and Flink. StreamQRE also supports approximation algorithms for significant memory savings on computationally intensive tasks like median calculation.
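The combination of key-based partitioning and incremental summaries can be illustrated with a small sketch. This is plain Python, not StreamQRE syntax or its Java implementation: `RunningMean`, `per_key_means`, and the sensor readings are hypothetical. It shows only the general pattern of constant work per item with one small state object per key.

```python
from collections import defaultdict


class RunningMean:
    """Incremental mean for one key; stores only a count and a running total."""

    def __init__(self):
        self.count = 0
        self.total = 0.0

    def update(self, value):
        self.count += 1
        self.total += value
        return self.total / self.count


def per_key_means(stream):
    """Partition (key, value) items by key and yield the running mean per item."""
    state = defaultdict(RunningMean)
    for key, value in stream:
        yield key, state[key].update(value)


# Hypothetical readings from two sensors, interleaved in one stream.
readings = [("s1", 10.0), ("s2", 4.0), ("s1", 20.0), ("s2", 6.0)]
result = list(per_key_means(readings))
```

Memory grows with the number of distinct keys rather than with the length of the stream, which is the property that makes per-item time and memory bounds possible for this kind of query.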

Proceedings of the 2017 Workshop on Wearable Systems and Applications

June 19, 2017

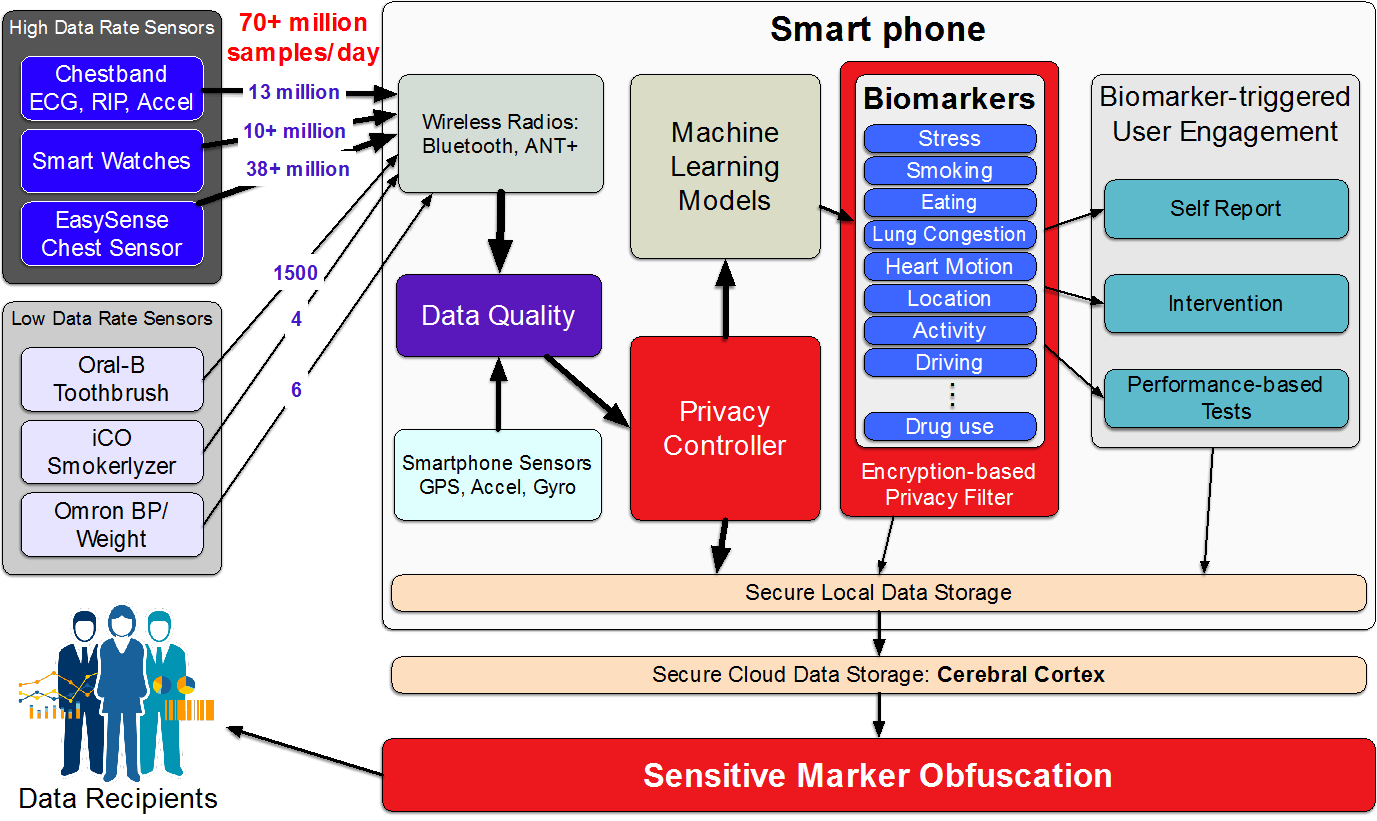

Mobile sensors can track human states, the surrounding context, daily behaviors, and exposures to environmental risk factors in the natural field environment. Real-time analysis of such sensor data makes it possible to deliver personalized recommendations to improve health, wellness, and performance. GPS-navigation systems that provide just-in-time directions for traffic-aware navigation and activity trackers that help users set and achieve daily physical activity goals are widely used early examples. The increasing availability of mobile sensors that allow collection of raw sensor data, along with mobile big data software platforms that allow labeled collection, curation, modeling, and visualization of such data for development and validation of new markers and sensor-triggered interventions, is opening up exciting new research directions. They include novel sensor systems for self-tracking of health, wellness, and performance.

Syed Monowar Hossain, Timothy Hnat, Nazir Saleheen, Nusrat Jahan Nasrin, Joseph Noor, Bo-Jhang Ho, Tyson Condie, Mani Srivastava, Santosh Kumar

Proceedings of the 15th ACM Conference on Embedded Network Sensor Systems

November 6, 2017

mHealth, mobile sensor big data, software architecture, digital biomarkers, wearable sensors

Development and validation studies of new multisensory biomarkers and sensor-triggered interventions require collecting raw sensor data with associated labels in the natural field environment. Unlike platforms for traditional mHealth apps, a software platform for such studies needs to not only support high-rate data ingestion, but also share raw high-rate sensor data with researchers, while supporting high-rate sense-analyze-act functionality in real time. We present mCerebrum, a realization of such a platform, which supports high-rate data collections from multiple sensors with real-time assessment of data quality. A scalable storage architecture (with near optimal performance) ensures quick response despite rapidly growing data volume. Micro-batching and efficient sharing of data among multiple source and sink apps allows reuse of computations to enable real-time computation of multiple biomarkers …

mCerebrum is an open-source mobile sensing software platform designed for the development and validation of digital biomarkers and sensor-triggered interventions. Unlike traditional mHealth apps, it’s built to handle high-rate raw sensor data collection in natural environments, supporting over 70 million samples per day. The platform features a scalable storage architecture (Pebbles) with near-optimal performance, an efficient data exchange architecture (DataKit) for real-time biomarker computation, and a reconfigurable, burden- and context-aware scheduler for participant prompts. With a modular design spanning over 23 apps, mCerebrum has evolved through its use in scientific field studies at ten sites, accumulating 106,806 person-days of data. Evaluations show it significantly outperforms other platforms like AWARE, HealthKit, and Google Fit in data rates, storage throughput, and CPU usage, enabling efficient processing of multi-sensor biomarkers.

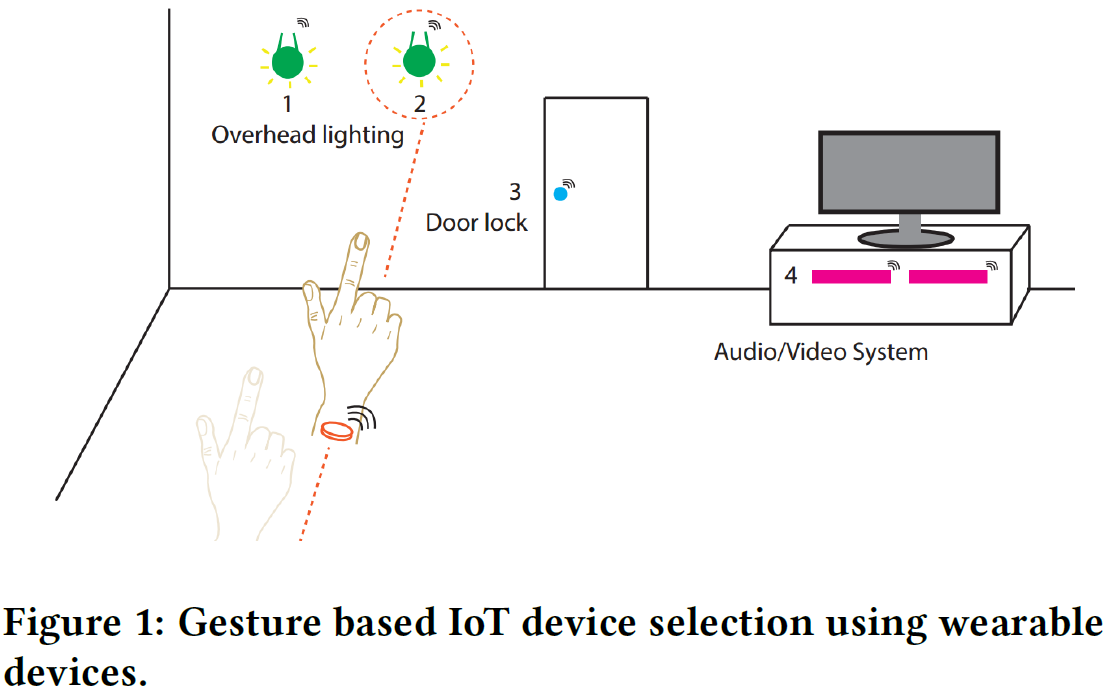

Amr Alanwar, Moustafa Alzantot, Bo-Jhang Ho, Paul Martin, Mani Srivastava

Proceedings of the Second International Conference on Internet-of-Things Design and Implementation

April 2017

Pointing, IoT, hand gestures, smartwatch, UWB, device selection, smart home, human-computer interaction (HCI), gesture recognition, wearable devices.

Although different interaction modalities have been proposed in the field of human-computer interface (HCI), only a few of these techniques could reach the end users because of scalability and usability issues. Given the popularity and the growing number of IoT devices, selecting one out of many devices becomes a hurdle in a typical smart home environment. Therefore, an easy-to-learn, scalable, and non-intrusive interaction modality has to be explored. In this paper, we propose a pointing approach to interact with devices, as pointing is arguably a natural way for device selection. We introduce SeleCon for device selection and control which uses an ultra-wideband (UWB) equipped smartwatch. To interact with a device in our system, people can point to the device to select it, then draw a hand gesture in the air to specify a control action. To this end, SeleCon employs inertial sensors for pointing gesture detection and a UWB transceiver for identifying the selected device from ranging measurements. Furthermore, SeleCon supports an alphabet of gestures that can be used for controlling the selected devices. We performed our experiment in a 9m-by-10m lab space with eight deployed devices. The results demonstrate that SeleCon can achieve 84.5% accuracy for device selection and 97% accuracy for hand gesture recognition. We also show that SeleCon is power efficient enough to sustain daily use by turning off the UWB transceiver when a user’s wrist is stationary.

SeleCon is a novel system that enables natural and scalable interaction with Internet of Things (IoT) devices in smart homes using pointing gestures and hand gestures. Users wear a custom smartwatch equipped with Ultra-Wideband (UWB) and inertial sensors. By pointing to a device, the system identifies the target using UWB ranging data, and then hand gestures drawn in the air control the selected device. SeleCon prioritizes energy efficiency by only activating the power-hungry UWB when wrist motion indicates a potential pointing action. The system demonstrates high accuracy: 84.5% for device selection and 97% for hand gesture recognition.
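As a rough illustration of selecting a device from ranging measurements, the sketch below picks the device whose measured range shrinks the most while the wrist moves during a pointing gesture. This heuristic is an assumption made for illustration and is not claimed to be SeleCon's actual selection algorithm. The function name, device names, and distances are hypothetical.

```python
def select_device(ranges_before, ranges_after):
    """Return the device whose measured range dropped the most during the gesture.

    Both arguments map a device name to a distance in meters, measured at the
    start and at the end of the pointing motion (hypothetical inputs).
    """
    changes = {dev: ranges_after[dev] - ranges_before[dev] for dev in ranges_before}
    # The most negative change means the wrist moved closest to that device.
    return min(changes, key=changes.get)


# Hypothetical ranging measurements in meters (not data from the paper).
before = {"lamp": 4.0, "tv": 5.0, "fan": 3.0}
after = {"lamp": 3.9, "tv": 4.6, "fan": 3.1}
selected = select_device(before, after)
```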

Iris Nguyen, Liying Han, Burke Dambly, Alireza Kazemi, Marina Kogan, Cory Inman, Mani Srivastava, Luis Garcia

Proceedings of the 23rd ACM Conference on Embedded Networked Sensor Systems

May 6, 2025

multimodal foundation models, embodied artificial intelligence

Moustafa Alzantot, Luis Garcia, Mani Srivastava

April 25, 2022

Physiological sensor readings, generative models, GANs, VAEs, time-series data, ECG classification, human activity recognition, synthetic data generation, data imputation, mode collapse mitigation.

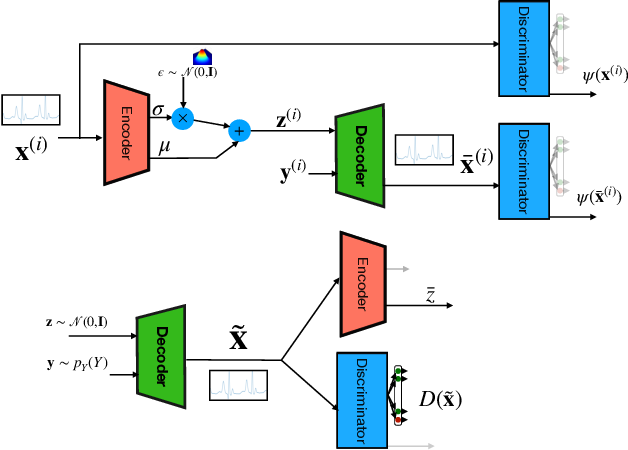

Generative models such as the variational autoencoder (VAE) and the generative adversarial network (GAN) have proven to be incredibly powerful for the generation of synthetic data that preserves statistical properties and utility of real-world datasets, especially in the context of image and natural language text. Nevertheless, until now, there has been no successful demonstration of how to apply either method for generating useful physiological sensory data. The state-of-the-art techniques in this context have achieved only limited success. We present PHYSIOGAN, a generative model to produce high fidelity synthetic physiological sensor data readings. PHYSIOGAN consists of an encoder, decoder, and a discriminator. We evaluate PHYSIOGAN against the state-of-the-art techniques using two different real-world datasets: ECG classification and activity recognition from motion sensor datasets. We compare PHYSIOGAN to the baseline models not only on the accuracy of class conditional generation but also on the sample diversity and sample novelty of the synthetic datasets. We prove that PHYSIOGAN generates samples with higher utility than other generative models by showing that classification models trained on only synthetic data generated by PHYSIOGAN have only 10% and 20% decrease in their classification accuracy relative to classification models trained on the real data. Furthermore, we demonstrate the use of PHYSIOGAN for sensor data imputation in creating plausible results.

PHYSIOGAN is a novel generative model that produces high-fidelity, diverse, and novel synthetic physiological sensor data by combining variational autoencoders (VAEs) and generative adversarial networks (GANs). It outperforms state-of-the-art techniques for conditional generation and is useful for downstream tasks: classification models trained only on its synthetic data show only a 10% and 20% decrease in accuracy relative to models trained on real data, and it can impute missing sensor readings.
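The utility comparison described above (train a classifier on synthetic data, test it on real data, and compare against a classifier trained on real data) can be illustrated with a minimal sketch. Everything here is an assumption for illustration: the nearest-centroid classifier, the toy two-dimensional data, and the function names are hypothetical and are not taken from PHYSIOGAN. The sketch also assumes the reported decrease is measured relative to the real-data accuracy.

```python
from statistics import mean


def fit_centroids(samples, labels):
    """Fit a nearest-centroid classifier: one mean vector per class."""
    centroids = {}
    for label in sorted(set(labels)):
        rows = [s for s, l in zip(samples, labels) if l == label]
        centroids[label] = [mean(col) for col in zip(*rows)]
    return centroids


def predict(centroids, sample):
    """Return the label whose centroid is closest to the sample."""
    def sq_dist(label):
        return sum((a - b) ** 2 for a, b in zip(sample, centroids[label]))
    return min(centroids, key=sq_dist)


def accuracy(centroids, samples, labels):
    """Fraction of samples whose predicted label matches the true label."""
    hits = sum(predict(centroids, s) == l for s, l in zip(samples, labels))
    return hits / len(labels)


def relative_drop(acc_real, acc_synth):
    """Accuracy lost by training on synthetic data, relative to real data."""
    return (acc_real - acc_synth) / acc_real


# Toy stand-ins (hypothetical): real training data, synthetic training data,
# and a held-out real test set.
real_train_x = [[0, 0], [1, 0], [0, 1], [4, 4], [5, 4], [4, 5]]
real_train_y = [0, 0, 0, 1, 1, 1]
synth_train_x = [[1, 1], [2, 1], [3, 3], [3, 2]]
synth_train_y = [0, 0, 1, 1]
real_test_x = [[0.5, 0.5], [1, 0.5], [2.2, 2.1], [4.5, 4.5], [5, 4]]
real_test_y = [0, 0, 0, 1, 1]

acc_real = accuracy(fit_centroids(real_train_x, real_train_y),
                    real_test_x, real_test_y)
acc_synth = accuracy(fit_centroids(synth_train_x, synth_train_y),
                     real_test_x, real_test_y)
drop = relative_drop(acc_real, acc_synth)
```

On this toy data the classifier trained on real samples labels all five test points correctly, while the one trained on synthetic samples gets four of five, a relative drop of about 20%. The numbers are an artifact of the toy data and say nothing about PHYSIOGAN itself.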

International Conference on Learning Representations (ICLR)

November 13, 2021

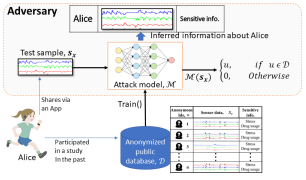

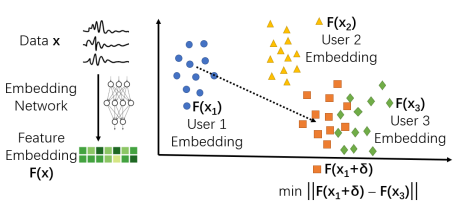

privacy, user re-identification, wrist-worn accelerometers

We characterize the re-identification risks inherent in motion sensor data collected from wrist-worn devices in users’ natural environment. We use an open-set formulation, train a deep learning architecture with a new loss function, and apply our model to a new data set.

IEEE/ACM Symposium on Edge Computing (SEC)

December 14, 2021

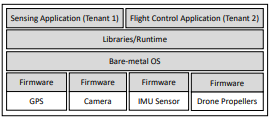

software security engineering, mobile platform security

Application latency requirements, privacy, and security concerns have naturally pushed computing onto smartphone and IoT devices in a decentralized manner. In response to these demands, researchers have developed micro-runtimes for WebAssembly (Wasm) on IoT devices, so that applications can be streamed to a runtime that runs device-independent target binaries. However, the migration of Wasm and the associated security research have neglected the urgent need for access control on bare-metal, memory management unit (MMU)-less IoT devices that sense and actuate upon the physical environment. This paper presents Aerogel, an access control framework that addresses security gaps between the bare-metal IoT devices and the Wasm execution environment concerning access control for sensors, actuators, processor energy usage, and memory usage. In particular, we treat the runtime as a multi-tenant environment, where each Wasm-based application is a tenant. We leverage the inherent sandboxing mechanisms of Wasm to enforce the access control policies for sensors and actuators without trusting the bare-metal operating system. We evaluate our approach on a representative IoT development board: a Cortex-M4-based development board (nRF52840). Our results show that Aerogel can effectively enforce compute resource and peripheral access control policies while introducing as little as 0.19% to 1.04% runtime overhead and consuming only 18.8% to 45.9% extra energy.

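The multi-tenant idea, where each application is a tenant with its own policy over peripherals and resource budgets, can be sketched as follows. This is a hypothetical illustration only: the class names, fields, units, and the `request_peripheral` function are assumptions and do not reflect Aerogel's actual implementation or the Wasm runtime's API.

```python
from dataclasses import dataclass, field


@dataclass
class Policy:
    """Hypothetical per-tenant policy: allowed peripherals and budgets."""
    peripherals: set = field(default_factory=set)
    energy_budget_mj: float = 0.0
    memory_budget_bytes: int = 0


@dataclass
class Tenant:
    """One sandboxed application and its running resource usage."""
    name: str
    policy: Policy
    energy_used_mj: float = 0.0
    memory_used_bytes: int = 0


def request_peripheral(tenant, peripheral, energy_cost_mj):
    """Grant access only if the peripheral is allowed and the energy
    budget would not be exceeded; charge the cost when access is granted."""
    if peripheral not in tenant.policy.peripherals:
        return False
    if tenant.energy_used_mj + energy_cost_mj > tenant.policy.energy_budget_mj:
        return False
    tenant.energy_used_mj += energy_cost_mj
    return True


# Hypothetical tenant that may read the accelerometer within a 1.0 mJ budget.
app = Tenant("step_counter", Policy({"accelerometer"}, 1.0, 4096))
first = request_peripheral(app, "accelerometer", 0.6)   # allowed, in budget
denied = request_peripheral(app, "motor", 0.1)          # not in the policy
second = request_peripheral(app, "accelerometer", 0.6)  # would exceed budget
```

The design choice illustrated is that the check sits in the runtime, between the sandboxed application and the hardware, so a denied request never reaches the sensor or actuator.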

Proceedings of the 20th International Conference on Information Processing in Sensor Networks, Pages 386-387

privacy, computing methodologies, machine learning, neural networks

May 18, 2021

The increased availability of on-body sensors gives researchers access to rich time-series data, much of which is related to human health conditions. Sharing such data can allow cross-institutional collaborations that create advanced data-driven models to make inferences on human well-being. However, such data are usually considered privacy-sensitive, and publicly sharing them may raise significant privacy concerns. In this work, we seek to protect clinical time-series data against membership inference attacks, while maximally retaining the data utility. We achieve this by adding imperceptible noise to the raw data. Known as adversarial perturbations, the noise is specially trained to force a deep learning model to make inference mistakes (in our case, mispredicting user identities). Our preliminary results show that our solution can better protect the data from membership inference attacks than the baselines, while passing all of the designed data quality checks.

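The general idea of an adversarial perturbation, a small bounded change to the input that pushes a model toward a wrong prediction, can be sketched with a single gradient-sign step on a toy logistic-regression identity classifier. This is a stand-in technique chosen for brevity, not the trained perturbation described in the work; the weights, input window, and `eps` bound are all hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict_prob(x, w, b):
    """Probability that the sensor window x belongs to the target user."""
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def sign(v):
    return (v > 0) - (v < 0)


def perturb(x, y, w, b, eps):
    """One gradient-sign step that increases the classifier's loss on (x, y).

    For logistic regression with cross-entropy loss, dLoss/dx = (p - y) * w.
    Each input value moves by at most eps.
    """
    p = predict_prob(x, w, b)
    grad = [(p - y) * wi for wi in w]
    return [xi + eps * sign(g) for xi, g in zip(x, grad)]


# Hypothetical toy classifier and input window; y = 1 means "target user".
w = [1.0, -2.0, 0.5]
b = 0.0
x = [0.5, -0.5, 1.0]
y = 1
eps = 0.1

x_adv = perturb(x, y, w, b, eps)
p_before = predict_prob(x, w, b)
p_after = predict_prob(x_adv, w, b)
max_change = max(abs(a - c) for a, c in zip(x_adv, x))
```

After the step, the classifier's confidence that the window belongs to the target user is lower, while no sample has moved by more than `eps`. A real system would also need to verify that the perturbed signal still passes data quality checks.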

Brian Wang, Luis Garcia, Mani Srivastava

2024 IEEE Security and Privacy Workshops (SPW)

May 1, 2024

Large Language Models (LLM), Privacy, Contextual Integrity, Smart Environments

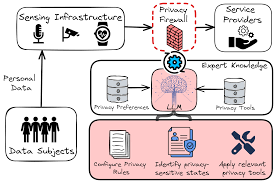

Modern smart buildings and environments rely on sensory infrastructure to capture and process information about their inhabitants. However, it remains challenging to ensure that this infrastructure complies with privacy norms, preferences, and regulations; individuals occupying smart environments are often busy with their tasks, lack awareness of the surrounding sensing mechanisms, and are not technical experts. This problem is only exacerbated by the increasing number of sensors being deployed in these environments, as well as services seeking to use their sensory data. As a result, individuals face an unmanageable number of privacy decisions, preventing them from effectively behaving as their own “privacy firewall” for filtering and managing the multitude of personal information flows. These decisions often require qualitative reasoning over privacy regulations and an understanding of privacy-sensitive contexts.

This paper introduces PrivacyOracle, a prototype system that leverages Large Language Models (LLMs) to automatically configure privacy firewalls in smart built environments. The system addresses the challenge of managing numerous privacy decisions for individuals in sensor-rich spaces by enabling automated decision-making regarding personal data flows. PrivacyOracle achieves this by performing qualitative reasoning over privacy regulations and social norms, identifying privacy-sensitive states from sensor data, and selecting appropriate data transformation tools. Evaluations show high accuracy in identifying sensitive states (up to 98%) and moderate agreement (75%) with social acceptability norms for information flows.

Ziqi Wang, Mani Srivastava, Akash Deep Singh, Luis Garcia, Zhe Chen, Jun Luo

United States Patent Application 20230288549

September 14, 2023

Ultrawideband Audio Sensing, Impulse Radio Ultra-Wideband (IR-UWB), wireless vibrometry, sound source separation, Time-of-Flight (ToF), RF sensing, through-wall detection, static clutter suppression, vibrating activity localization, audio recovery

Systems and methods for simultaneously recovering and separating sounds from multiple sources using Impulse Radio Ultra-Wideband (IR-UWB) signals are described. In one embodiment, a device can be configured for generating an audio signal based on audio source ranging using ultrawideband signals. In an embodiment, the device includes transmitter circuitry, receiver circuitry, memory, and a processor. The processor is configured to generate a radio signal that includes an ultra-wideband Gaussian pulse modulated on a radio-frequency carrier. The processor is further configured to transmit the radio signal using the transmitter circuitry, receive one or more backscattered signals at the receiver circuitry, demodulate the one or more backscattered signals to generate one or more baseband signals, and generate a set of data frames based on the one or more baseband signals.

This publication introduces systems and methods for Ultrawideband Audio Sensing that leverage Impulse Radio Ultra-Wideband (IR-UWB) signals to simultaneously recover and separate sounds from multiple sources. Unlike traditional microphones that blend sounds and struggle with background noise, this wireless vibrometry approach senses sound directly from source vibrations using RF signals. Key advantages include the ability to penetrate building materials for non-line-of-sight (NLOS) operation, immunity to non-target noise, and fine-grained sound source separation based on Time-of-Flight (ToF) ranging, even for sources as close as 25 cm apart. The system employs a signal processing pipeline involving phase noise correction, static clutter suppression, vibrating activity localization, and denoising to achieve robust audio recovery.
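As a rough illustration of what ToF-based separation implies, the sketch below converts between round-trip delay and range for a backscattered pulse. It assumes the two sources are 25 cm apart along the range axis (directly in line with the radio), which the description does not state; the function names are hypothetical.

```python
SPEED_OF_LIGHT = 299_792_458.0  # metres per second


def range_from_round_trip(tof_s):
    """Distance to a reflector given the round-trip time of flight.

    The pulse travels to the source and back, hence the division by 2.
    """
    return SPEED_OF_LIGHT * tof_s / 2.0


def round_trip_delay(distance_m):
    """Round-trip time of flight for a reflector at the given distance."""
    return 2.0 * distance_m / SPEED_OF_LIGHT


# Extra round-trip delay between two sources 0.25 m apart in range.
delay_gap = round_trip_delay(0.25)
```

Under this assumption, a 25 cm separation corresponds to a round-trip delay difference of roughly 1.67 nanoseconds, which gives a sense of the timing resolution such a system works with.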

TinyOdom: Hardware-Aware Efficient Neural Inertial Navigation

https://github.com/nesl/tinyodom

9 forks.

54 stars.

0 open issues.


ACM on Interactive, Mobile, Wearable, and Ubiquitous Technologies

July 7, 2022

C++

C

Jupyter Notebook

Python

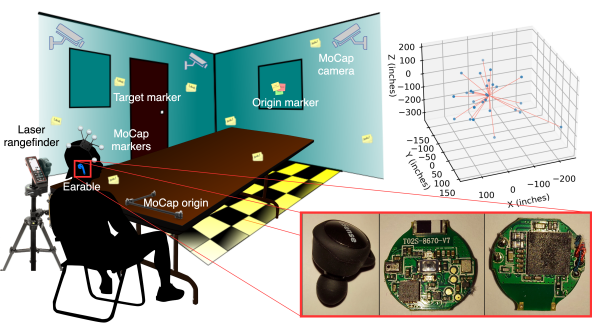

Auritus: An Open-Source Optimization Toolkit for Training and Development of Human Movement Models and Filters Using Earables

https://github.com/nesl/auritus

0 forks.

22 stars.

0 open issues.


ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

July 7, 2022

C++

C

Python

MATLAB

Jupyter Notebook

https://github.com/ARM-software/mango/tree/main/examples/THIN-Bayes

tinyML Summit

March 2022

Python

A hierarchical generative model for biological signals (PPG,ECG etc.) that keeps the physiological characteristics intact.

https://github.com/SenSE-Lab-OSU/cardiac_gen_model

0 forks.

4 stars.

0 open issues.


Machine Learning for Health (ML4H)

November 15, 2022

Jupyter Notebook

Python

Shell

TinyNS: Platform-Aware Neurosymbolic Auto Tiny Machine Learning

https://github.com/nesl/neurosymbolic-tinyml

0 forks.

23 stars.

0 open issues.


ACM Transactions on Embedded Computing Systems (2023)

May 31, 2023

C

C++

Assembly

Python

CMake

Makefile

https://github.com/burakcivek/BayesianSparseBlindDeconvolution

0 forks.

2 stars.

0 open issues.


IEEE Transactions on Signal Processing

March 3, 2022

MATLAB

https://github.com/SenSE-Lab-OSU/MotionSenseHRV_v3

1 fork.

3 stars.

1 open issue.


Sensors (Basel)

February 18, 2021

Jupyter Notebook

Python

Shell

International Conference on Learning Representations (ICLR)

November 13, 2021

Jupyter Notebook

The Auritus dataset has 2.43 million inertial samples related to head and full-body movements, consisting of 34 head poses and 9 activities from 45 volunteers. There are three main folders in the dataset, namely Activity Detection, Simple Head Pose and Complex Head Pose. It also contains physiological information about participants in the file Participant_Info.csv, target information for simple and complex head pose in the file HP_target_info.csv, and the IRB approval in the file IRB_Approval.pdf. Simple head pose corresponds to head movements from an origin marker to a target marker and back. Complex head pose corresponds to head movements from an origin marker to target marker A, target marker A to target marker B, and target marker B to origin.
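A downloaded copy of the dataset can be checked against the layout described above with a short script. This is a minimal sketch: it assumes the three folders and three files sit directly under one root directory with exactly the names given in the description, which may differ from the on-disk names in the actual release. The `missing_entries` helper is hypothetical.

```python
import tempfile
from pathlib import Path

# Names taken from the dataset description; on-disk spelling is an assumption.
EXPECTED_FOLDERS = ["Activity Detection", "Simple Head Pose", "Complex Head Pose"]
EXPECTED_FILES = ["Participant_Info.csv", "HP_target_info.csv", "IRB_Approval.pdf"]


def missing_entries(root):
    """Return the expected folders and files that are absent under root."""
    root = Path(root)
    missing = [name for name in EXPECTED_FOLDERS if not (root / name).is_dir()]
    missing += [name for name in EXPECTED_FILES if not (root / name).is_file()]
    return missing


# Demo on a throwaway directory that is deliberately incomplete.
with tempfile.TemporaryDirectory() as tmp:
    demo_root = Path(tmp)
    for folder in EXPECTED_FOLDERS[:2]:
        (demo_root / folder).mkdir()
    (demo_root / "Participant_Info.csv").touch()
    demo_missing = missing_entries(demo_root)
```

In the demo, the function reports the one folder and two files that were not created. Pointing `missing_entries` at a real download gives a quick sanity check before any loading code runs.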

ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

July 7, 2022

Senior ML Researcher

Sr. Hardware Engineer

Algorithm Dev. Engineer

Applied Scientist

TR&D3 Lead

Lead PI, Center Director, TR&D1, TR&D2, TR&D3

Co-I, TR&D3

TR&D3 will make it possible to realize temporally precise mHealth interventions, for maintaining health and managing the growing burden of chronic diseases, on participants’ personal devices with minimal impact on battery life, so that they do not interfere with routine use of these devices.