The mDOT Center

Transforming health and wellness via temporally-precise mHealth interventions

mDOT@MD2K.org

901.678.1526

Collaborating Investigator:

Dr. Vivek Shetty; University of California, Los Angeles

Funding Status:

NIH/NIDCR/HHS

4/1/19 – 3/31/27

Associated with:

Nassal, M., Sugavanam, N., Aramendi, E., Jaureguibeitia, X., Elola, A., Panchal, A., Ulintz, A., Wang, H., Ertin, E.

Proceedings of the 57th Annual Hawaii International Conference on System Sciences, HICSS 2024

January 3, 2024

Artificial Intelligence (AI), cardiac arrest, resuscitation, end tidal capnography, reinforcement learning

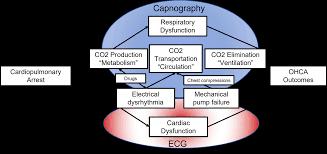

Artificial Intelligence (AI) and machine learning have advanced healthcare by defining relationships in complex conditions. Out-of-hospital cardiac arrest (OHCA) is a medically complex condition with several etiologies. Survival for OHCA has remained static at 10% for decades in the United States. Treatment of OHCA requires the coordination of numerous interventions, including the delivery of multiple medications. Current resuscitation algorithms follow a single strict pathway, regardless of fluctuating cardiac physiology. OHCA resuscitation requires a real-time biomarker that can guide interventions to improve outcomes. End tidal capnography (ETCO2) is commonly implemented by emergency medical services professionals in resuscitation and can serve as an ideal biomarker for resuscitation. However, there are no effective conceptual frameworks utilizing the continuous ETCO2 data. In this manuscript, we detail a conceptual framework using AI and machine learning techniques to leverage ETCO2 in guided resuscitation.

This publication proposes a conceptual framework for utilizing Artificial Intelligence (AI) and machine learning to create End Tidal Capnography (ETCO2) guided resuscitation for Out-of-Hospital Cardiac Arrest (OHCA). The aim is to move beyond rigid, fixed-interval resuscitation algorithms by leveraging continuous ETCO2 data as a real-time biomarker, alongside other physiological measurements, to develop personalized, dynamic interventions that are responsive to a patient’s evolving cardiac physiology. This approach seeks to improve the currently static survival rates for OHCA by enabling a deeper analysis of ETCO2 trends in relation to patient characteristics and interventions, potentially revealing “hidden” patterns and allowing for reward-based algorithms to guide optimal treatment strategies.

Conference on Innovative Applications of Artificial Intelligence (IAAI 2023)

February 7, 2023

reinforcement learning, online learning, mobile health, algorithm design, algorithm evaluation

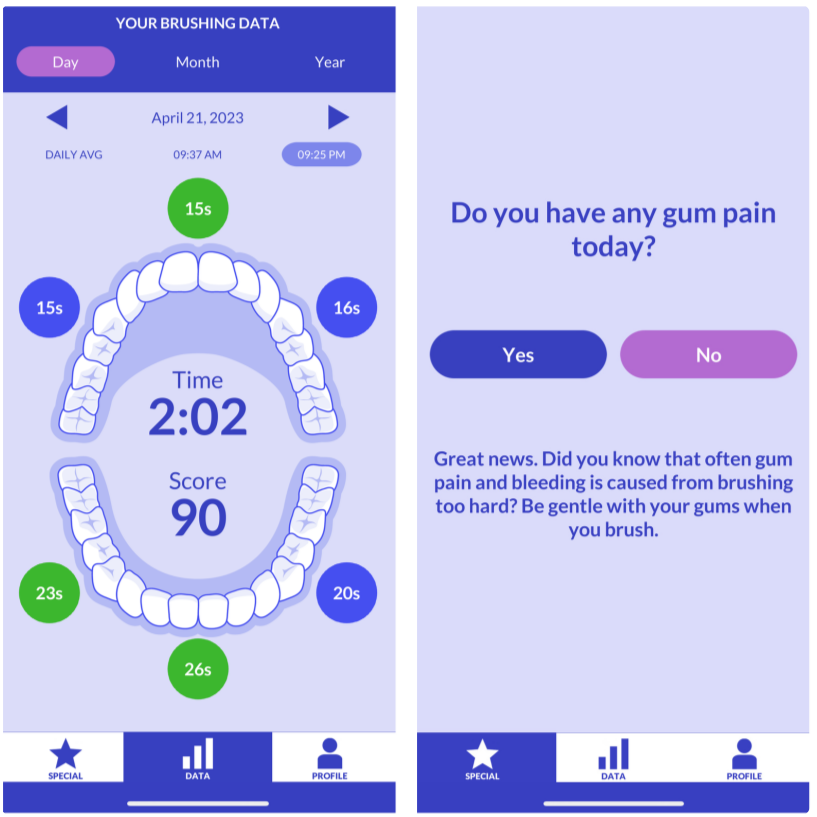

Dental disease is one of the most common chronic diseases despite being largely preventable. However, professional advice on optimal oral hygiene practices is often forgotten or abandoned by patients. Therefore, patients may benefit from timely and personalized encouragement to engage in oral self-care behaviors. In this paper, we develop an online reinforcement learning (RL) algorithm for use in optimizing the delivery of mobile-based prompts to encourage oral hygiene behaviors. One of the main challenges in developing such an algorithm is ensuring that the algorithm considers the impact of the current action on the effectiveness of future actions (i.e., delayed effects), especially when the algorithm has been made simple in order to run stably and autonomously in a constrained, real-world setting (i.e., highly noisy, sparse data). We address this challenge by designing a quality reward which maximizes the desired health outcome (i.e., high-quality brushing) while minimizing user burden. We also highlight a procedure for optimizing the hyperparameters of the reward by building a simulation environment test bed and evaluating candidates using the test bed. The RL algorithm discussed in this paper will be deployed in Oralytics, an oral self-care app that provides behavioral strategies to boost patient engagement in oral hygiene practices.

In this paper, we develop an online reinforcement learning (RL) algorithm for use in optimizing the delivery of mobile-based prompts to encourage oral hygiene behaviors.
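The idea of a reward that trades brushing quality against user burden can be illustrated with a minimal sketch. Everything below is an assumption made for illustration: the `RewardConfig` fields, their default values, and the exponentially weighted "dose" used as a burden proxy are hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass


@dataclass
class RewardConfig:
    """Hypothetical hyperparameters; none of these values come from the paper."""
    quality_cap_s: float = 180.0   # cap on brushing quality, in seconds
    burden_weight: float = 60.0    # cost of prompting an over-prompted user
    dose_threshold: float = 0.5    # recent prompt rate above which the cost applies
    dose_decay: float = 0.8        # discount applied to older prompts


def update_dose(prev_dose: float, prompted: bool, cfg: RewardConfig) -> float:
    """Exponentially weighted average of recent prompts (a proxy for burden)."""
    return cfg.dose_decay * prev_dose + (1.0 - cfg.dose_decay) * float(prompted)


def reward(brushing_quality_s: float, prompted: bool, dose: float,
           cfg: RewardConfig) -> float:
    """Capped brushing quality minus a cost for prompting when dose is high."""
    quality = min(max(brushing_quality_s, 0.0), cfg.quality_cap_s)
    over_prompted = dose > cfg.dose_threshold
    cost = cfg.burden_weight if (prompted and over_prompted) else 0.0
    return quality - cost
```

In a simulation test bed of the kind the abstract mentions, candidate values for fields such as `burden_weight` and `dose_threshold` would be compared by running the algorithm against simulated users and keeping the setting with the best simulated outcome.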

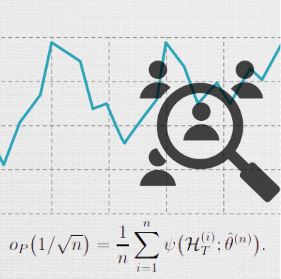

April 19, 2023

Online reinforcement learning and other adaptive sampling algorithms are increasingly used in digital intervention experiments to optimize treatment delivery for users over time. In this work, we focus on longitudinal user data collected by a large class of adaptive sampling algorithms that are designed to optimize treatment decisions online using accruing data from multiple users. Combining or “pooling” data across users allows adaptive sampling algorithms to potentially learn faster. However, by pooling, these algorithms induce dependence between the sampled user data trajectories; we show that this can cause standard variance estimators for i.i.d. data to underestimate the true variance of common estimators on this data type. We develop novel methods to perform a variety of statistical analyses on such adaptively sampled data via Z-estimation. Specifically, we introduce the adaptive sandwich variance estimator, a corrected sandwich estimator that leads to consistent variance estimates under adaptive sampling. Additionally, to prove our results we develop novel theoretical tools for empirical processes on non-i.i.d., adaptively sampled longitudinal data which may be of independent interest. This work is motivated by our efforts in designing experiments in which online reinforcement learning algorithms optimize treatment decisions, yet statistical inference is essential for conducting analyses after experiments conclude.
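For orientation, the sketch below computes the standard sandwich variance estimator for a simple Z-estimator: the slope of a no-intercept regression, defined by the estimating equation sum of x(y - θx) = 0. This is the i.i.d. version that the abstract says can underestimate the true variance under pooling; the adaptive correction introduced in the paper is not reproduced here. The function name and the choice of model are assumptions made for illustration.

```python
def sandwich_variance_slope(xs, ys):
    """Slope of y = theta * x and its standard (i.i.d.) sandwich variance.

    Estimating function: psi(x, y; theta) = x * (y - theta * x).
    Variance estimate: meat / (n * bread**2), where
      bread = mean(x**2)              (negative derivative of psi in theta)
      meat  = mean(psi**2)            (evaluated at the estimated theta)
    """
    n = len(xs)
    sxx = sum(x * x for x in xs)
    if n == 0 or sxx == 0:
        raise ValueError("need at least one observation with non-zero x")
    theta = sum(x * y for x, y in zip(xs, ys)) / sxx
    bread = sxx / n
    meat = sum((x * (y - theta * x)) ** 2 for x, y in zip(xs, ys)) / n
    return theta, meat / (n * bread ** 2)
```

The formula treats each observation as an independent draw. When an algorithm pools data across users to choose treatments, the trajectories become dependent, which is the situation the adaptive sandwich estimator is designed to handle.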

arXiv:2307.13916

October 31, 2023

contextual bandits, predicted context, online learning, machine learning

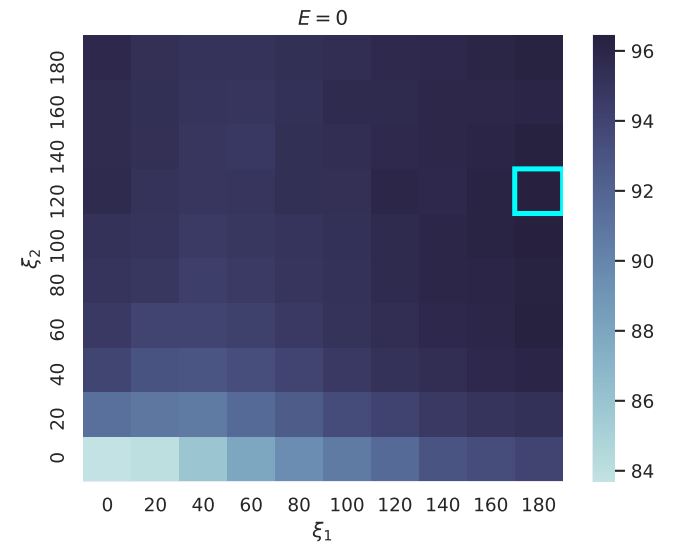

We consider the contextual bandit problem where at each time, the agent only has access to a noisy version of the context and the error variance (or an estimator of this variance). This setting is motivated by a wide range of applications where the true context for decision-making is unobserved, and only a prediction of the context by a potentially complex machine learning algorithm is available. When the context error is non-vanishing, classical bandit algorithms fail to achieve sublinear regret. We propose the first online algorithm in this setting with sublinear regret guarantees under mild conditions. The key idea is to extend the measurement error model in classical statistics to the online decision-making setting, which is nontrivial due to the policy being dependent on the noisy context observations. We further demonstrate the benefits of the proposed approach in simulation environments based on synthetic and real digital intervention datasets.

We propose the first online algorithm in this setting with sublinear regret guarantees under mild conditions.

arXiv:2305.18511

May 29, 2023

machine learning, optimization and control, contextual bandits, information reveal

Contextual bandit algorithms are commonly used in digital health to recommend personalized treatments. However, to ensure the effectiveness of the treatments, patients are often requested to take actions that have no immediate benefit to them, which we refer to as pro-treatment actions. In practice, clinicians have a limited budget to encourage patients to take these actions and collect additional information. We introduce a novel optimization and learning algorithm to address this problem. This algorithm effectively combines the strengths of two algorithmic approaches in a seamless manner, including 1) an online primal-dual algorithm for deciding the optimal timing to reach out to patients, and 2) a contextual bandit learning algorithm to deliver personalized treatment to the patient. We prove that this algorithm admits a sub-linear regret bound. We illustrate the usefulness of this algorithm on both synthetic and real-world data.

We present an innovative optimization and learning algorithm to tackle the challenge clinicians face with constrained budgets, aiming to incentivize patients to take actions and gather additional information.
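The budgeted-outreach component can be pictured with a generic online primal-dual pacing rule. The sketch below is not the paper's algorithm: it is a textbook-style dual update in which a "price" on the budget rises when outreach happens faster than the target rate and falls otherwise. The function name, the `benefits` input (assumed to be estimated gains from reaching out at each step), and the step size are all hypothetical.

```python
def primal_dual_outreach(benefits, budget, step_size=0.1):
    """Decide at each step whether to spend one unit of outreach budget.

    benefits:  estimated benefit of reaching out at each time step (assumed given)
    budget:    total number of outreach actions allowed
    Returns a list of booleans, one decision per step.
    """
    horizon = len(benefits)
    if horizon == 0:
        return []
    target_rate = budget / horizon   # spending rate that exactly uses the budget
    price = 0.0                      # dual variable: current cost of one outreach
    remaining = budget
    decisions = []
    for benefit in benefits:
        # Primal step: act only if the benefit beats the current price.
        act = remaining >= 1 and benefit > price
        if act:
            remaining -= 1
        decisions.append(act)
        # Dual step: raise the price after spending, lower it after waiting.
        price = max(0.0, price + step_size * (float(act) - target_rate))
    return decisions
```

In a combined scheme of the kind the abstract describes, the benefit estimates would themselves come from the contextual bandit learner rather than being supplied up front.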

JMIR Formative Research

December 11, 2023

engagement, oral health, mobile health intervention, racial and ethnic minority group, message development

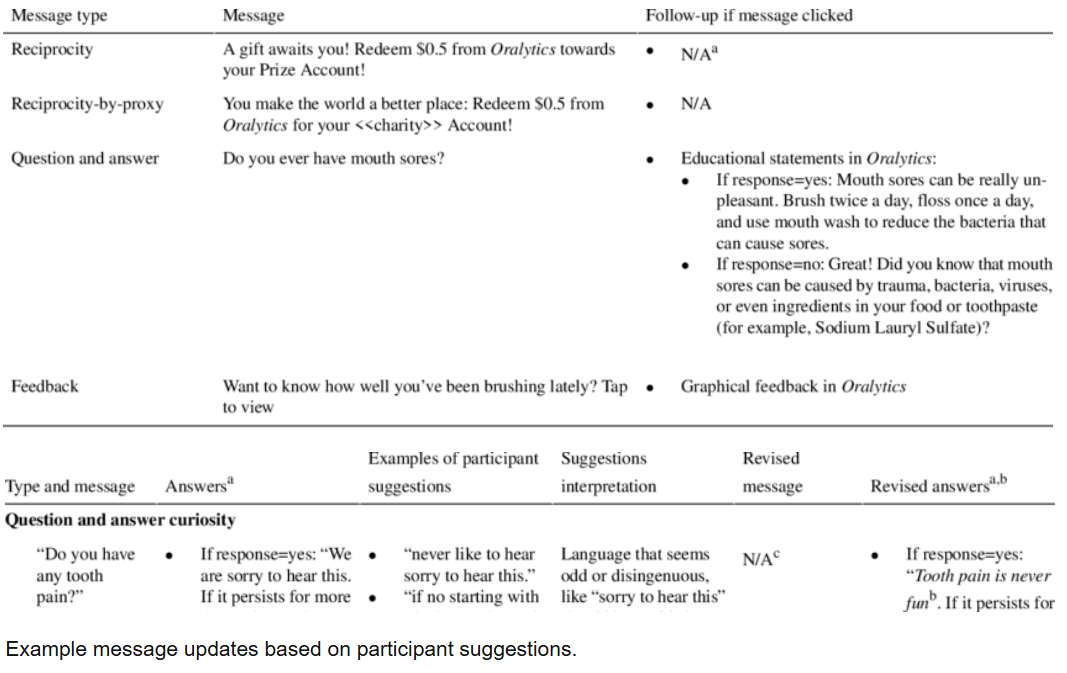

This publication describes research focused on creating effective digital messages to promote oral self-care, particularly daily toothbrushing, among diverse racial and ethnic minority groups. The goal was to develop engaging and appealing content for a smartphone application that would encourage better oral health habits. The development process involved collaborative design with dental experts and members of the target population, using web-based sessions to gather feedback while prioritizing participant anonymity and confidentiality. The findings indicate a preference for clear, enthusiastic, and relatable messages, suggesting valuable insights for future digital health interventions aimed at improving public health behaviors in vulnerable communities.

This publication details formative research aimed at developing and refining digital oral health messages for a smartphone app called Oralytics, targeting diverse racial and ethnic minority populations to promote daily toothbrushing. The study utilized theoretically grounded strategies such as reciprocity, reciprocity-by-proxy, and curiosity to enhance engagement with oral self-care behaviors, the app, and the messages themselves. Messages were developed using a web-based participatory co-design approach involving dental experts, Amazon Mechanical Turk workers, and dental patients. This approach notably focused on mitigating anonymity and confidentiality concerns during participant feedback sessions, which were conducted via facilitator-mediated Zoom webinars. Participants rated the messages highly, with qualitative feedback emphasizing a preference for messages that were straightforward, enthusiastic, conversational, relatable, and authentic. The research provides insights into designing engaging digital health interventions for underserved populations, stressing the importance of identifying key stimuli, gathering multiple perspectives, and employing innovative, secure data collection methods.

arXiv:2402.03110

February 3, 2024

contextual bandits, bandit algorithms, non-stationarity

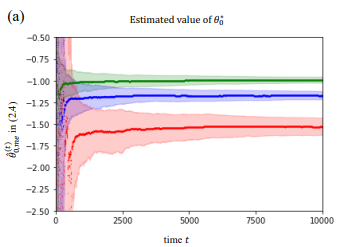

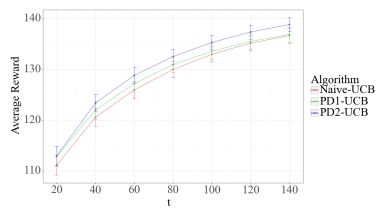

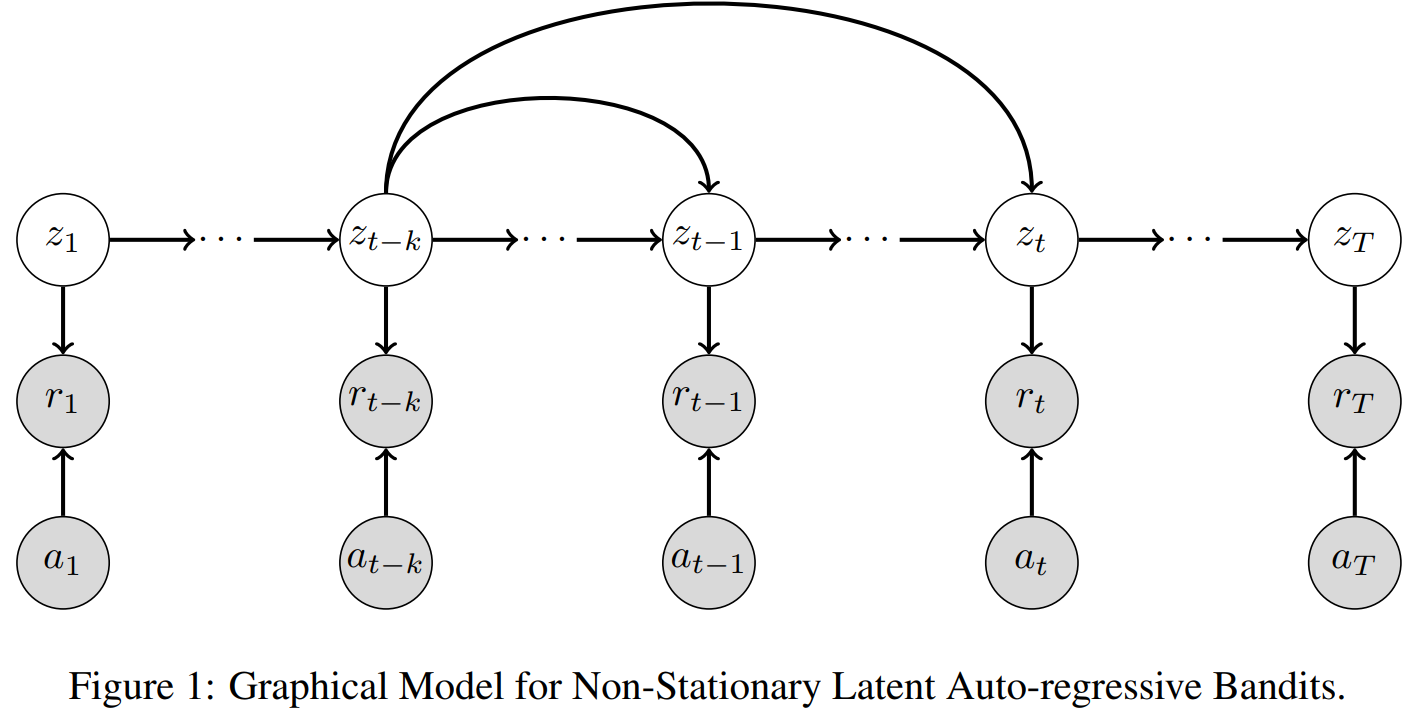

For the non-stationary multi-armed bandit (MAB) problem, many existing methods allow a general mechanism for the non-stationarity, but rely on a budget for the non-stationarity that is sub-linear in the total number of time steps T. In many real-world settings, however, the mechanism for the non-stationarity can be modeled, but there is no budget for the non-stationarity. We instead consider the non-stationary bandit problem where the reward means change due to a latent, auto-regressive (AR) state. We develop Latent AR LinUCB (LARL), an online linear contextual bandit algorithm that does not rely on the non-stationary budget, but instead forms good predictions of reward means by implicitly predicting the latent state. The key idea is to reduce the problem to a linear dynamical system which can be solved as a linear contextual bandit. In fact, LARL approximates a steady-state Kalman filter and efficiently learns system parameters online. We provide an interpretable regret bound for LARL with respect to the level of non-stationarity in the environment. LARL achieves sub-linear regret in this setting if the noise variance of the latent state process is sufficiently small with respect to T. Empirically, LARL outperforms various baseline methods in this non-stationary bandit problem.

This paper introduces Latent AR LinUCB (LARL), an efficient online algorithm designed for non-stationary multi-armed bandit (MAB) problems. Unlike many existing methods that rely on a budget for non-stationarity, LARL addresses settings where reward means change due to an unbudgeted, latent, auto-regressive (AR) state. It effectively forms good predictions of reward means by implicitly predicting the latent state. This is achieved by reducing the problem to a linear dynamical system solvable as a linear contextual bandit, which approximates a steady-state Kalman filter and learns parameters online. LARL achieves sub-linear regret under specific conditions related to the latent state noise variance and outperforms various stationary and non-stationary baseline methods in simulations.
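Since LARL is described as reducing the problem to a linear contextual bandit, a generic LinUCB arm is a useful reference point. The sketch below is plain disjoint LinUCB written with the standard library only; it is not LARL itself. The class and function names are hypothetical, and how the context vector is built (for example, from recent reward history) is left as an assumption for the caller.

```python
import math


class LinUCBArm:
    """One arm of disjoint LinUCB, keeping the inverse design matrix directly."""

    def __init__(self, dim, alpha=1.0, ridge=1.0):
        self.alpha = alpha  # width of the exploration bonus
        # Inverse of (ridge * I), updated in place by Sherman-Morrison.
        self.a_inv = [[(1.0 / ridge if i == j else 0.0) for j in range(dim)]
                      for i in range(dim)]
        self.b = [0.0] * dim  # running sum of reward * context

    def _a_inv_times(self, vec):
        return [sum(row[j] * vec[j] for j in range(len(vec))) for row in self.a_inv]

    def ucb(self, x):
        """Estimated mean reward plus an exploration bonus for context x."""
        theta = self._a_inv_times(self.b)
        v = self._a_inv_times(x)
        mean = sum(t * xi for t, xi in zip(theta, x))
        width = math.sqrt(max(sum(vi * xi for vi, xi in zip(v, x)), 0.0))
        return mean + self.alpha * width

    def update(self, x, reward):
        """Rank-one update of the inverse design matrix and the reward sum."""
        v = self._a_inv_times(x)
        denom = 1.0 + sum(vi * xi for vi, xi in zip(v, x))
        for i in range(len(x)):
            for j in range(len(x)):
                self.a_inv[i][j] -= v[i] * v[j] / denom
        for i in range(len(x)):
            self.b[i] += reward * x[i]


def choose_arm(arms, x):
    """Index of the arm with the largest upper confidence bound."""
    return max(range(len(arms)), key=lambda k: arms[k].ucb(x))
```

One way to picture the reduction, under the assumption that past rewards carry information about the latent AR state, is to pass a context made of a constant term and the last few observed rewards, so that the linear model can track the drifting reward means.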

arXiv:2409.02069

September 3, 2024

Reinforcement Learning, mHealth, Clinical Trials, Oral Health, Oral Self-Care Behaviors, Thompson Sampling, Contextual Bandits, Deployment, Replicability, Adaptive Interventions

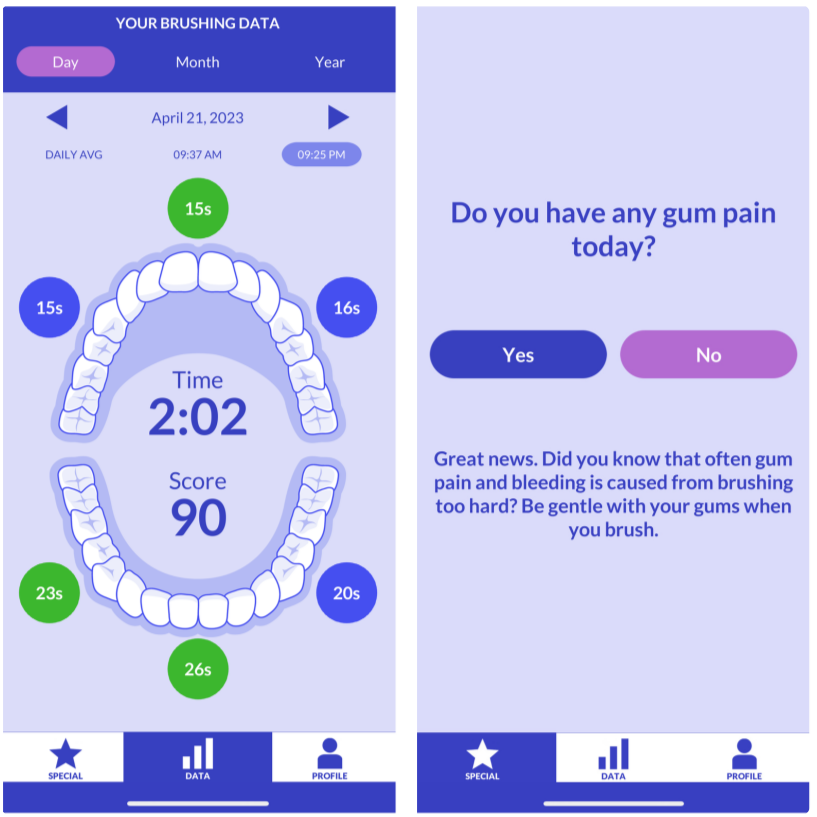

Dental disease is a prevalent chronic condition associated with substantial financial burden, personal suffering, and increased risk of systemic diseases. Despite widespread recommendations for twice-daily tooth brushing, adherence to recommended oral self-care behaviors remains sub-optimal due to factors such as forgetfulness and disengagement. To address this, we developed Oralytics, an mHealth intervention system designed to complement clinician-delivered preventative care for marginalized individuals at risk for dental disease. Oralytics incorporates an online reinforcement learning algorithm to determine optimal times to deliver intervention prompts that encourage oral self-care behaviors. We have deployed Oralytics in a registered clinical trial. The deployment required careful design to manage challenges specific to the clinical trials setting in the U.S. In this paper, we (1) highlight key design decisions of the RL algorithm that address these challenges and (2) conduct a re-sampling analysis to evaluate algorithm design decisions. A second phase (randomized controlled trial) of Oralytics is planned to start in spring 2025.

This paper introduces Oralytics, an mHealth intervention system that leverages an online reinforcement learning (RL) algorithm to optimize the delivery of engagement prompts aimed at improving oral self-care behaviors (OSCB) in individuals at risk for dental disease. The authors detail the key design decisions for deploying this RL algorithm within a registered clinical trial setting, addressing challenges such as ensuring autonomy and replicability through methods like pre-scheduling actions and implementing fallback procedures, and dealing with limited per-individual data by using a full-pooling approach. Through re-sampling analysis, the study provides evidence that the algorithm successfully learned to identify states where sending prompts was effective or ineffective.
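Thompson sampling with full pooling can be sketched in a few lines. The version below is a deliberate simplification and not the Oralytics algorithm: it assumes a binary outcome (for example, whether a brushing session met a quality bar), a small discrete set of states, and a Beta-Bernoulli posterior shared by all users. The class name and state labels are hypothetical.

```python
import random
from collections import defaultdict


class PooledThompsonSampler:
    """Beta-Bernoulli Thompson sampling with one posterior shared by all users."""

    def __init__(self, seed=0):
        self.rng = random.Random(seed)  # seeded so that runs can be replicated
        # (state, action) -> [alpha, beta], starting from a Beta(1, 1) prior.
        self.counts = defaultdict(lambda: [1.0, 1.0])

    def choose(self, state):
        """Sample a success rate for each action; return 1 (prompt) or 0 (no prompt)."""
        draws = {a: self.rng.betavariate(*self.counts[(state, a)]) for a in (0, 1)}
        return max(draws, key=draws.get)

    def update(self, state, action, success):
        """Fold one observed outcome, from any user, into the shared posterior."""
        self.counts[(state, action)][0 if success else 1] += 1.0
```

Because `update` ignores which user produced the outcome, every individual's data sharpens the same posterior. That is the sense of full pooling used here, and it is one way to cope with limited per-individual data.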

JMIR Formative Research

December 11, 2023

engagement, oral health, mobile health intervention, racial and ethnic minority group, message development

Background: The prevention of oral health diseases is a key public health issue and a major challenge for racial and ethnic minority groups, who often face barriers in accessing dental care. Daily toothbrushing is an important self-care behavior necessary for sustaining good oral health, yet engagement in regular brushing remains a challenge. Identifying strategies to promote engagement in regular oral self-care behaviors among populations at risk of poor oral health is critical.

Objective: The formative research described here focused on creating messages for a digital oral self-care intervention targeting a racially and ethnically diverse population. Theoretically grounded strategies (reciprocity, reciprocity-by-proxy, and curiosity) were used to promote engagement in 3 aspects: oral self-care behaviors, an oral care smartphone app, and digital messages. A web-based participatory co-design approach was used to develop messages that are resource efficient, appealing, and novel; this approach involved dental experts, individuals from the general population, and individuals from the target population—dental patients from predominantly low-income racial and ethnic minority groups. Given that many individuals from racially and ethnically diverse populations face anonymity and confidentiality concerns when participating in research, we used an approach to message development that aimed to mitigate these concerns.

Methods: Messages were initially developed with feedback from dental experts and Amazon Mechanical Turk workers. Dental patients were then recruited for 2 facilitator-mediated group webinar sessions held over Zoom (Zoom Video Communications; session 1: n=13; session 2: n=7), in which they provided both quantitative ratings and qualitative feedback on the messages. Participants interacted with the facilitator through Zoom polls and a chat window that was anonymous to other participants. Participants did not directly interact with each other, and the facilitator mediated sessions by verbally asking for message feedback and sharing key suggestions with the group for additional feedback. This approach plausibly enhanced participant anonymity and confidentiality during the sessions.

Results: Participants rated messages highly in terms of liking (overall rating: mean 2.63, SD 0.58; reciprocity: mean 2.65, SD 0.52; reciprocity-by-proxy: mean 2.58, SD 0.53; curiosity involving interactive oral health questions and answers: mean 2.45, SD 0.69; curiosity involving tailored brushing feedback: mean 2.77, SD 0.48) on a scale ranging from 1 (do not like it) to 3 (like it). Qualitative feedback indicated that the participants preferred messages that were straightforward, enthusiastic, conversational, relatable, and authentic.

Conclusions: This formative research has the potential to guide the design of messages for future digital health behavioral interventions targeting individuals from diverse racial and ethnic populations. Insights emphasize the importance of identifying key stimuli and tasks that require engagement, gathering multiple perspectives during message development, and using new approaches for collecting both quantitative and qualitative data while mitigating anonymity and confidentiality concerns.

The formative research described here focused on creating messages for a digital oral self-care intervention targeting a racially and ethnically diverse population. Theoretically grounded strategies (reciprocity, reciprocity-by-proxy, and curiosity) were used to promote engagement in 3 aspects: oral self-care behaviors, an oral care smartphone app, and digital messages.

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

mHealth, brushing detection, flossing detection, hand-to-mouth gestures

June 2021

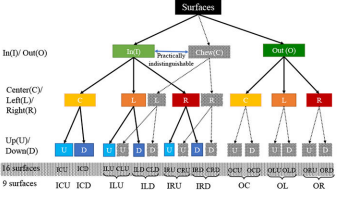

Ensuring that all the teeth surfaces are adequately covered during daily brushing can reduce the risk of several oral diseases. In this paper, we propose the mTeeth model to detect teeth surfaces being brushed with a manual toothbrush in the natural free-living environment using wrist-worn inertial sensors. To unambiguously label sensor data corresponding to different surfaces and capture all transitions that last only milliseconds, we present a lightweight method to detect the micro-event of brushing strokes that cleanly demarcates transitions among brushing surfaces. Using features extracted from brushing strokes, we propose a Bayesian Ensemble method that leverages the natural hierarchy among teeth surfaces and patterns of transition among them. For training and testing, we enrich a publicly-available wrist-worn inertial sensor dataset collected from the natural environment with time-synchronized precise labels of brushing surface timings and moments of transition. We annotate 10,230 instances of brushing on different surfaces from 114 episodes and evaluate the impact of wide between-person and within-person between-episode variability on the machine learning model’s performance for brushing surface detection.

In this paper, we propose the mTeeth model to detect teeth surfaces being brushed with a manual toothbrush in the natural free-living environment using wrist-worn inertial sensors.
