The mDOT Center

Transforming health and wellness via temporally-precise mHealth interventions

mDOT@MD2K.org

901.678.1526

Collaborating Investigator:

Dr. Inbal Nahum-Shani, University of Michigan

Funding Status:

NIH/NIDA

9/1/21 – 6/30/26

Associated with:

Nassal, M., Sugavanam, N., Aramendi, E., Jaureguibeitia, X., Elola, A., Panchal, A., Ulintz, A., Wang, H., Ertin, E.

Proceedings of the 57th Annual Hawaii International Conference on System Sciences, HICSS 2024

January 3, 2024

Artificial Intelligence (AI), cardiac arrest, resuscitation, end tidal capnography, reinforcement learning

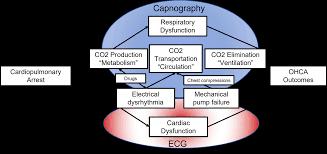

Artificial Intelligence (AI) and machine learning have advanced healthcare by defining relationships in complex conditions. Out-of-hospital cardiac arrest (OHCA) is a medically complex condition with several etiologies. Survival for OHCA has remained static at 10% for decades in the United States. Treatment of OHCA requires the coordination of numerous interventions, including the delivery of multiple medications. Current resuscitation algorithms follow a single strict pathway, regardless of fluctuating cardiac physiology. OHCA resuscitation requires a real-time biomarker that can guide interventions to improve outcomes. End tidal capnography (ETCO2) is commonly implemented by emergency medical services professionals in resuscitation and can serve as an ideal biomarker for resuscitation. However, there are no effective conceptual frameworks utilizing the continuous ETCO2 data. In this manuscript, we detail a conceptual framework using AI and machine learning techniques to leverage ETCO2 in guided resuscitation.

This publication proposes a conceptual framework for utilizing Artificial Intelligence (AI) and machine learning to create End Tidal Capnography (ETCO2) guided resuscitation for Out-of-Hospital Cardiac Arrest (OHCA). The aim is to move beyond rigid, fixed-interval resuscitation algorithms by leveraging continuous ETCO2 data as a real-time biomarker, alongside other physiological measurements, to develop personalized, dynamic interventions that are responsive to a patient’s evolving cardiac physiology. This approach seeks to improve the currently static survival rates for OHCA by enabling a deeper analysis of ETCO2 trends in relation to patient characteristics and interventions, potentially revealing “hidden” patterns and allowing for reward-based algorithms to guide optimal treatment strategies.

Conference on Innovative Applications of Artificial Intelligence (IAAI 2023)

February 7, 2023

reinforcement learning, online learning, mobile health, algorithm design, algorithm evaluation

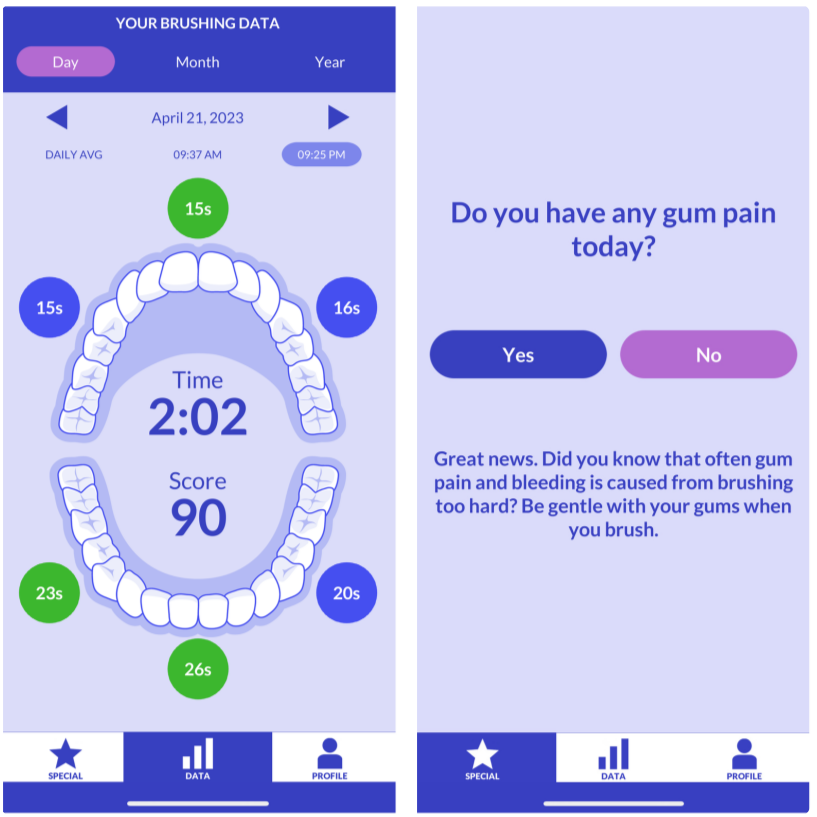

Dental disease is one of the most common chronic diseases despite being largely preventable. However, professional advice on optimal oral hygiene practices is often forgotten or abandoned by patients. Therefore, patients may benefit from timely and personalized encouragement to engage in oral self-care behaviors. In this paper, we develop an online reinforcement learning (RL) algorithm for use in optimizing the delivery of mobile-based prompts to encourage oral hygiene behaviors. One of the main challenges in developing such an algorithm is ensuring that the algorithm considers the impact of the current action on the effectiveness of future actions (i.e., delayed effects), especially when the algorithm has been made simple in order to run stably and autonomously in a constrained, real-world setting (i.e., highly noisy, sparse data). We address this challenge by designing a quality reward that maximizes the desired health outcome (i.e., high-quality brushing) while minimizing user burden. We also highlight a procedure for optimizing the hyperparameters of the reward by building a simulation environment test bed and evaluating candidates using the test bed. The RL algorithm discussed in this paper will be deployed in Oralytics, an oral self-care app that provides behavioral strategies to boost patient engagement in oral hygiene practices.
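To make the idea of a reward that trades brushing quality against user burden concrete, here is a minimal Python sketch. It is not the paper's reward: the function name `quality_reward`, the 7-day prompt count, and the hyperparameters `burden_weight` and `burden_threshold` are hypothetical, chosen only to show the shape of such a reward.

```python
def quality_reward(brushing_quality: float,
                   sent_prompt: bool,
                   prompts_past_week: int,
                   burden_weight: float = 5.0,
                   burden_threshold: int = 7) -> float:
    """Hypothetical 'quality reward': brushing quality minus a burden penalty.

    brushing_quality  -- observed outcome after the decision (e.g., brushing seconds)
    sent_prompt       -- whether a prompt was delivered at this decision
    prompts_past_week -- prompts already delivered in the last 7 days
    burden_weight and burden_threshold are illustrative hyperparameters,
    not values from the paper.
    """
    penalty = 0.0
    # Charge a cost only for prompts sent to users who are already heavily
    # prompted, a crude stand-in for the delayed cost of user burden.
    if sent_prompt and prompts_past_week >= burden_threshold:
        penalty = burden_weight
    return brushing_quality - penalty


# Usage: the same brushing outcome earns less reward when it followed a
# prompt sent to an already heavily prompted user.
print(quality_reward(100.0, sent_prompt=True, prompts_past_week=10))
print(quality_reward(100.0, sent_prompt=False, prompts_past_week=10))
```

In the paper's procedure, hyperparameters like these would be tuned by running each candidate through the simulation test bed and keeping the one that performs best. The sketch does not include that search.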

April 19, 2023

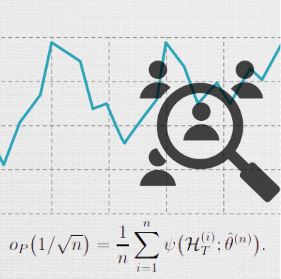

Online reinforcement learning and other adaptive sampling algorithms are increasingly used in digital intervention experiments to optimize treatment delivery for users over time. In this work, we focus on longitudinal user data collected by a large class of adaptive sampling algorithms that are designed to optimize treatment decisions online using accruing data from multiple users. Combining or “pooling” data across users allows adaptive sampling algorithms to potentially learn faster. However, by pooling, these algorithms induce dependence between the sampled user data trajectories; we show that this can cause standard variance estimators for i.i.d. data to underestimate the true variance of common estimators on this data type. We develop novel methods to perform a variety of statistical analyses on such adaptively sampled data via Z-estimation. Specifically, we introduce the adaptive sandwich variance estimator, a corrected sandwich estimator that leads to consistent variance estimates under adaptive sampling. Additionally, to prove our results we develop novel theoretical tools for empirical processes on non-i.i.d., adaptively sampled longitudinal data which may be of independent interest. This work is motivated by our efforts in designing experiments in which online reinforcement learning algorithms optimize treatment decisions, yet statistical inference is essential for conducting analyses after experiments conclude.
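For reference, the Python sketch below shows the kind of standard estimator the abstract refers to: a least-squares Z-estimator with the usual sandwich variance that treats users as independent clusters. It deliberately omits the paper's adaptive correction. Under pooled adaptive sampling, this is the estimator that can understate the true variance. The function name `user_clustered_sandwich` is hypothetical.

```python
import numpy as np


def user_clustered_sandwich(X, y, user_ids):
    """Least-squares Z-estimate plus the standard user-clustered sandwich variance.

    Treats users as i.i.d. clusters; it does NOT include the paper's
    adaptive sandwich correction for dependence induced by pooling.
    """
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    user_ids = np.asarray(user_ids)
    users = np.unique(user_ids)
    n = len(users)
    # Solve the estimating equation sum_i X_i^T (y_i - X_i beta) = 0.
    beta = np.linalg.solve(X.T @ X, X.T @ y)
    resid = y - X @ beta
    # "Bread": average (negative) derivative of the per-user estimating function.
    bread = (X.T @ X) / n
    # "Meat": average outer product of each user's summed estimating function.
    meat = np.zeros((X.shape[1], X.shape[1]))
    for u in users:
        mask = user_ids == u
        score = X[mask].T @ resid[mask]
        meat += np.outer(score, score)
    meat /= n
    bread_inv = np.linalg.inv(bread)
    cov = bread_inv @ meat @ bread_inv / n
    return beta, cov


# Usage on a tiny synthetic data set: 3 users with 2 observations each.
X = np.column_stack([np.ones(6), np.arange(6.0)])
y = 1.0 + 2.0 * np.arange(6.0) + np.array([0.1, -0.1, 0.2, -0.2, 0.05, -0.05])
beta, cov = user_clustered_sandwich(X, y, [0, 0, 1, 1, 2, 2])
print(beta, np.sqrt(np.diag(cov)))
```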

Contemporary Clinical Trials

Volume 145

October 2024

Just-in-Time Adaptive Interventions, mHealth, cannabis use, emerging adults, micro-randomized trial, reinforcement learning, mobile app-based intervention.

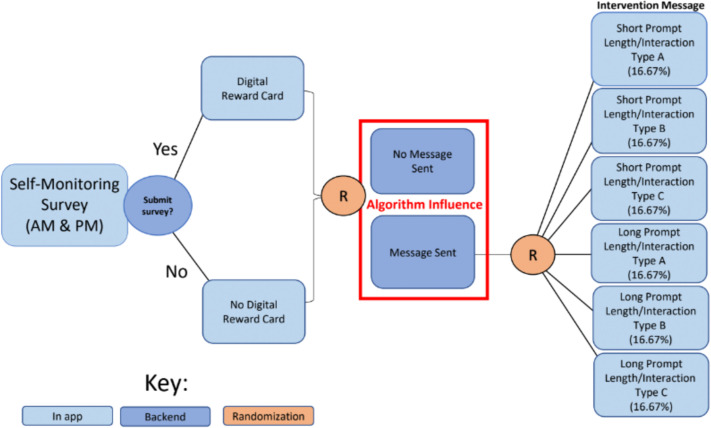

Emerging adult (EA) cannabis use is associated with increased risk for health consequences. Just-in-time adaptive interventions (JITAIs) provide potential for preventing the escalation and consequences of cannabis use. Powered by mobile devices, JITAIs use decision rules that take the person’s state and context as input, and output a recommended intervention (e.g., alternative activities, coping strategies). The mHealth literature on JITAIs is nascent, with additional research needed to identify what intervention content to deliver when and to whom. Herein we describe the protocol for a pilot study testing the feasibility and acceptability of a micro-randomized trial for optimizing the MiWaves mobile intervention app for EAs (ages 18–25; target N = 120) with regular cannabis use (≥3 times per week). Micro-randomizations will be determined by a reinforcement learning algorithm that continually learns and improves the decision rules as participants experience the intervention. MiWaves will prompt participants to complete an in-app twice-daily survey over 30 days, and participants will be micro-randomized twice daily to either: no message or a message [1 of 6 types varying in length (short, long) and interaction type (acknowledge message, acknowledge message + click additional resources, acknowledge message + fill in the blank/select an option)]. Participants recruited via social media will download the MiWaves app, and complete screening, baseline, weekly, post-intervention, and 2-month follow-up assessments. Primary outcomes include feasibility and acceptability, with additional exploratory behavioral outcomes. This study represents a critical first step in developing an effective mHealth intervention for reducing cannabis use and associated harms in EAs.

mHealth app uses reinforcement learning in a micro-randomized trial to deliver personalized interventions aiming to reduce cannabis use in emerging adults.
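The design arithmetic in this protocol can be made concrete. The short Python sketch below enumerates the options available at each micro-randomization (no message, or one of 2 lengths × 3 interaction types = 6 message types) and counts decision points. It assumes every participant completes all 30 days and that the full target sample of 120 enrolls, so the total is an upper bound, not a reported figure. It does not model how the reinforcement learning algorithm chooses among options.

```python
from itertools import product

# Message attributes as listed in the MiWaves protocol abstract.
LENGTHS = ("short", "long")
INTERACTIONS = (
    "acknowledge message",
    "acknowledge message + click additional resources",
    "acknowledge message + fill in the blank/select an option",
)


def miwaves_options():
    """Options at one micro-randomization: no message, or 1 of 2 x 3 = 6 message types."""
    messages = [f"{length} / {interaction}"
                for length, interaction in product(LENGTHS, INTERACTIONS)]
    return ["no message"] + messages


DECISIONS_PER_DAY = 2
STUDY_DAYS = 30
TARGET_N = 120

decisions_per_participant = DECISIONS_PER_DAY * STUDY_DAYS
# Upper bound: assumes full enrollment and no missed decision points.
max_total_decisions = decisions_per_participant * TARGET_N

print(len(miwaves_options()), decisions_per_participant, max_total_decisions)
```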

arXiv preprint: 2402.17739

June 11, 2024

reBandit, Reinforcement Learning (RL), Mobile Health (mHealth), Cannabis Use Disorder (CUD), Emerging Adults (EAs), Personalized Interventions, Random Effects, Bayesian Priors, Online Algorithm, Just-in-Time Adaptive Interventions (JITAIs), Simulation, Public Health.

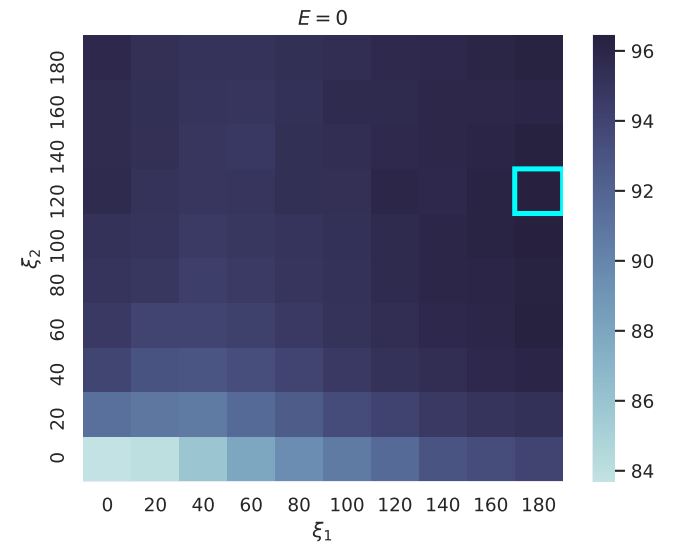

The escalating prevalence of cannabis use, and associated cannabis-use disorder (CUD), poses a significant public health challenge globally. With a notably wide treatment gap, especially among emerging adults (EAs; ages 18-25), addressing cannabis use and CUD remains a pivotal objective within the 2030 United Nations Agenda for Sustainable Development Goals (SDG). In this work, we develop an online reinforcement learning (RL) algorithm called reBandit which will be utilized in a mobile health study to deliver personalized mobile health interventions aimed at reducing cannabis use among EAs. reBandit utilizes random effects and informative Bayesian priors to learn quickly and efficiently in noisy mobile health environments. Moreover, reBandit employs Empirical Bayes and optimization techniques to autonomously update its hyper-parameters online. To evaluate the performance of our algorithm, we construct a simulation testbed using data from a prior study, and compare against commonly used algorithms in mobile health studies. We show that reBandit performs as well as or better than all the baseline algorithms, and the performance gap widens as population heterogeneity increases in the simulation environment, demonstrating its ability to adapt to a diverse population of study participants.

This paper introduces reBandit, an online reinforcement learning (RL) algorithm designed to reduce cannabis use among emerging adults (ages 18-25) through personalized mobile health (mHealth) interventions. reBandit is built to learn quickly and efficiently in noisy mHealth environments by utilizing random effects and informative Bayesian priors, which allow it to both personalize treatments for individual users and leverage data from the broader population. The algorithm also autonomously updates its hyper-parameters online and is engineered to account for delayed effects of interventions. Simulations show that reBandit performs as well as or better than other common mHealth algorithms, with its performance advantage increasing as population heterogeneity rises.
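The role of an informative Bayesian prior can be illustrated with a generic Thompson sampler built on Bayesian linear regression. In the Python sketch below, a Gaussian prior (for example, one fitted to an earlier study) is updated in closed form, and at each decision a parameter draw from the posterior picks the action with the larger sampled expected reward. This is a simplified stand-in, not reBandit itself: its random-effects structure and Empirical Bayes hyper-parameter updates are not reproduced. The class name, the feature map, and the known noise variance `sigma2` are assumptions.

```python
import numpy as np


class GaussianThompsonSampler:
    """Bayesian linear-regression Thompson sampling for a binary action.

    Hypothetical reward model: r = phi(s, a)^T theta + noise, noise ~ N(0, sigma2),
    with prior theta ~ N(mu0, Sigma0). An informative prior lets the sampler
    act sensibly before much participant data has accrued.
    """

    def __init__(self, mu0, Sigma0, sigma2=1.0, seed=0):
        self.prec = np.linalg.inv(np.asarray(Sigma0, dtype=float))  # posterior precision
        self.b = self.prec @ np.asarray(mu0, dtype=float)           # precision-weighted mean
        self.sigma2 = sigma2
        self.rng = np.random.default_rng(seed)

    @staticmethod
    def features(state, action):
        # Hypothetical feature map: baseline terms plus action-by-state interactions.
        state = np.asarray(state, dtype=float)
        return np.concatenate([state, action * state])

    def posterior(self):
        cov = np.linalg.inv(self.prec)
        return cov @ self.b, cov

    def select_action(self, state):
        mean, cov = self.posterior()
        theta = self.rng.multivariate_normal(mean, cov)
        # Choose the action whose sampled expected reward is larger.
        values = [self.features(state, a) @ theta for a in (0, 1)]
        return int(np.argmax(values))

    def update(self, state, action, reward):
        # Conjugate Gaussian update of the precision and precision-weighted mean.
        x = self.features(state, action)
        self.prec += np.outer(x, x) / self.sigma2
        self.b += x * reward / self.sigma2


# Usage: a 2-dimensional state gives 4 parameters; the prior here is uninformative.
ts = GaussianThompsonSampler(mu0=np.zeros(4), Sigma0=np.eye(4))
state = [1.0, 0.5]
a = ts.select_action(state)
ts.update(state, a, reward=1.0)
```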

(In Press) Annual Review of Psychology – Vol. 77 (2026)

Forthcoming 2026

Reinforcement Learning (RL), Personalized Interventions, Just-in-Time Adaptive Interventions (JITAIs), Public Health.

arXiv: 2409.02069

September 3, 2024

Reinforcement Learning, mHealth, Clinical Trials, Oral Health, Oral Self-Care Behaviors, Thompson Sampling, Contextual Bandits, Deployment, Replicability, Adaptive Interventions

Dental disease is a prevalent chronic condition associated with substantial financial burden, personal suffering, and increased risk of systemic diseases. Despite widespread recommendations for twice-daily tooth brushing, adherence to recommended oral self-care behaviors remains sub-optimal due to factors such as forgetfulness and disengagement. To address this, we developed Oralytics, an mHealth intervention system designed to complement clinician-delivered preventative care for marginalized individuals at risk for dental disease. Oralytics incorporates an online reinforcement learning algorithm to determine optimal times to deliver intervention prompts that encourage oral self-care behaviors. We have deployed Oralytics in a registered clinical trial. The deployment required careful design to manage challenges specific to the clinical trials setting in the U.S. In this paper, we (1) highlight key design decisions of the RL algorithm that address these challenges and (2) conduct a re-sampling analysis to evaluate algorithm design decisions. A second phase (randomized controlled trial) of Oralytics is planned to start in spring 2025.

This paper introduces Oralytics, an mHealth intervention system that leverages an online reinforcement learning (RL) algorithm to optimize the delivery of engagement prompts aimed at improving oral self-care behaviors (OSCB) in individuals at risk for dental disease. The authors detail the key design decisions for deploying this RL algorithm within a registered clinical trial setting, addressing challenges such as ensuring autonomy and replicability through methods like pre-scheduling actions and implementing fallback procedures, and dealing with limited per-individual data by using a full-pooling approach. Through re-sampling analysis, the study provides evidence that the algorithm successfully learned to identify states where sending prompts was effective or ineffective.
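Pre-scheduling with a fallback can be sketched in a few lines of Python. The sketch assumes a single normal posterior for the advantage of prompting, shared by all participants (full pooling). Under that assumption, the probability that Thompson sampling would prompt is P(effect > 0), which is clipped to keep both actions possible. Actions are then drawn in advance for a batch of decision times, and any decision time without a pre-scheduled action falls back to a default probability. The clip bounds, the twice-daily schedule, the fallback probability, and all function names are hypothetical. State dependence, which the deployed algorithm uses, is omitted.

```python
import math
import random


def prompt_probability(effect_mean: float, effect_sd: float,
                       lo: float = 0.2, hi: float = 0.8) -> float:
    """P(effect > 0) under a N(effect_mean, effect_sd**2) posterior, clipped to [lo, hi].

    With a binary action, this is the probability that Thompson sampling
    would choose to prompt; clipping keeps some randomization in both arms.
    """
    p = 0.5 * (1.0 + math.erf(effect_mean / (effect_sd * math.sqrt(2.0))))
    return min(hi, max(lo, p))


def preschedule(decision_times, effect_mean, effect_sd, rng):
    """Draw prompt (1) / no-prompt (0) actions ahead of time so the app can act offline."""
    p = prompt_probability(effect_mean, effect_sd)
    return {t: int(rng.random() < p) for t in decision_times}


def action_at(t, schedule, fallback_p, rng):
    """Use the pre-scheduled action if present; otherwise apply the fallback rule."""
    if t in schedule:
        return schedule[t]
    return int(rng.random() < fallback_p)


# Usage with hypothetical numbers: one pooled posterior, one week of
# morning and evening decision times.
rng = random.Random(42)
week = [f"day{d}-{slot}" for d in range(7) for slot in ("am", "pm")]
schedule = preschedule(week, effect_mean=0.3, effect_sd=1.0, rng=rng)
print(action_at("day0-am", schedule, fallback_p=0.5, rng=rng))
print(action_at("day9-am", schedule, fallback_p=0.5, rng=rng))  # not scheduled: fallback
```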

Contemporary Clinical Trials

engagement, Micro-randomized trial (MRT), mobile health (mHealth), self-regulatory strategies, smoking cessation

November 2021

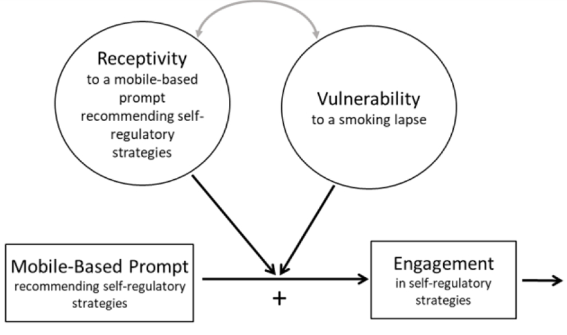

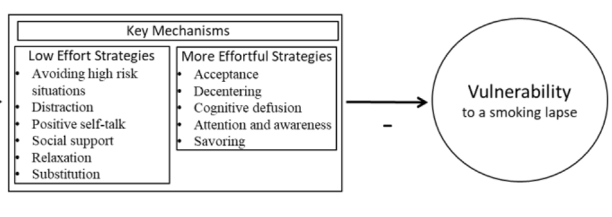

Smoking is the leading preventable cause of death and disability in the U.S. Empirical evidence suggests that engaging in evidence-based self-regulatory strategies (e.g., behavioral substitution, mindful attention) can improve smokers’ ability to resist craving and build self-regulatory skills. However, poor engagement represents a major barrier to maximizing the impact of self-regulatory strategies. This paper describes the protocol for Mobile Assistance for Regulating Smoking (MARS) – a research study designed to inform the development of a mobile health (mHealth) intervention for promoting real-time, real-world engagement in evidence-based self-regulatory strategies. The study will employ a 10-day Micro-Randomized Trial (MRT) enrolling 112 smokers attempting to quit. Utilizing a mobile smoking cessation app, the MRT will randomize each individual multiple times per day to either: (a) no intervention prompt; (b) a prompt recommending brief (low effort) cognitive and/or behavioral self-regulatory strategies; or (c) a prompt recommending more effortful cognitive or mindfulness-based strategies. Prompts will be delivered via push notifications from the MARS mobile app. The goal is to investigate whether, what type of, and under what conditions prompting the individual to engage in self-regulatory strategies increases engagement. The results will build the empirical foundation necessary to develop an mHealth intervention that effectively utilizes intensive longitudinal self-report and sensor-based assessments of emotions, context, and other factors to engage an individual in the type of self-regulatory activity that would be most beneficial given their real-time, real-world circumstances. This type of mHealth intervention holds enormous potential to expand the reach and impact of smoking cessation treatments.
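The micro-randomization described above can be sketched in Python as follows. Each decision point assigns one of the three options independently, from a reproducible per-participant random stream. The abstract says only "multiple times per day," so the 6 decision points per day and the randomization probabilities (0.5, 0.25, 0.25) are hypothetical placeholders, as is the function name `micro_randomize`.

```python
import random

OPTIONS = ("no prompt", "low-effort prompt", "high-effort prompt")
# Hypothetical probabilities; the abstract does not state them.
PROBABILITIES = (0.5, 0.25, 0.25)


def micro_randomize(participant_id: int, days: int = 10,
                    decisions_per_day: int = 6, seed: int = 2021):
    """Return (day, decision index, option) for each decision point.

    Each participant gets an independent, reproducible random stream, so the
    assignment can be regenerated and audited after the trial.
    """
    rng = random.Random(f"{seed}-{participant_id}")
    return [
        (day, k, rng.choices(OPTIONS, weights=PROBABILITIES)[0])
        for day in range(days)
        for k in range(decisions_per_day)
    ]


schedule = micro_randomize(participant_id=1)
print(len(schedule), schedule[:3])
```

Randomizing at every decision point, rather than once per participant, is what lets an MRT estimate whether the effect of a prompt on engagement varies with time and context.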

The American Psychologist

October 7, 2022

engagement, digital interventions, affect, motivation, attention, AIM-ACT framework, behavior change, neurophysiological, real-world settings.

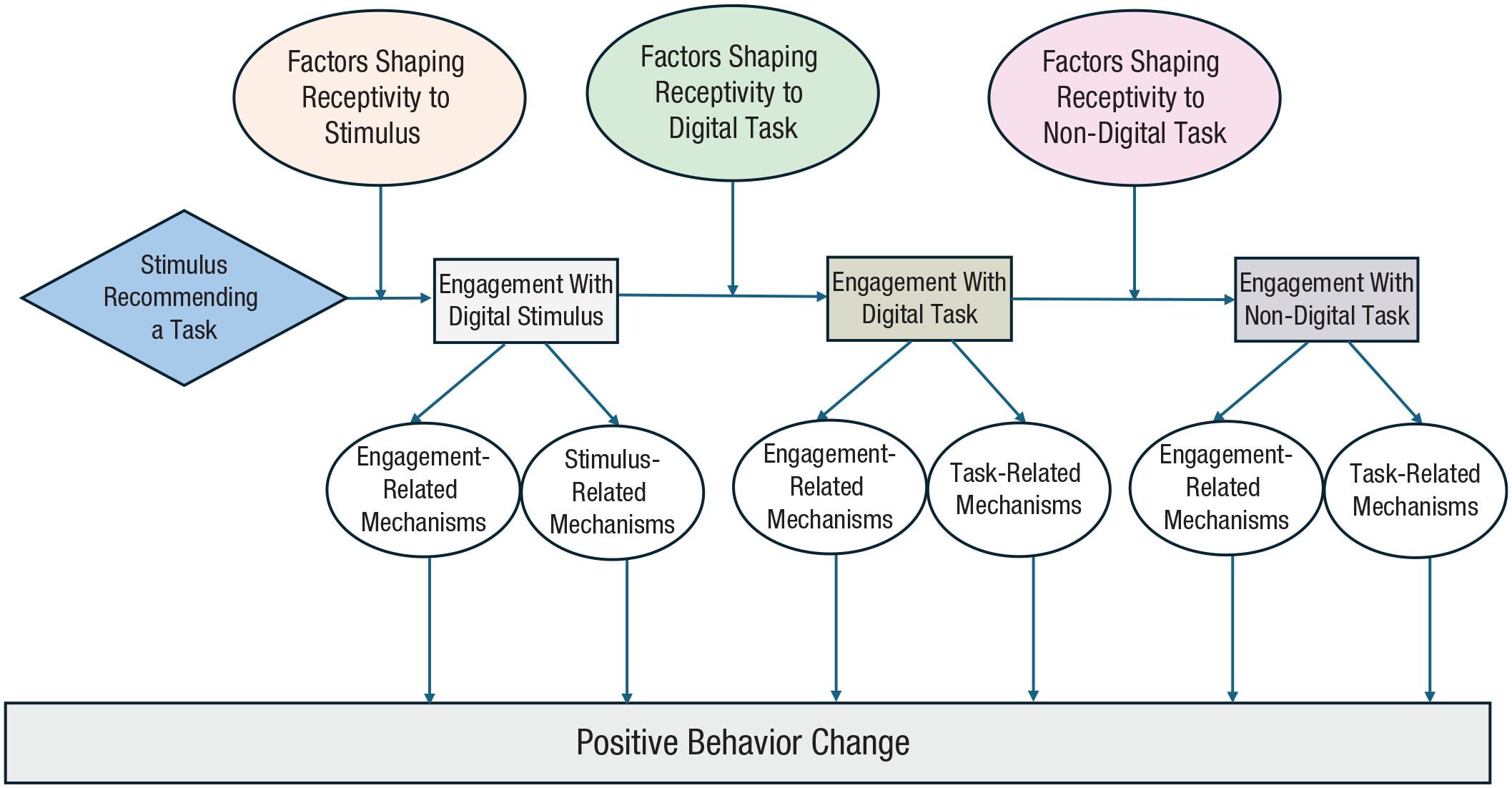

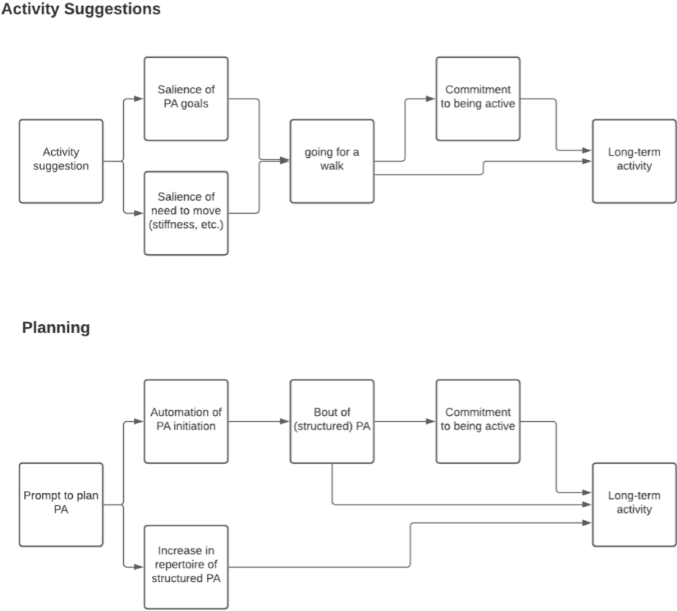

The notion of “engagement,” which plays an important role in various domains of psychology, is gaining increased currency as a concept that is critical to the success of digital interventions. However, engagement remains an ill-defined construct, with different fields generating their own domain-specific definitions. Moreover, given that digital interactions in real-world settings are characterized by multiple demands and choice alternatives competing for an individual’s effort and attention, they involve fast and often impulsive decision-making. Prior research seeking to uncover the mechanisms underlying engagement has nonetheless focused mainly on psychological factors and social influences and neglected to account for the role of neural mechanisms that shape individual choices. This article aims to integrate theories and empirical evidence across multiple domains to define engagement and discuss opportunities and challenges to promote effective engagement in digital interventions. We also propose the affect-integration-motivation and attention-context-translation (AIM-ACT) framework, which is based on a neurophysiological account of engagement, to shed new light on how in-the-moment engagement unfolds in response to a digital stimulus. Building on this framework, we provide recommendations for designing strategies to promote engagement in digital interventions and highlight directions for future research.

This publication addresses the ill-defined concept of “engagement” in digital interventions, which often suffer from high attrition rates. It synthesizes existing definitions to propose a new, clear definition of engagement as “a state of energy investment involving physical, affective, and cognitive energies directed toward a focal stimulus or task”. To understand how in-the-moment engagement unfolds in digital settings, the paper introduces the AIM-ACT framework. This neurophysiological framework explains how Affect, Integration, and Motivation (AIM) are modulated by Attention, Context, and the Translation of motivation to behavior (ACT) to promote positive behavior change in real-world settings. The AIM-ACT framework aims to guide future research and design strategies for effective digital interventions.

Psychological Methods

January 13, 2022

Lecture Notes in Computer Science (LNAI, volume 15734)

International Conference on Artificial Intelligence in Medicine

June 23, 2025

reBandit, Reinforcement Learning (RL), Mobile Health (mHealth), Cannabis Use Disorder (CUD), Emerging Adults (EAs), Personalized Interventions, Random Effects, Bayesian Priors, Online Algorithm, Just-in-Time Adaptive Interventions (JITAIs), Simulation, Public Health.

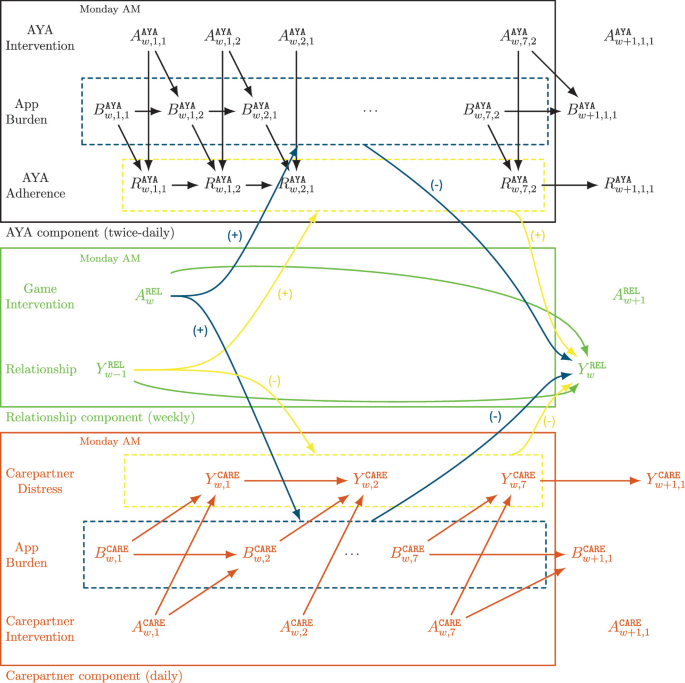

Medication adherence is critical for the recovery of adolescents and young adults (AYAs) who have undergone hematopoietic cell transplantation. However, maintaining adherence is challenging for AYAs after hospital discharge, as they experience both individual barriers (e.g., physical and emotional symptoms) and interpersonal barriers (e.g., relational difficulties with their care partner, who is often involved in medication management). To optimize the effectiveness of a three-component digital intervention targeting both members of the dyad as well as their relationship, we propose a novel Multi-Agent Reinforcement Learning (MARL) approach to personalize the delivery of interventions. By incorporating domain knowledge, the MARL framework, in which each agent is responsible for the delivery of one intervention component, allows for faster learning compared with a flattened agent. Evaluation using a dyadic simulator environment, based on real clinical data, shows a significant improvement in medication adherence (approximately 3%) compared to purely random intervention delivery. The effectiveness of this approach will be further evaluated in an upcoming trial.

This publication introduces a novel Multi-Agent Reinforcement Learning (MARL) approach to personalize the delivery of a three-component digital intervention (ADAPTS-HCT) aimed at improving medication adherence in adolescents and young adults (AYAs) who have undergone hematopoietic cell transplantation (HCT) and their care partners. The MARL framework, which assigns separate agents to the AYA, care partner, and relationship components, addresses challenges like multi-scale decision-making and noisy, data-limited settings by incorporating domain knowledge and using surrogate reward functions. Evaluation in a simulated dyadic environment, based on real clinical data, demonstrated a significant improvement of approximately 3% in medication adherence compared to purely random intervention delivery, with further evaluation planned in an upcoming clinical trial.
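One intuition for why a factored design can learn faster is that it shrinks the action space each learner faces. If each of the three components has a binary deliver/withhold decision (an assumption), a flattened agent must compare 2³ = 8 joint actions, while each factored agent compares only 2. The Python sketch below uses independent Beta-Bernoulli Thompson sampling agents that share one binary reward (for example, whether medication was taken). This simple independent-learner scheme is swapped in for illustration. The paper's agents also use domain knowledge, surrogate rewards, and different decision time scales, none of which is modeled here, and all names are hypothetical.

```python
import random


class BetaBernoulliTS:
    """Thompson sampling over a small set of arms with Beta(1, 1) priors."""

    def __init__(self, n_arms, rng):
        self.alpha = [1.0] * n_arms
        self.beta = [1.0] * n_arms
        self.rng = rng

    def select(self):
        draws = [self.rng.betavariate(a, b) for a, b in zip(self.alpha, self.beta)]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, arm, reward):
        # reward is binary (0 or 1).
        self.alpha[arm] += reward
        self.beta[arm] += 1 - reward


COMPONENTS = ("aya", "care_partner", "relationship")


class FactoredAgents:
    """One binary agent per intervention component (2 arms each)."""

    def __init__(self, rng):
        self.agents = {c: BetaBernoulliTS(2, rng) for c in COMPONENTS}

    def select(self):
        return {c: agent.select() for c, agent in self.agents.items()}

    def update(self, actions, reward):
        # Every agent learns from the shared reward for its own chosen arm.
        for c, agent in self.agents.items():
            agent.update(actions[c], reward)


# Usage: compare with a flattened agent over all 2**3 = 8 joint actions.
rng = random.Random(0)
factored = FactoredAgents(rng)
flattened = BetaBernoulliTS(2 ** len(COMPONENTS), rng)
actions = factored.select()
factored.update(actions, reward=1)
print(actions, len(flattened.alpha))
```

With this setup, each factored agent spreads its observations over 2 arms, whereas the flattened agent spreads the same observations over 8.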

To appear in Volume 20 of the Annual Review of Clinical Psychology, 2023

2023

engagement, oral health, mobile health intervention, racial and ethnic minority group, message development

arXiv:2308.07843

November 3, 2023

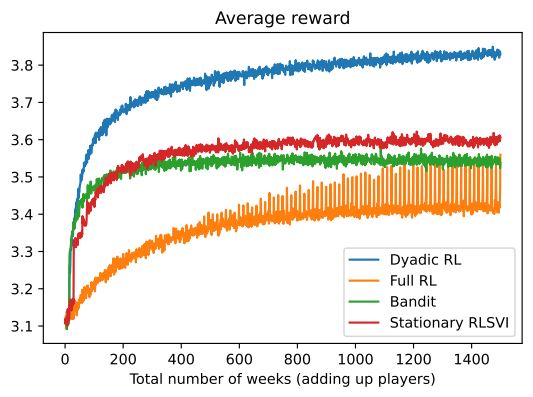

dyadic reinforcement learning, online learning, mobile health, algorithm design

Mobile health aims to enhance health outcomes by delivering interventions to individuals as they go about their daily life. The involvement of care partners and social support networks often proves crucial in helping individuals manage burdensome medical conditions. This presents opportunities in mobile health to design interventions that target the dyadic relationship — the relationship between a target person and their care partner — with the aim of enhancing social support. In this paper, we develop dyadic RL, an online reinforcement learning algorithm designed to personalize intervention delivery based on contextual factors and past responses of a target person and their care partner. Here, multiple sets of interventions impact the dyad across multiple time intervals. The developed dyadic RL is Bayesian and hierarchical. We formally introduce the problem setup, develop dyadic RL and establish a regret bound. We demonstrate dyadic RL’s empirical performance through simulation studies on both toy scenarios and on a realistic test bed constructed from data collected in a mobile health study.

The focus of the MAPS Center is the development, evaluation, and dissemination of novel research methodologies that are essential to optimize adaptive interventions to combat SUD/HIV.

Project 2 focuses on developing innovative methods that will enable scientists, for the first time, to optimize the integration of human-delivered services with relatively low-intensity adaptation (i.e., adaptive interventions) and digital services with high-intensity adaptation (i.e., JITAIs). This project will develop a new trial design in which individuals can be randomized simultaneously to human-delivered and digital interventions at different time scales. This includes developing guidelines for trial design, sample size calculators, and statistical analysis methods that will enable scientists to use data from the new experimental design to address novel questions about synergies between human-delivered adaptive interventions and digital JITAIs.

Project 3 focuses on developing innovative methods to optimize JITAIs in which the decision rules are continually updated to ensure effective adaptation as individual needs change and societal trends occur. Integrating approaches from artificial intelligence and statistics, this project will develop algorithms that continually update “population-based” decision rules (designed to work well for all individuals on average) to improve intervention effectiveness. This project will also generalize these algorithms to continually optimize “person-specific” decision rules for JITAIs. The algorithms will be designed specifically to (a) assign each individual the intervention that is right for them at a particular moment; (b) maintain acceptable levels of burden; and (c) maintain engagement.

Project 3 of MAPS aims to collaborate with TR&D2 (Murphy) by developing methods for appropriately pooling data from multiple users to speed up learning of both population-based and personalized decision rules. These collaborations will be used to enhance the impact of TR&D2’s Aims 2 and 3 and thus lay the foundation for successful future research projects. Project 3 of MAPS also aims to collaborate with TR&D1 (Marlin) by utilizing TR&D1’s advances in propagating and representing uncertainty in Project 3’s development of methods for adapting the timing and location of delivery of different intervention prompts. These collaborations will increase the impact of TR&D1’s Aims 1 and 2. Project 2 of MAPS plans to collaborate with TR&D1 (Marlin) to develop a composite substance use risk indicator derived from sensor data that can be assessed at different time scales and hence can inform the adaptation of both human-delivered and digital interventions. Project 2 also plans to collaborate with TR&D2 (Murphy) to develop optimization methods for learning what type of digital intervention is best delivered, and under what conditions, in a setting in which non-digital (human-delivered) interventions are also provided; this is an extreme case of TR&D2’s Aim 3, which focuses on multiple intervention components delivered at different time scales and with different short-term objectives. As such, this collaboration has the potential to synergistically enhance the aims of both TR&Ds as well as those of MAPS Project 2.