The mDOT Center

Transforming health and wellness via temporally-precise mHealth interventions

mDOT@MD2K.org

901.678.1526

Invitation-Only Think Tank on the Next Generation of Wearable Tech for Health & Wellness

Thursday, October 9, 2025 | 8:00 AM – 5:00 PM ET

The Visioning Think Tank on Generative AI-Powered Interactive mHealth Interventions is designed as a dynamic and forward-thinking event bringing together experts from academia and industry.

Recent advances in Generative AI, especially large language models (LLMs), are creating powerful opportunities for intelligent, interactive health interventions that can generate high-quality content (text, audio, image, video) with fluency and contextual awareness. Combined with emerging wearable and sensor technologies, these models can engage users in natural dialogue while interpreting real-time behavioral, physiological, and environmental data. This convergence holds tremendous potential for more engaging and effective just-in-time adaptive interventions (JITAIs), an intervention approach that leverages rapidly changing information about a participant’s state and context to decide whether and how to deliver behavioral interventions in the moment.
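The JITAI definition rests on one decision made at each moment: whether to intervene, and if so, how. A small rule can make that concrete. The sketch below is a hypothetical illustration and not a method presented at the Think Tank. All of its specifics are assumptions chosen for readability:

- the context fields `stress_prob`, `is_driving`, and `minutes_since_last_prompt`;
- the 0.7 stress threshold and the 60-minute minimum gap;
- the option name `brief_breathing_exercise`.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical field names, thresholds, and option names, for illustration only.


@dataclass
class Context:
    """Hypothetical snapshot of a participant's state at a decision point."""
    stress_prob: float                # e.g., wearable-derived probability of stress (0 to 1)
    is_driving: bool                  # availability: unsafe to interrupt when True
    minutes_since_last_prompt: float  # used to limit participant burden


def decide(ctx: Context, threshold: float = 0.7, min_gap: float = 60.0) -> Optional[str]:
    """Return an intervention option to deliver now, or None to deliver nothing."""
    # "Whether": skip if the participant is unavailable or was prompted recently.
    if ctx.is_driving or ctx.minutes_since_last_prompt < min_gap:
        return None
    # "How": tailor delivery to the current state (here, a single stress threshold).
    if ctx.stress_prob >= threshold:
        return "brief_breathing_exercise"
    return None
```

In a Generative AI-powered JITAI, the fixed option returned here is where model-generated, context-aware content could plug in. The whether/how decision itself would still need its own rules or a learned policy.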

This invitation-only Visioning Think Tank brings together leaders in behavioral science, AI, mobile health (mHealth), and human-computer interaction (HCI) to shape the next wave of innovation at the intersection of Generative AI, wearables, and JITAIs. Together, participants will envision how to develop and deploy the next generation of JITAIs that are supported by Generative AI to deliver highly engaging and effective behavioral interventions.

To showcase the current state of the field, this event will highlight emerging tools in audio and video content generation. Although these tools were not built for health, they illustrate how capabilities from other domains can be translated into behavioral health, and they raise new questions about how best to deploy them in JITAI settings.

This Think Tank is a launch point for identifying priority use cases, exploring partnerships, and shaping a shared roadmap for how Generative AI + Wearables + JITAIs can work together to revolutionize behavioral health. Whether building platforms or applying them in practice, this forum will offer both inspiration and a foundation for profound and transformative collaboration.

The Think Tank will dive into the rapidly evolving intersection of Generative AI, wearable technologies, and behavioral health. Participants will discover how these tools can unlock new ways to design just-in-time adaptive interventions (JITAIs) that respond to individuals in the moment.

Through structured discussions, participants will uncover the most promising use cases for Generative AI in mHealth while examining the key barriers (technical, clinical, and societal) that must be addressed to ensure responsible deployment.

The Think Tank will explore the ethical, privacy, and algorithmic challenges that arise when applying LLMs and generative models to health contexts. Participants will gain insights into how to design systems that are transparent, equitable, and trustworthy.

A pivotal goal of the Think Tank is to develop a strategic roadmap. Participants will work collaboratively to define a shared vision for the future of Generative AI-powered JITAIs, identifying key priorities, research gaps, and implementation pathways that can guide innovation over the next 3–5 years.

The closing session of the Think Tank will summarize outcomes and contributions. Participants will share the insights they have gained and discuss next steps for the research community. The Think Tank aims to build momentum beyond the event by outlining concrete next steps, forming new partnerships, and contributing to a growing community committed to advancing the science and impact of AI-driven behavioral interventions.

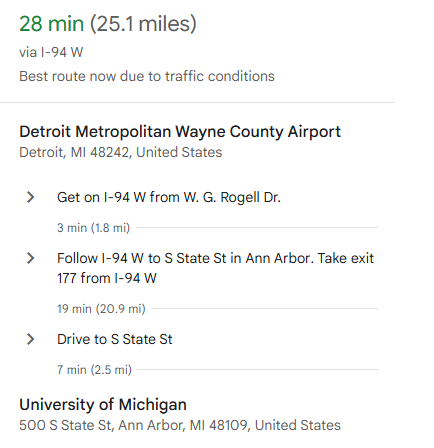

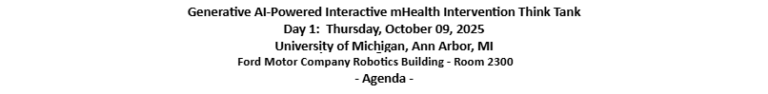

Ford Motor Company Robotics Building

Room 2300

2505 Hayward St

Ann Arbor, MI 48109-2106

On The Campus of the University of Michigan

The majority of Think Tank attendees will be staying at the campus-preferred hotel, the DoubleTree Hotel.

3600 Plymouth Rd, Ann Arbor, MI 48105, USA

1-734-769-9800

For those staying at the DoubleTree Hotel, a hot breakfast is complimentary with your stay if you booked through the reserved link.

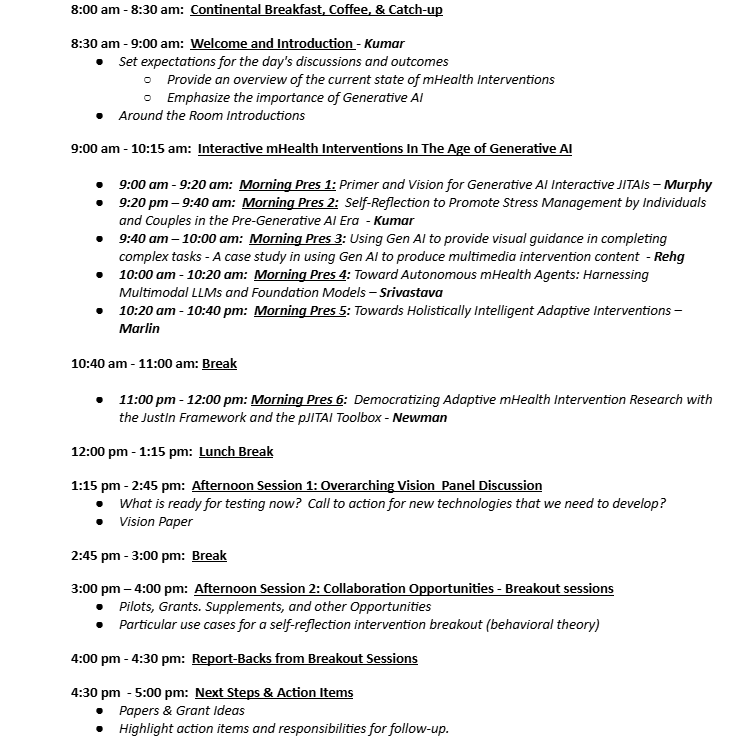

Continental breakfast, freshly brewed coffee, and hot tea will be available from 8:00 am onward just outside the meeting room in the Ford Robotics Building. Attendees are encouraged to arrive between 8:00 am and 8:30 am to allow ample time for networking and catching up before the Think Tank begins.

For attendees staying at the DoubleTree Hotel, there will not be a shuttle to the conference venue.

Attendees are encouraged to carpool with fellow attendees at the DoubleTree Hotel who have rental cars, or to take Uber/Lyft to the venue.

Once on site, attendees should connect to the venue network and follow the registration instructions.

More information to come.

Ann Arbor is a dynamic mix of energy and charm: a bustling university town, a foodie’s paradise, and a cutting-edge tech hub, all wrapped in a walkable downtown bursting with world-class arts and culture. Located in Michigan’s southeast Lower Peninsula, Ann Arbor is the heartbeat of a vibrant network of communities across Washtenaw County. With its creative spirit, diverse flavors, and unique neighborhoods, Ann Arbor shines as an exciting oasis.

Ann Arbor is a city west of Detroit, in the Midwestern state of Michigan. It’s home to the sprawling University of Michigan, known for its research programs. The University of Michigan Museum of Art displays works from around the globe, spanning centuries. Science-focused attractions in the city include the Museum of Natural History and the Ann Arbor Hands-On Museum, which offers kid-friendly interactive exhibits.

Founded in 1824 with American settlers from the East Coast, it was incorporated as a city in 1851. The city is located on the Huron River. It is the principal city of its metropolitan area, and it is also included in the Detroit–Warren–Ann Arbor combined statistical area as well as the Great Lakes megalopolis. It had a population of 123,851 in 2020.

(Courtesy of Wikipedia, Ann Arbor.org).

Primer and Vision for Generative AI-Powered Interactive Self-Reflection Interventions

This presentation will introduce a vision for using generative AI, specifically Large Language Models (LLMs), as dynamic “sensors” that capture subjective states and contexts in mental health research. It will address the limitations of traditional experience sampling methods, such as Ecological Momentary Assessments (EMAs), which are often burdensome and lack nuance. It will then propose leveraging LLMs to generate raw conversational data streams that can be processed into perception time series, enabling more flexible and personalized insights into individuals’ internal experiences. Emphasis will be placed on establishing reliability and validity comparable to those of physiological sensors, while exploring applications ranging from passive data collection to interactive self-reflection interventions. By positioning LLMs as adaptive, language-based tools for understanding and supporting mental health, the presentation will outline a pathway toward richer, more responsive approaches to assessing and enhancing human well-being.
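One way to picture the “perception time series” idea is as a post-processing step that buckets conversation-derived ratings by time. The sketch below is a minimal illustration under stated assumptions, not the presenters’ method. It assumes an LLM has already turned each conversational turn into a timestamped numeric rating, for example a 0-10 stress score. That extraction step is not shown. The function name `to_hourly_series` and the hourly resolution are hypothetical choices.

```python
from collections import defaultdict
from datetime import datetime
from statistics import mean


def to_hourly_series(ratings: list[tuple[datetime, float]]) -> dict[datetime, float]:
    """Aggregate timestamped ratings into an hourly time series (mean per hour).

    Hypothetical helper: `ratings` is assumed to hold (timestamp, score) pairs
    that an LLM has already extracted from conversational turns.
    """
    buckets: dict[datetime, list[float]] = defaultdict(list)
    for ts, score in ratings:
        # Truncate each timestamp to the start of its hour.
        hour = ts.replace(minute=0, second=0, microsecond=0)
        buckets[hour].append(score)
    # Hours with no ratings are simply absent (no imputation is attempted).
    return {hour: mean(scores) for hour, scores in sorted(buckets.items())}


ratings = [
    (datetime(2025, 10, 9, 9, 5), 4.0),
    (datetime(2025, 10, 9, 9, 40), 6.0),
    (datetime(2025, 10, 9, 11, 15), 2.0),
]
series = to_hourly_series(ratings)
```

Here the two 9 o’clock ratings average to 5.0, and the 11 o’clock rating stands alone. A real pipeline would also need to address the reliability and validity questions the abstract raises, which simple averaging does not.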

Self-Reflection to Promote Stress Management by Individuals and Couples in the Pre-Generative AI Era

Self-reflection can have a profound impact on people, motivating them to initiate and sustain healthier behaviors. In stress management, where the sources of stress are so varied, providing people with the right kind of information is critical. This talk will showcase two successful applications of self-reflection. In the 100-day MOODS study, 122 participants were provided with visualizations of their stress, stressors, and associated context. They reported self-initiating 14 different types of behavior change and a significant reduction in both the self-reported intensity and frequency of stressful events. In the couples study, 19 couples reviewed their stress and stressor data together and discovered many new life lessons about how to better care for each other. Together, the two studies show that self-reflection can help people self-initiate behavior changes that reduce stress for themselves and for the people they closely interact with.

![University of Illinois Urbana-Champaign logo](https://cdn.freelogovectors.net/wp-content/uploads/2023/06/university-of-illinois_at_urbana_champaign_logo-freelogovectors.net_.png)

Using Gen AI to provide visual guidance in completing complex tasks – A case study in using Gen AI to produce multimedia intervention content

There is growing interest in creating AI agents that can support individuals in performing complex tasks in daily life. For example, a person wearing smart glasses who faces a repair task for the first time, or who is assembling a new piece of furniture, could benefit from personalized instructions delivered via the glasses and tailored to their particular context. This talk will describe an approach to generating an instructional image that shows a participant how to perform a particular task. The input is an image captured from an egocentric perspective (a first-person view of the scene captured via smart glasses), along with a question about how to perform a task. The output is an automatically synthesized image, in the same egocentric reference frame, that illustrates how to perform the action. I will describe our solutions to two technical challenges: 1) generating datasets with detailed action descriptions in a scalable way, and 2) adapting existing diffusion-based image generation models to produce images in the egocentric data domain.

Mobile health (mHealth) systems are rapidly evolving from smartphone- and wearable-based passive data collection platforms into autonomous agents that sense, reason, and act to support health and well-being. Large Language Models (LLMs) and related foundation models, including multimodal LLMs and vision-language models (VLMs), provide a powerful substrate for building such agents. Their ability to integrate world knowledge with sensor inputs enables new forms of reasoning over noisy, heterogeneous, unstructured data. Their generative capabilities also open opportunities for naturalistic, human-centered actions such as adaptive feedback, guidance, and conversation. In this talk, drawing on recent work from our group, I will outline how generative AI can power autonomous mHealth agents across the sensing–reasoning–action pipeline. I will conclude with key opportunities and open challenges in making these agents efficient and trustworthy.

Towards Holistically Intelligent Adaptive Interventions

The use of reinforcement learning (RL) methods to support health behavior change via personalized and just-in-time adaptive interventions is of significant interest to health and behavioral science researchers. These researchers focus on problems such as smoking cessation support and physical activity promotion. However, RL methods are often applied in these domains using only a small set of context variables. This mitigates the significant data scarcity that arises from practical limitations on the design of adaptive intervention trials. In this talk, I will describe an approach to significantly expanding the state space of an adaptive intervention by combining participant-provided state descriptions with natural language inference using pre-trained large language models. This approach is a first step toward adaptive intervention agents that have a more holistic understanding of participants while preserving intervention designers’ complete control over intervention content.

Democratizing Adaptive mHealth Intervention Research with the JustIn Framework and the pJITAI Toolbox

This presentation introduces the JustIn Framework, an open-source platform designed to democratize adaptive mobile health (mHealth) intervention research by streamlining the development of just-in-time adaptive interventions (JITAIs). Current approaches to building JITAIs are often costly, time-intensive, and technically demanding, requiring researchers to recreate core infrastructure for each new project. The JustIn Framework addresses these challenges through a modular, layered architecture that supports three levels of interaction: a programming layer for developers, a configuration layer for technical researchers, and a graphical interface for behavioral scientists. This design enables users with varying technical expertise to efficiently build and deploy adaptive interventions. Key goals include reducing development costs and complexity, fostering collaboration, and supporting rapid, flexible intervention design. Envisioned as a consortium-based model similar to REDCap, the framework promotes shared innovation and resource exchange across institutions. Ultimately, the JustIn Framework, together with the pJITAI Toolbox, advances a standardized, accessible foundation for accelerating behavioral science and adaptive mHealth intervention research.

David Kennedy, PhD | Professor of Psychiatry | University of Massachusetts Medical School

Santosh Kumar, PhD | Lillian & Morrie Moss Chair of Excellence Professor | University of Memphis – Center Director, Lead PI, TR&D1, TR&D2, TR&D3

Jim Rehg, PhD | Founder Professor of Computer Science | University of Illinois Urbana-Champaign – Center Deputy Director, TR&D1 Lead

Susan Murphy, PhD | Professor of Statistics & Computer Science | Harvard University – TR&D2 Lead

Benjamin Marlin, PhD | Associate Professor | University of Massachusetts Amherst – Co-I, TR&D1, TR&D2

Emre Ertin, PhD | Associate Professor | The Ohio State University – TR&D3 Lead

Mani Srivastava, PhD | Professor of Electrical Engineering & Computer Science | University of California, Los Angeles – Co-I, TR&D3

Vivek Shetty, DDS, MD | Professor of Oral & Maxillofacial Surgery/Biomedical Engineering | University of California, Los Angeles – Training & Dissemination Lead

Inbal Nahum-Shani, PhD | Collegiate Research Professor | University of Michigan – d3c Center Director

Walter Dempsey, PhD | Associate Professor of Biostatistics | University of Michigan

Cait Hayward, PhD | Institute for Social Research | University of Michigan – d3c Center Managing Director

Pei-Yao Hung, PhD | Software Developer | University of Michigan

Jamie Yap | Data Scientist | University of Michigan

Susan Murphy, PhD | Professor of Statistics & Computer Science | Harvard University – PI

Shiyu Zhang, PhD | Center Research Investigator | University of Michigan

Lara Coughlin | Assistant Professor of Psychiatry | University of Michigan

Ewa Czyz | Associate Professor of Psychiatry | University of Michigan

John Dziak | Data Scientist | University of Michigan

Dave Fresco | Professor of Psychiatry | University of Michigan

Simon Goldberg | Associate Professor of Psychology | University of Wisconsin

Vik Kheterpal | Principal | CareEvolution, Inc.

Pedja Klasnja | Professor of Information | University of Michigan

Kristin Manella | Psychiatry, Taylor Lab | University of Michigan

Daniel McDuff | Staff Research Scientist and Manager | Google | Co-Founder of RAIL

Mark Newman | Professor | University of Michigan

Mashfiqui Rabbi | Assistant Research Professor | University of Illinois Urbana-Champaign

Koustuv Saha | Assistant Professor | University of Illinois Urbana-Champaign

Rebecca Sripada | Associate Professor of Clinical Psychology | University of Michigan

Nathan Stohs | Embedded Systems Engineer | The Ohio State University

Hosnera Ahmed | Graduate Research Assistant | The University of Memphis

Yuyi Chang | Doctoral Student | The Ohio State University

Harish Haresamudram | Doctoral Student | University of Illinois Urbana-Champaign

Young Suh Hong | Doctoral Student | University of Michigan

Asim Gazi | Doctoral Student | Harvard University

Susobhan Ghosh | Doctoral Student | Harvard University

Bhanu Gullapalli | Doctoral Student | Harvard University

Xueqing Liu | Doctoral Student | Harvard University

Wanting Mao | Doctoral Student | University of Illinois Urbana-Champaign

Sameer Neupane | Doctoral Student | The University of Memphis

Mithun Saha | Doctoral Student | The University of Memphis

Sajal Shovon | Doctoral Student | The University of Memphis

Aditya Radhakrishnan | Doctoral Student | University of Illinois Urbana-Champaign

Maxwell Xu | Doctoral Student | University of Illinois Urbana-Champaign

Yi Yan | Graduate Research Associate | The Ohio State University

Kang Yang | Doctoral Student | University of California, Los Angeles

Director of Operations

Director, Research Data & Studies