The mDOT Center

Transforming health and wellness via temporally-precise mHealth interventions

mDOT@MD2K.org

901.678.1526


Collaborating Investigator:

Dr. Predrag Klasnja, University of Michigan

Funding Status:

NIH/NCI

9/19/18 – 8/31/22

Associated with:

International Conference on Learning Representations (ICLR)

January 28, 2022

irregular sampling, uncertainty, imputation, interpolation, multivariate time series, missing data, variational autoencoder

Our goal is to model and represent uncertainty in mHealth biomarkers, accounting for multifaceted uncertainty during momentary decision making when selecting, adapting, and delivering temporally-precise mHealth interventions. In this period, we extended our previous deep learning approach, Multi-Time Attention Networks, to improve its representation of output uncertainty. Our new approach preserves the idea of learned temporal similarity functions and adds heteroscedastic output uncertainty. The new framework is referred to as the Heteroscedastic Temporal Variational Autoencoder (HeTVAE) and models real-valued multivariate data.

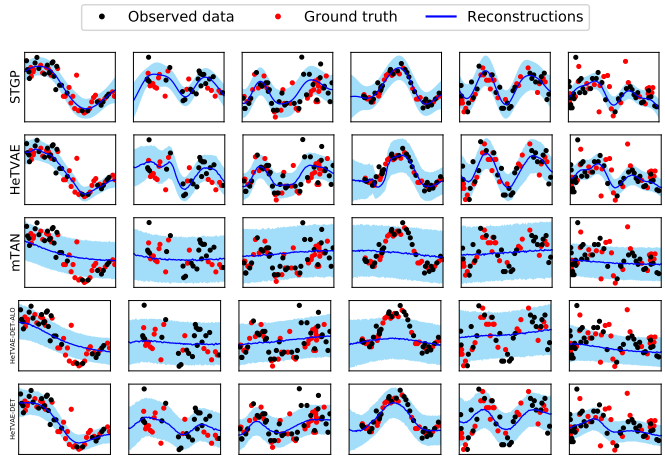

Irregularly sampled time series commonly occur in several domains where they present a significant challenge to standard deep learning models. In this paper, we propose a new deep learning framework for probabilistic interpolation of irregularly sampled time series that we call the Heteroscedastic Temporal Variational Autoencoder (HeTVAE). HeTVAE includes a novel input layer to encode information about input observation sparsity, a temporal VAE architecture to propagate uncertainty due to input sparsity, and a heteroscedastic output layer to enable variable uncertainty in output interpolations. Our results show that the proposed architecture is better able to reflect variable uncertainty through time due to sparse and irregular sampling than a range of baseline and traditional models, as well as recently proposed deep latent variable models that use homoscedastic output layers.

We present a new deep learning architecture for probabilistic interpolation of irregularly sampled time series.
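As a rough illustration of why a heteroscedastic output layer matters, the sketch below scores the same interpolated means under a shared (homoscedastic) variance and under per-time-point variances, using a Gaussian negative log-likelihood. The data values and the function name `gaussian_nll` are illustrative assumptions, not taken from the HeTVAE paper or its code.

```python
import math


def gaussian_nll(targets, means, variances):
    """Average negative log-likelihood of targets under independent Gaussians."""
    total = 0.0
    for y, mu, var in zip(targets, means, variances):
        total += 0.5 * (math.log(2.0 * math.pi * var) + (y - mu) ** 2 / var)
    return total / len(targets)


# Hypothetical interpolation targets and predicted means at four query times.
# The third query time is assumed to lie far from any observation, so the
# predicted mean there is poor.
targets = [1.0, 1.2, 3.0, 0.9]
means = [1.0, 1.1, 1.5, 1.0]

# Homoscedastic output: one shared variance for every query time.
homo = gaussian_nll(targets, means, [0.25] * 4)

# Heteroscedastic output: small variance where data are dense,
# large variance at the sparsely observed third query time.
hetero = gaussian_nll(targets, means, [0.05, 0.05, 2.0, 0.05])

print("homoscedastic NLL:", homo)
print("heteroscedastic NLL:", hetero)
```

In this toy setting the per-point variances give a lower negative log-likelihood, because the model can be confident where observations are dense and uncertain where they are sparse.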

IEEE/ACM international conference on Connected Health: Applications, Systems and Engineering Technologies (CHASE)

September 12, 2022

Bayesian inference, probabilistic programming, time series, missing data, Bayesian imputation, mobile health

We have developed a toolbox for the specification and estimation of mechanistic models in the dynamic Bayesian network family. This toolbox focuses on making it easier to specify probabilistic dynamical models for time series data and to perform Bayesian inference and imputation in the specified model given incomplete data as input. The toolbox is referred to as BayesLDM. We have been working with members of CP3, CP4, and TR&D2 to develop offline data analysis and simulation models using this toolbox. We are also currently in discussions with members of CP4 to deploy the toolbox’s Bayesian imputation methods within a live controller optimization trial in the context of an adaptive walking intervention.

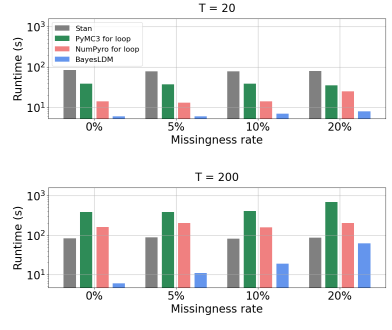

In this paper we present BayesLDM, a system for Bayesian longitudinal data modeling consisting of a high-level modeling language with specific features for modeling complex multivariate time series data coupled with a compiler that can produce optimized probabilistic program code for performing inference in the specified model. BayesLDM supports modeling of Bayesian network models with a specific focus on the efficient, declarative specification of dynamic Bayesian Networks (DBNs). The BayesLDM compiler combines a model specification with inspection of available data and outputs code for performing Bayesian inference for unknown model parameters while simultaneously handling missing data. These capabilities have the potential to significantly accelerate iterative modeling workflows in domains that involve the analysis of complex longitudinal data by abstracting away the process of producing computationally efficient probabilistic inference code. We describe the BayesLDM system components, evaluate the efficiency of representation and inference optimizations and provide an illustrative example of the application of the system to analyzing heterogeneous and partially observed mobile health data.

We present a toolbox for the specification and estimation of mechanistic models in the dynamic Bayesian network family.

Conference on Uncertainty in Artificial Intelligence (UAI 2023)

May 17, 2023

reinforcement learning, partial observability, context inference, adaptive interventions, empirical evaluation, mobile health

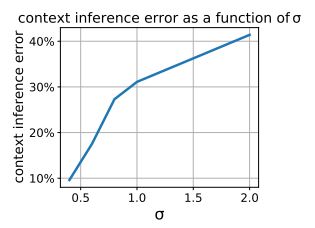

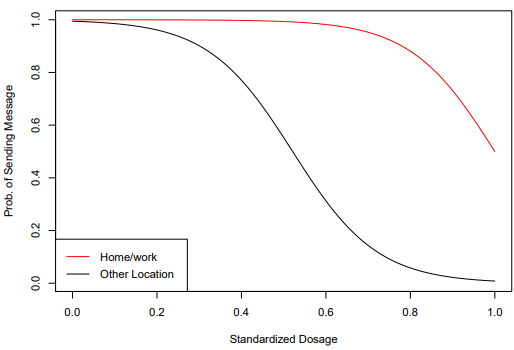

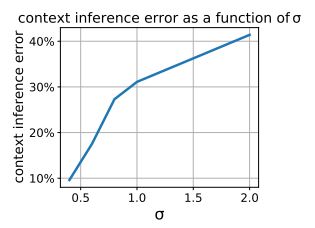

Just-in-Time Adaptive Interventions (JITAIs) are a class of personalized health interventions developed within the behavioral science community. JITAIs aim to provide the right type and amount of support by iteratively selecting a sequence of intervention options from a pre-defined set of components in response to each individual’s time varying state. In this work, we explore the application of reinforcement learning methods to the problem of learning intervention option selection policies. We study the effect of context inference error and partial observability on the ability to learn effective policies. Our results show that the propagation of uncertainty from context inferences is critical to improving intervention efficacy as context uncertainty increases, while policy gradient algorithms can provide remarkable robustness to partially observed behavioral state information.

This work focuses on JITAIs, personalized health interventions that dynamically select support components based on an individual’s changing state. The study applies reinforcement learning methods to learn policies for selecting intervention options, revealing that uncertainty from context inferences is crucial for enhancing intervention efficacy as context uncertainty increases.

arXiv: 2202.06891

February 14, 2022

sequential experiments, counterfactual inference, adaptive randomization, non-linear factor model, mixed effects model, nearest neighbors.

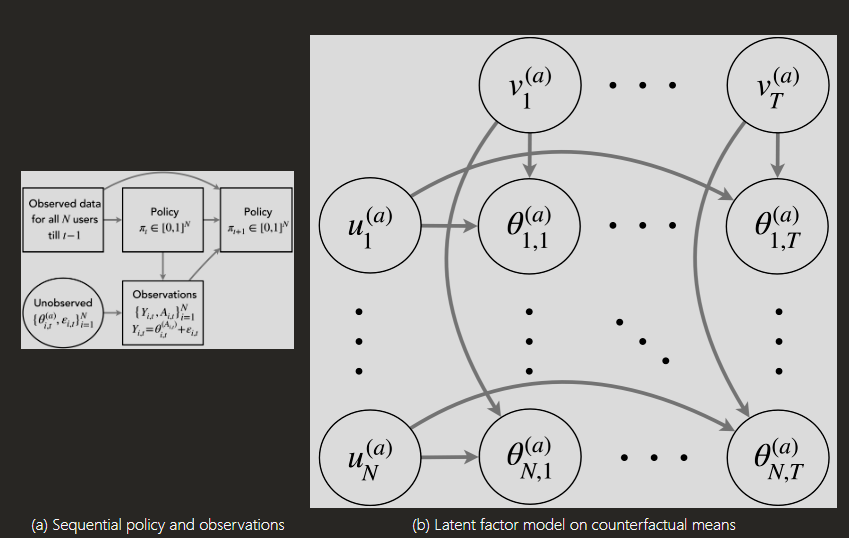

We consider after-study statistical inference for sequentially designed experiments wherein multiple units are assigned treatments for multiple time points using treatment policies that adapt over time. Our goal is to provide inference guarantees for the counterfactual mean at the smallest possible scale – mean outcome under different treatments for each unit and each time – with minimal assumptions on the adaptive treatment policy. Without any structural assumptions on the counterfactual means, this challenging task is infeasible due to more unknowns than observed data points. To make progress, we introduce a latent factor model over the counterfactual means that serves as a non-parametric generalization of the non-linear mixed effects model and the bilinear latent factor model considered in prior works. For estimation, we use a non-parametric method, namely a variant of nearest neighbors, and establish a non-asymptotic high probability error bound for the counterfactual mean for each unit and each time. Under regularity conditions, this bound leads to asymptotically valid confidence intervals for the counterfactual mean as the number of units and time points grow together at suitable rates. We illustrate our theory via several simulations and a case study involving data from a mobile health clinical trial, HeartSteps.

This publication presents a pioneering statistical inference method for sequential experiments where treatments are adaptively assigned to multiple units over time. It addresses the complex challenge of estimating unit-by-time level counterfactual outcomes by introducing a non-linear latent factor model and employing a nearest neighbors approach. The method provides non-asymptotic error bounds and asymptotically valid confidence intervals for counterfactual means, even with adaptive policies that pool data across units, making it highly applicable to real-world scenarios such as mobile health clinical trials like HeartSteps, online education, and personalized recommendations.
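The nearest-neighbors idea can be sketched as follows. This is a simplified illustration, not the estimator analyzed in the paper: the function name, the mean-squared distance over shared treatment times, the threshold `eta`, and the toy data are all assumptions chosen for brevity.

```python
def nn_counterfactual_mean(outcomes, treatments, i, t, a, eta):
    """Estimate the mean outcome of unit i at time t under treatment a.

    outcomes[j][s] and treatments[j][s] hold the observed outcome and the
    assigned treatment for unit j at time s. Returns None if no neighbor
    is available.
    """
    n_times = len(outcomes[i])
    neighbors = []
    for j in range(len(outcomes)):
        if treatments[j][t] != a:
            continue  # unit j was not observed under treatment a at time t
        if j == i:
            neighbors.append(j)  # a unit is always its own neighbor
            continue
        # Compare units i and j on the other times where both received a.
        shared = [
            s for s in range(n_times)
            if s != t and treatments[i][s] == a and treatments[j][s] == a
        ]
        if not shared:
            continue
        dist = sum((outcomes[i][s] - outcomes[j][s]) ** 2 for s in shared) / len(shared)
        if dist <= eta:
            neighbors.append(j)
    if not neighbors:
        return None
    # Average the neighbors' observed outcomes at time t under treatment a.
    return sum(outcomes[j][t] for j in neighbors) / len(neighbors)


# Toy data: 3 units, 4 time points, binary treatment (all values hypothetical).
outcomes = [
    [1.0, 2.0, 1.1, 5.0],
    [1.1, 9.0, 0.9, 3.0],
    [4.0, 2.0, 4.2, 7.0],
]
treatments = [
    [1, 0, 1, 0],
    [1, 1, 1, 1],
    [1, 0, 1, 1],
]

# Unit 0 received treatment 0 at time 3; estimate its mean under treatment 1.
estimate = nn_counterfactual_mean(outcomes, treatments, i=0, t=3, a=1, eta=0.1)
print(estimate)
```

A small `eta` keeps only units whose past outcomes under the same treatment closely track the target unit's, while a large `eta` averages over more units at the cost of bias.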

arXiv: 2410.14659

October 18, 2024

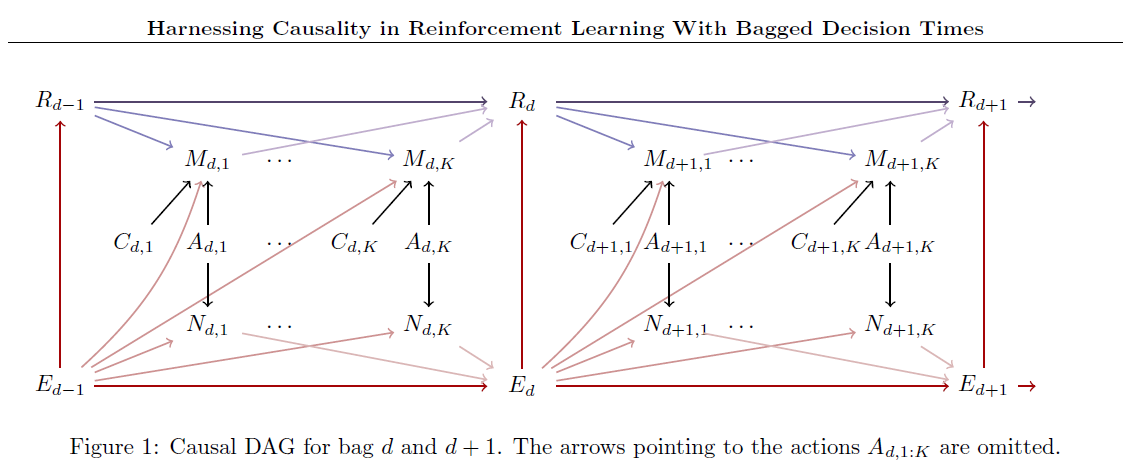

Reinforcement Learning (RL), Bagged Decision Times, Causal Directed Acyclic Graph (DAG), Non-Markovian, Non-stationary, Periodic Markov Decision Process (MDP), Dynamical Bayesian Sufficient Statistic (D-BaSS), Mobile Health (mHealth), HeartSteps, Online RL, Bellman Equations, Randomized Least-Squares Value Iteration (RLSVI), State Construction, Mediators.

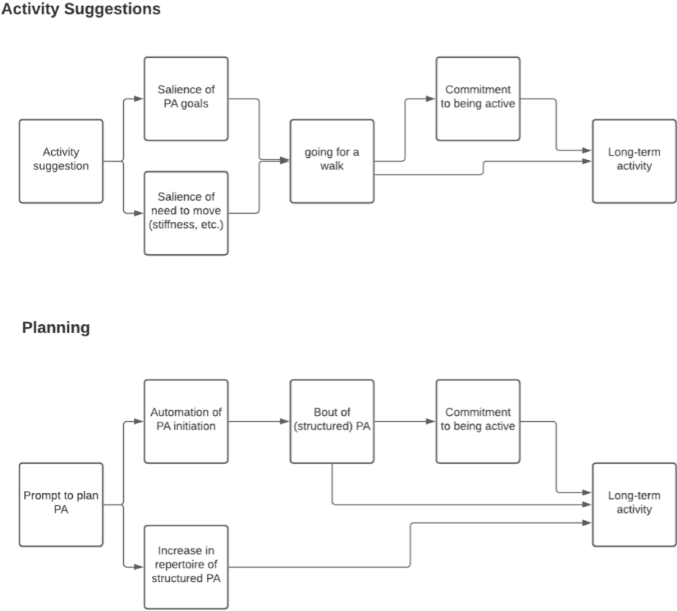

We consider reinforcement learning (RL) for a class of problems with bagged decision times. A bag contains a finite sequence of consecutive decision times. The transition dynamics are non-Markovian and non-stationary within a bag. All actions within a bag jointly impact a single reward, observed at the end of the bag. For example, in mobile health, multiple activity suggestions in a day collectively affect a user’s daily commitment to being active. Our goal is to develop an online RL algorithm to maximize the discounted sum of the bag-specific rewards. To handle non-Markovian transitions within a bag, we utilize an expert-provided causal directed acyclic graph (DAG). Based on the DAG, we construct states as a dynamical Bayesian sufficient statistic of the observed history, which results in Markov state transitions within and across bags. We then formulate this problem as a periodic Markov decision process (MDP) that allows non-stationarity within a period. An online RL algorithm based on Bellman equations for stationary MDPs is generalized to handle periodic MDPs. We show that our constructed state achieves the maximal optimal value function among all state constructions for a periodic MDP. Finally, we evaluate the proposed method on testbed variants built from real data in a mobile health clinical trial.

This publication introduces a novel Reinforcement Learning (RL) framework designed for scenarios with “bagged decision times,” where a sequence of actions within a finite period (a “bag”) jointly influences a single reward observed at the end of that period. Unlike traditional RL that often assumes Markovian and stationary transitions, this work addresses non-Markovian and non-stationary dynamics within a bag. The core innovation lies in leveraging an expert-provided causal Directed Acyclic Graph (DAG) to construct states (specifically, a dynamical Bayesian sufficient statistic) that ensure Markovian transitions both within and across bags. This allows the problem to be formulated as a periodic Markov Decision Process (MDP), generalizing existing RL algorithms based on Bellman equations. The proposed online RL algorithm, Bagged RLSVI, is shown to achieve maximal optimal value functions and is evaluated effectively on testbed variants built from real mobile health (mHealth) data (HeartSteps clinical trial), demonstrating its robustness even with misspecified causal assumptions. The research highlights the significant role of mediators in improving the optimal value function.
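A toy sketch of value iteration for a periodic MDP is given below. It is not Bagged RLSVI: the state (number of suggestions sent so far in the bag), the deterministic transitions, the reward table, and the function `periodic_value_iteration` are hypothetical. They are chosen only to show stage-specific Bellman backups with a single reward at the end of each bag and discounting applied across bags.

```python
def periodic_value_iteration(n_times, bag_reward, gamma=0.9, tol=1e-10):
    """Value iteration for a toy periodic MDP with n_times decisions per bag.

    The state at decision time k is the number of suggestions already sent
    in the current bag. Action 1 sends a suggestion, action 0 does not.
    The only reward, bag_reward(count), arrives after the last decision of
    the bag, after which the count resets to zero for the next bag.
    """
    # values[k][c]: optimal value at decision time k with c suggestions sent.
    values = [[0.0] * (k + 1) for k in range(n_times)]
    while True:
        delta = 0.0
        # Sweep backwards through the period so later stages update first.
        for k in reversed(range(n_times)):
            for c in range(k + 1):
                candidates = []
                for action in (0, 1):
                    if k == n_times - 1:
                        # End of bag: collect the bag reward and discount
                        # the value of starting the next bag.
                        q = bag_reward(c + action) + gamma * values[0][0]
                    else:
                        # Inside the bag: no reward and no discounting.
                        q = values[k + 1][c + action]
                    candidates.append(q)
                new_value = max(candidates)
                delta = max(delta, abs(new_value - values[k][c]))
                values[k][c] = new_value
        if delta < tol:
            return values


# Hypothetical daily reward: one suggestion helps most, more adds burden.
reward_table = [0.0, 1.0, 0.6, 0.2]
values = periodic_value_iteration(3, lambda count: reward_table[count], gamma=0.9)
print(values[0][0])
```

Because the value function is indexed by the decision time within the bag, the backup can use different dynamics at each stage while still converging as a whole over repeated bags.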

Nature

NPJ Digital Medicine

September 14, 2023

mHealth, Cardiac rehabilitation (CR), Randomized clinical trial, Just-in-time adaptive intervention (JITAI), Smartwatch, Smartphone, Physical activity, 6-minute walk test, Cardiovascular disease, Text messages, Mobile application.

Mobile health (mHealth) interventions may enhance positive health behaviors, but randomized trials evaluating their efficacy are uncommon. Our goal was to determine if a mHealth intervention augmented and extended benefits of center-based cardiac rehabilitation (CR) for physical activity levels at 6-months. We delivered a randomized clinical trial to low and moderate risk patients with a compatible smartphone enrolled in CR at two health systems. All participants received a compatible smartwatch and usual CR care. Intervention participants received a mHealth intervention that included a just-in-time-adaptive intervention (JITAI) as text messages. The primary outcome was change in remote 6-minute walk distance at 6-months stratified by device type. Here we report the results for 220 participants enrolled in the study (mean [SD]: age 59.6 [10.6] years; 67 [30.5%] women). For our primary outcome at 6 months, there is no significant difference in the change in 6 min walk distance across smartwatch types (Intervention versus control: +31.1 meters Apple Watch, −7.4 meters Fitbit; p = 0.28). Secondary outcomes show no difference in mean step counts between the first and final weeks of the study, but a change in 6 min walk distance at 3 months for Fitbit users. Amongst patients enrolled in center-based CR, a mHealth intervention did not improve 6-month outcomes but suggested differences at 3 months in some users.

This randomized clinical trial, named the VALENTINE Study, investigated whether a mobile health (mHealth) intervention—consisting of a smartphone application and contextually tailored text messages delivered via smartwatches—could augment and extend the benefits of center-based cardiac rehabilitation (CR) by improving physical activity levels over 6 months. The study enrolled 220 low and moderate risk CR patients who were provided with either an Apple Watch or Fitbit. The primary outcome, change in remote 6-minute walk distance at 6-months, showed no significant difference between the intervention and control groups. While there were suggestive differences at 3 months for Fitbit users regarding 6-minute walk distance, the intervention did not achieve its goal of sustained long-term impact on physical activity.

Biometrika

binary outcome, causal excursion effect, causal inference, longitudinal data, micro-randomized trials, mobile health, relative risk, semiparametric efficiency theory

September 2021

We develop an estimator that can be used as the basis of a primary aim analysis under more plausible assumptions. Simulation studies are conducted to compare the estimators. We illustrate the developed methods using data from the MRT, BariFit. In BariFit, the goal is to support weight maintenance for individuals who received bariatric surgery.

Transactions on Machine Learning Research

November 2023

Online model selection, compositional kernels, Gaussian Process regression, multi-task learning, mobile health (mHealth), Kernel Evolution Model (KEM), personalized learning, bias-variance trade-off, sparsity, stability, adaptive complexity, Dirichlet Process, Chinese Restaurant Process, kernel evolution.

Motivated by the need for efficient, personalized learning in mobile health, we investigate the problem of online compositional kernel selection for multi-task Gaussian Process regression. Existing composition selection methods do not satisfy our strict criteria in health; selection must occur quickly, and the selected kernels must maintain the appropriate level of complexity, sparsity, and stability as data arrives online. We introduce the Kernel Evolution Model (KEM), a generative process on how to evolve kernel compositions in a way that manages the bias-variance trade-off as we observe more data about a user. Using pilot data, we learn a set of kernel evolutions that can be used to quickly select kernels for new test users. KEM reliably selects high-performing kernels for a range of synthetic and real data sets, including two health data sets.

This publication introduces the Kernel Evolution Model (KEM), an innovative approach for online compositional kernel selection in multi-task Gaussian Process regression, specifically designed for mobile health (mHealth) applications. KEM addresses the critical need for efficient, personalized learning by training on pilot data to learn how kernel compositions should evolve over time. This allows KEM to quickly select high-performing kernels for new users that are sparse, stable, and possess adaptive complexity, effectively managing the bias-variance trade-off, especially in low-data scenarios.

Machine Learning, Volume 110, Pages 2685–2727

Thompson-Sampling, mobile health, reinforcement learning

September 2021

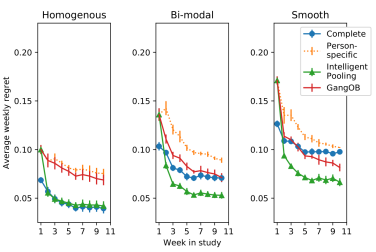

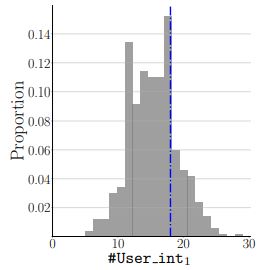

In mobile health (mHealth), smart devices deliver behavioral treatments repeatedly over time to a user with the goal of helping the user adopt and maintain healthy behaviors. Reinforcement learning appears ideal for learning how to optimally make these sequential treatment decisions. However, significant challenges must be overcome before reinforcement learning can be effectively deployed in a mobile healthcare setting. In this work we are concerned with the following challenges: 1) individuals who are in the same context can exhibit differential response to treatments, 2) only a limited amount of data is available for learning on any one individual, and 3) responses to treatment are non-stationary. To address these challenges we generalize Thompson-Sampling bandit algorithms to develop IntelligentPooling. IntelligentPooling learns personalized treatment policies, thus addressing challenge one. To address the second challenge, IntelligentPooling updates each user’s degree of personalization while making use of available data on other users to speed up learning. Lastly, IntelligentPooling allows responsivity to vary as a function of a user’s time since beginning treatment, thus addressing challenge three. We show that IntelligentPooling achieves an average of 26% lower regret than state-of-the-art. We demonstrate the promise of this approach and its ability to learn from even a small group of users in a live clinical trial.

To address the significant challenges that must be overcome before reinforcement learning can be deployed in a mobile healthcare setting, we develop IntelligentPooling by generalizing Thompson-Sampling bandit algorithms.
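For reference, plain Thompson sampling on a Bernoulli bandit looks roughly like the sketch below. It omits everything that distinguishes IntelligentPooling (pooling across users, context, and time-varying responsivity); the function name and the arm success probabilities are illustrative assumptions.

```python
import random


def thompson_bernoulli(success_probs, n_rounds, seed=0):
    """Run Beta-Bernoulli Thompson sampling and return pull counts per arm."""
    rng = random.Random(seed)
    n_arms = len(success_probs)
    successes = [0] * n_arms
    failures = [0] * n_arms
    pulls = [0] * n_arms
    for _ in range(n_rounds):
        # Draw one plausible success rate per arm from its Beta posterior
        # (uniform Beta(1, 1) prior).
        samples = [
            rng.betavariate(1 + successes[k], 1 + failures[k])
            for k in range(n_arms)
        ]
        # Act greedily with respect to the sampled rates.
        arm = max(range(n_arms), key=lambda k: samples[k])
        # Simulate the binary response to the chosen treatment.
        reward = 1 if rng.random() < success_probs[arm] else 0
        successes[arm] += reward
        failures[arm] += 1 - reward
        pulls[arm] += 1
    return pulls


# Two hypothetical treatment options with different response rates.
pulls = thompson_bernoulli([0.2, 0.8], n_rounds=2000, seed=1)
print(pulls)
```

Sampling from the posterior, rather than using its mean, is what drives exploration: an arm with little data has a wide posterior and is occasionally sampled high enough to be tried.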

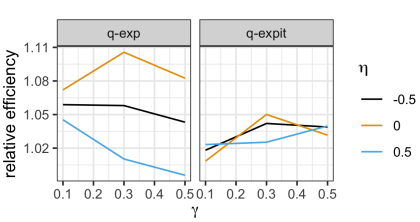

arXiv:2306.10983

June 27, 2023

effect-invariant mechanisms, policy generalization, machine learning

The Annals of Statistics

December 21, 2022

average reward, doubly robust estimator, Markov Decision Process, policy optimization

We consider the batch (off-line) policy learning problem in the infinite horizon Markov decision process. Motivated by mobile health applications, we focus on learning a policy that maximizes the long-term average reward. We propose a doubly robust estimator for the average reward and show that it achieves semiparametric efficiency. Further, we develop an optimization algorithm to compute the optimal policy in a parameterized stochastic policy class. The performance of the estimated policy is measured by the difference between the optimal average reward in the policy class and the average reward of the estimated policy and we establish a finite-sample regret guarantee. The performance of the method is illustrated by simulation studies and an analysis of a mobile health study promoting physical activity.

We consider batch policy learning in an infinite horizon Markov Decision Process, focusing on optimizing a policy for long-term average reward in the context of mobile health applications.

IEEE International Conference on Digital Health (ICDH)

July 10, 2022

learning systems, optimized production technology, behavioral sciences, electronic healthcare, decision trees

To promote healthy behaviors, many mobile health applications provide message-based interventions, such as tips, motivational messages, or suggestions for healthy activities. Ideally, the intervention policies should be carefully designed so that users obtain the benefits without being overwhelmed by overly frequent messages. As part of the HeartSteps physical-activity intervention, users receive messages intended to disrupt sedentary behavior. HeartSteps uses an algorithm to uniformly spread out the daily message budget over time, but does not attempt to maximize treatment effects. This limitation motivates constructing a policy to optimize the message delivery decisions for more effective treatments. Moreover, the learned policy needs to be interpretable to enable behavioral scientists to examine it and to inform future theorizing. We address this problem by learning an effective and interpretable policy that reduces sedentary behavior. We propose Optimal Policy Trees + (OPT+), an innovative batch off-policy learning method that combines personalized threshold learning with an extension of Optimal Policy Trees under a budget-constrained setting. We implement and test the method using data collected in HeartSteps V2N3. Computational results demonstrate a significant reduction in sedentary behavior with a lower delivery budget. OPT+ produces a highly interpretable and stable output decision tree, thus enabling theoretical insights to guide future research.

Online RL faces challenges like real-time stability and handling complex, unpredictable environments; to address these issues, the PCS framework originally used in supervised learning is extended to guide the design of RL algorithms for such settings, including guidelines for creating simulation environments, as exemplified in the development of an RL algorithm for the mobile health study Oralytics aimed at enhancing tooth-brushing behaviors through personalized intervention messages.

arXiv:2304.05365v6

August 7, 2023

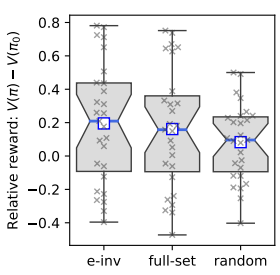

reinforcement learning, personalization, resampling, exploratory data analysis, mobile health

There is a growing interest in using reinforcement learning (RL) to personalize sequences of treatments in digital health to support users in adopting healthier behaviors. Such sequential decision-making problems involve decisions about when to treat and how to treat based on the user’s context (e.g., prior activity level, location, etc.). Online RL is a promising data-driven approach for this problem as it learns based on each user’s historical responses and uses that knowledge to personalize these decisions. However, to decide whether the RL algorithm should be included in an “optimized” intervention for real-world deployment, we must assess the data evidence indicating that the RL algorithm is actually personalizing the treatments to its users. Due to the stochasticity in the RL algorithm, one may get a false impression that it is learning in certain states and using this learning to provide specific treatments. We use a working definition of personalization and introduce a resampling-based methodology for investigating whether the personalization exhibited by the RL algorithm is an artifact of the RL algorithm stochasticity. We illustrate our methodology with a case study by analyzing the data from a physical activity clinical trial called HeartSteps, which included the use of an online RL algorithm. We demonstrate how our approach enhances data-driven truth-in-advertising of algorithm personalization both across all users as well as within specific users in the study.

We use a working definition of personalization and introduce a resampling-based methodology for investigating whether the personalization exhibited by the RL algorithm is an artifact of the RL algorithm stochasticity.

Psychological Methods

January 13, 2022


Statistical Science: a review journal of the Institute of Mathematical Statistics

causal inference, endogenous covariates, linear mixed model, micro-randomized trial

October 2020

Mobile health is a rapidly developing field in which behavioral treatments are delivered to individuals via wearables or smartphones to facilitate health-related behavior change. Micro-randomized trials (MRT) are an experimental design for developing mobile health interventions. In an MRT the treatments are randomized numerous times for each individual over the course of the trial. Along with assessing treatment effects, behavioral scientists aim to understand between-person heterogeneity in the treatment effect. A natural approach is the familiar linear mixed model. However, directly applying linear mixed models is problematic because potential moderators of the treatment effect are frequently endogenous, that is, they may depend on prior treatment. We discuss model interpretation and biases that arise in the absence of additional assumptions when endogenous covariates are included in a linear mixed model. In particular, when there are endogenous covariates, the coefficients no longer have the customary marginal interpretation. However, these coefficients still have a conditional-on-the-random-effect interpretation. We provide an additional assumption that, if true, allows scientists to use standard software to fit linear mixed models with endogenous covariates, and person-specific predictions of effects can be provided. As an illustration, we assess the effect of activity suggestion in the HeartSteps MRT and analyze the between-person treatment effect heterogeneity.

We discuss model interpretation and biases that arise in the absence of additional assumptions when endogenous covariates are included in a linear mixed model. In particular, when there are endogenous covariates, the coefficients no longer have the customary marginal interpretation.

Journal of the American Statistical Association

sequential decision making, policy evaluation, markov decision process, reinforcement learning

2021

In this paper, we provide an approach for conducting inference about the performance of one or more such policies using historical data collected under a possibly different policy.
