The mDOT Center

Transforming health and wellness via temporally-precise mHealth interventions

mDOT@MD2K.org

901.678.1526

901.678.1526

Collaborating Investigator:

Dr. Nabil Alshurafa, Northwestern University

Funding Status:

NIH/NIDDK

1/1/18 – 11/30/22

Associated with:

Yun C Zhang, Shibo Zhang, Miao Liu, Elyse Daly, Samuel Battalio, Santosh Kumar, Bonnie Spring, James M Rehg, Nabil Alshurafa

Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies

September 4, 2024

Accelerometry, Automatic Synchronization, Temporal Drift, Time Synchronization, Video, Wearable Camera, Wearable Sensor. Additional relevant keywords from the index terms include Human-centered computing, Ubiquitous and mobile computing, Ubiquitous and mobile computing design and evaluation methods, and Ubiquitous and mobile computing systems and tools.

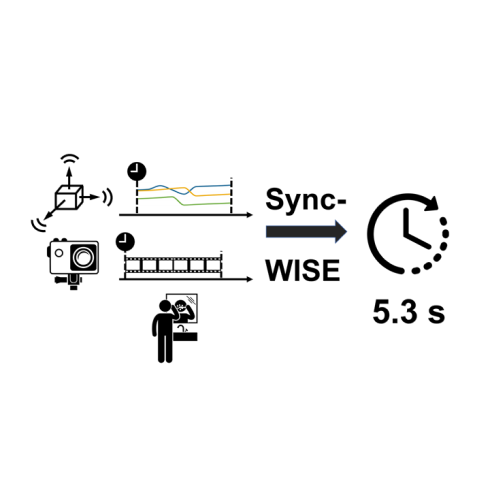

The development and validation of computational models to detect daily human behaviors (e.g., eating, smoking, brushing) using wearable devices requires labeled data collected from the natural field environment, with tight time synchronization of the micro-behaviors (e.g., start/end times of hand-to-mouth gestures during a smoking puff or an eating gesture) and the associated labels. Video data is increasingly being used for such label collection. Unfortunately, wearable devices and video cameras with independent (and drifting) clocks make tight time synchronization challenging. To address this issue, we present the Window Induced Shift Estimation method for Synchronization (SyncWISE) approach. We demonstrate the feasibility and effectiveness of our method by synchronizing the timestamps of a wearable camera and wearable accelerometer from 163 videos representing 45.2 hours of data from 21 …

SyncWISE is a novel method for accurately synchronizing data from wearable cameras and accelerometers, crucial for developing computational models to detect daily human behaviors from natural field environments. It addresses the challenge of independent and drifting clocks between devices, demonstrating significant improvement over existing methods even with high data loss, achieving 90% synchronization accuracy within a 700-millisecond tolerance.

Obesity, caused primarily by overeating relative to need, is a preventable chronic disease that exacts staggering healthcare costs. SP4 aims to identify eating patterns via wearable sensor data that characterize episodes of excess calorie intake. This approach may facilitate detection of known (e.g., emotional eating, impulsive eating in response to cues, hedonic eating) and novel eating phenotypes that have different treatment implications. Being able to detect overeating objectively and passively, learning to predict it, and then intervene in a manner that adapts to an individual’s problematic overeating profile paves the way toward personalized behavioral medicine interventions. In particular, the promise of real time sensing combined with machine-learning based detection models is the ability to measure eating objectively (minimizing self-report bias), precisely (with high sensitivity and specificity), passively (without burden or disruption), and dynamically (detecting rapidly changing states).

However, one of the primary obstacles to carrying out this work is the need to obtain high-quality labeled data regarding when eating episodes occur so that supervised machine learning methods can be used to detect the occurrence of eating. To this end, SP3’s research focuses on simultaneous collection of multi-modal sensor data along with video data from a body-worn camera. The advantage of this approach is that the video data can be synchronized with the sensor data and labeled post-hoc by trained study personnel, resulting is accurate labels for learning eating detection models. The disadvantage of this approach is that it requires extensive effort on the part of the study personnel to review and annotate many hours of data from each study subject.

To help reduce the effort required to accurately label data, Dr. Alshurafa will integrate uncertainty modeling methods produced by TR&D1 Aim1 into a graphical interface for video-driven labeling that his team is developing. Models provided by TR&D1 will be iteratively learned as data are labeled. To help speed the labeling process, the learned model at any stage can be used to determine unlabeled regions of video with the highest predicted label uncertainty and annotators can focus their labeling efforts on these regions. This is a form of generalized active learning where uncertainty models are used to issue suggestions for regions of interest that annotators can then refine as needed to ensure that SP3’s requirements for precise segmentation and labeling are met.

You must be logged in to post a comment.

No Comments